Deep High-Resolution Representation Learning for Human Pose Estimation

Official Repo

Code Snippet

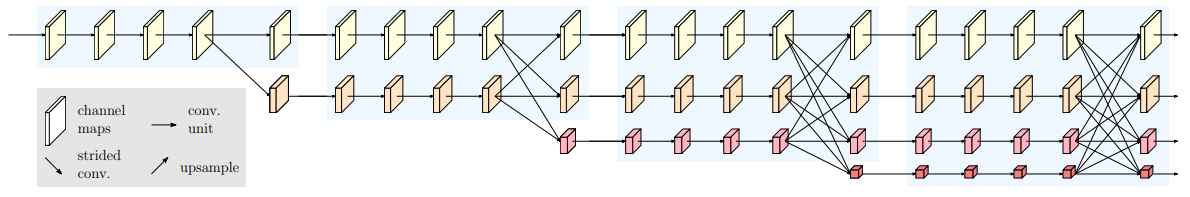

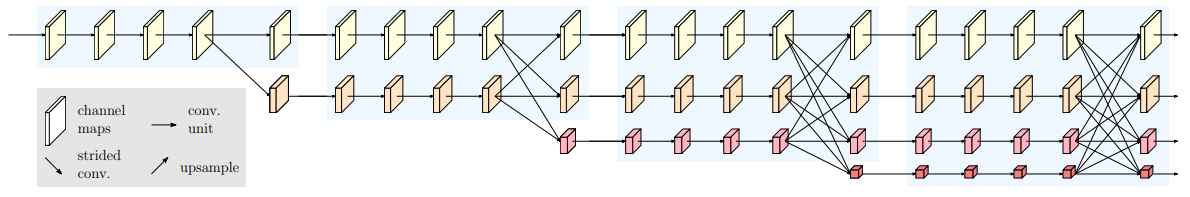

High-resolution representations are essential for position-sensitive vision problems, such as human pose estimation, semantic segmentation, and object detection. Existing state-of-the-art frameworks first encode the input image as a low-resolution representation through a subnetwork formed by connecting high-to-low resolution convolutions *in series* (e.g., ResNet, VGGNet), and then recover the high-resolution representation from the encoded low-resolution representation. Instead, our proposed network, named the High-Resolution Network (HRNet), maintains high-resolution representations through the whole process. It has two key characteristics: (i) the high-to-low resolution convolution streams are connected *in parallel*; (ii) information is repeatedly exchanged across resolutions. The benefit is that the resulting representation is semantically richer and spatially more precise. We show the superiority of the proposed HRNet in a wide range of applications, including human pose estimation, semantic segmentation, and object detection, suggesting that HRNet is a stronger backbone for computer vision problems. The code is publicly available.
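The two characteristics in the abstract (parallel streams and repeated exchange across resolutions) can be illustrated with a toy sketch. This is not the official implementation: it works on 1-D lists instead of image tensors, uses average pooling and nearest-neighbour repetition as stand-ins for the learned strided and upsampling convolutions, and the names `downsample`, `upsample`, `resample`, and `exchange` are hypothetical helpers introduced only for this example.

```python
# Toy 1-D illustration of parallel multi-resolution streams with repeated
# exchange. Assumption: learned convolutions are replaced by fixed pooling
# and repetition so the data flow is easy to follow.

def downsample(signal, factor):
    """Average-pool a 1-D list by `factor` (stand-in for a strided convolution)."""
    return [sum(signal[i:i + factor]) / factor
            for i in range(0, len(signal), factor)]


def upsample(signal, factor):
    """Repeat each value `factor` times (stand-in for learned upsampling)."""
    return [value for value in signal for _ in range(factor)]


def resample(signal, src_stride, dst_stride):
    """Bring a stream from stride `src_stride` to stride `dst_stride`."""
    if dst_stride > src_stride:
        return downsample(signal, dst_stride // src_stride)
    if dst_stride < src_stride:
        return upsample(signal, src_stride // dst_stride)
    return list(signal)


def exchange(streams):
    """One exchange step: every output stream is the element-wise sum of
    all input streams resampled to that stream's resolution."""
    fused = {}
    for dst_stride in streams:
        resampled = [resample(sig, src_stride, dst_stride)
                     for src_stride, sig in streams.items()]
        fused[dst_stride] = [sum(values) for values in zip(*resampled)]
    return fused


# Three parallel streams at strides 1, 2 and 4 (keys are the strides).
high = [1.0, 3.0, 5.0, 7.0, 2.0, 4.0, 6.0, 8.0]
streams = {1: high, 2: downsample(high, 2), 4: downsample(high, 4)}

# Repeated exchange: the stride-1 stream is kept at full length throughout,
# rather than being recovered from a low-resolution encoding at the end.
for _ in range(2):
    streams = exchange(streams)
```

After any number of exchange steps the stride-1 stream still has its original length, which is the property the abstract contrasts with encode-then-recover designs.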

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x1024 | 40000 | 1.7 | 23.74 | V100 | 73.86 | 75.91 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x1024 | 40000 | 2.9 | 12.97 | V100 | 77.19 | 78.92 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x1024 | 40000 | 6.2 | 6.42 | V100 | 78.48 | 79.69 | config | model \| log |
| FCN | HRNetV2p-W18-Small | 512x1024 | 80000 | - | - | V100 | 75.31 | 77.48 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x1024 | 80000 | - | - | V100 | 78.65 | 80.35 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x1024 | 80000 | - | - | V100 | 79.93 | 80.72 | config | model \| log |
| FCN | HRNetV2p-W18-Small | 512x1024 | 160000 | - | - | V100 | 76.31 | 78.31 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x1024 | 160000 | - | - | V100 | 78.80 | 80.74 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x1024 | 160000 | - | - | V100 | 80.65 | 81.92 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x512 | 80000 | 3.8 | 38.66 | V100 | 31.38 | 32.45 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 80000 | 4.9 | 22.57 | V100 | 36.27 | 37.28 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 80000 | 8.2 | 21.23 | V100 | 41.90 | 43.27 | config | model \| log |
| FCN | HRNetV2p-W18-Small | 512x512 | 160000 | - | - | V100 | 33.07 | 34.56 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 160000 | - | - | V100 | 36.79 | 38.58 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 160000 | - | - | V100 | 42.02 | 43.86 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x512 | 20000 | 1.8 | 43.36 | V100 | 65.5 | 68.89 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 20000 | 2.9 | 23.48 | V100 | 72.30 | 74.71 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 20000 | 6.2 | 22.05 | V100 | 75.87 | 78.58 | config | model \| log |
| FCN | HRNetV2p-W18-Small | 512x512 | 40000 | - | - | V100 | 66.61 | 70.00 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 40000 | - | - | V100 | 72.90 | 75.59 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 40000 | - | - | V100 | 76.24 | 78.49 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W48 | 480x480 | 40000 | 6.1 | 8.86 | V100 | 45.14 | 47.42 | config | model \| log |
| FCN | HRNetV2p-W48 | 480x480 | 80000 | - | - | V100 | 45.84 | 47.84 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W48 | 480x480 | 40000 | - | - | V100 | 50.33 | 52.83 | config | model \| log |
| FCN | HRNetV2p-W48 | 480x480 | 80000 | - | - | V100 | 51.12 | 53.56 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x512 | 80000 | 1.59 | 24.87 | V100 | 49.28 | 49.42 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 80000 | 2.76 | 12.92 | V100 | 50.81 | 50.95 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 80000 | 6.20 | 9.61 | V100 | 51.42 | 51.64 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x512 | 80000 | 1.58 | 36.00 | V100 | 77.64 | 78.8 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 80000 | 2.76 | 19.25 | V100 | 78.26 | 79.24 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 80000 | 6.20 | 16.42 | V100 | 78.39 | 79.34 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 512x512 | 80000 | 1.58 | 38.11 | V100 | 71.81 | 73.1 | config | model \| log |
| FCN | HRNetV2p-W18 | 512x512 | 80000 | 2.76 | 19.55 | V100 | 72.57 | 74.09 | config | model \| log |
| FCN | HRNetV2p-W48 | 512x512 | 80000 | 6.20 | 17.25 | V100 | 72.50 | 73.52 | config | model \| log |

| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |
| ------ | -------- | --------- | ------- | -------- | -------------- | ------ | ---- | ------------- | ------ | -------- |
| FCN | HRNetV2p-W18-Small | 896x896 | 80000 | 4.95 | 13.84 | V100 | 62.30 | 62.97 | config | model \| log |
| FCN | HRNetV2p-W18 | 896x896 | 80000 | 8.30 | 7.71 | V100 | 65.06 | 65.60 | config | model \| log |
| FCN | HRNetV2p-W48 | 896x896 | 80000 | 16.89 | 7.34 | V100 | 67.80 | 68.53 | config | model \| log |
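For readers unfamiliar with the mIoU columns, the metric can be sketched as follows. This is a minimal illustration of mean intersection-over-union computed from a confusion matrix, not the benchmark's evaluation code: the function name `mean_iou` and the example matrix are hypothetical, and details such as ignored labels or the multi-scale and flip testing behind the `mIoU(ms+flip)` column are not modelled.

```python
def mean_iou(confusion):
    """Mean IoU in percent from a square confusion matrix.

    confusion[i][j] is the number of pixels whose ground-truth class is i
    and whose predicted class is j.
    """
    num_classes = len(confusion)
    ious = []
    for c in range(num_classes):
        true_pos = confusion[c][c]
        false_neg = sum(confusion[c]) - true_pos
        false_pos = sum(confusion[r][c] for r in range(num_classes)) - true_pos
        denom = true_pos + false_pos + false_neg
        if denom > 0:  # skip classes absent from both prediction and ground truth
            ious.append(true_pos / denom)
    if not ious:
        return 0.0
    return 100.0 * sum(ious) / len(ious)


# Hypothetical two-class example (pixel counts are made up for illustration).
confusion = [
    [50, 10],  # ground-truth class 0: 50 correct, 10 predicted as class 1
    [5, 35],   # ground-truth class 1: 5 predicted as class 0, 35 correct
]
score = mean_iou(confusion)
```

Per-class IoU is TP / (TP + FP + FN), and mIoU is the unweighted mean over classes, so rare classes count as much as frequent ones.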

Note:

@inproceedings{SunXLW19,
  title={Deep High-Resolution Representation Learning for Human Pose Estimation},
  author={Ke Sun and Bin Xiao and Dong Liu and Jingdong Wang},
  booktitle={CVPR},
  year={2019}
}