Problem in running Zundel example #7


Hello,

Replies: 4 comments 2 replies


Hi rajorshichat, this sounds like an issue with your n2p2 setup. Best,




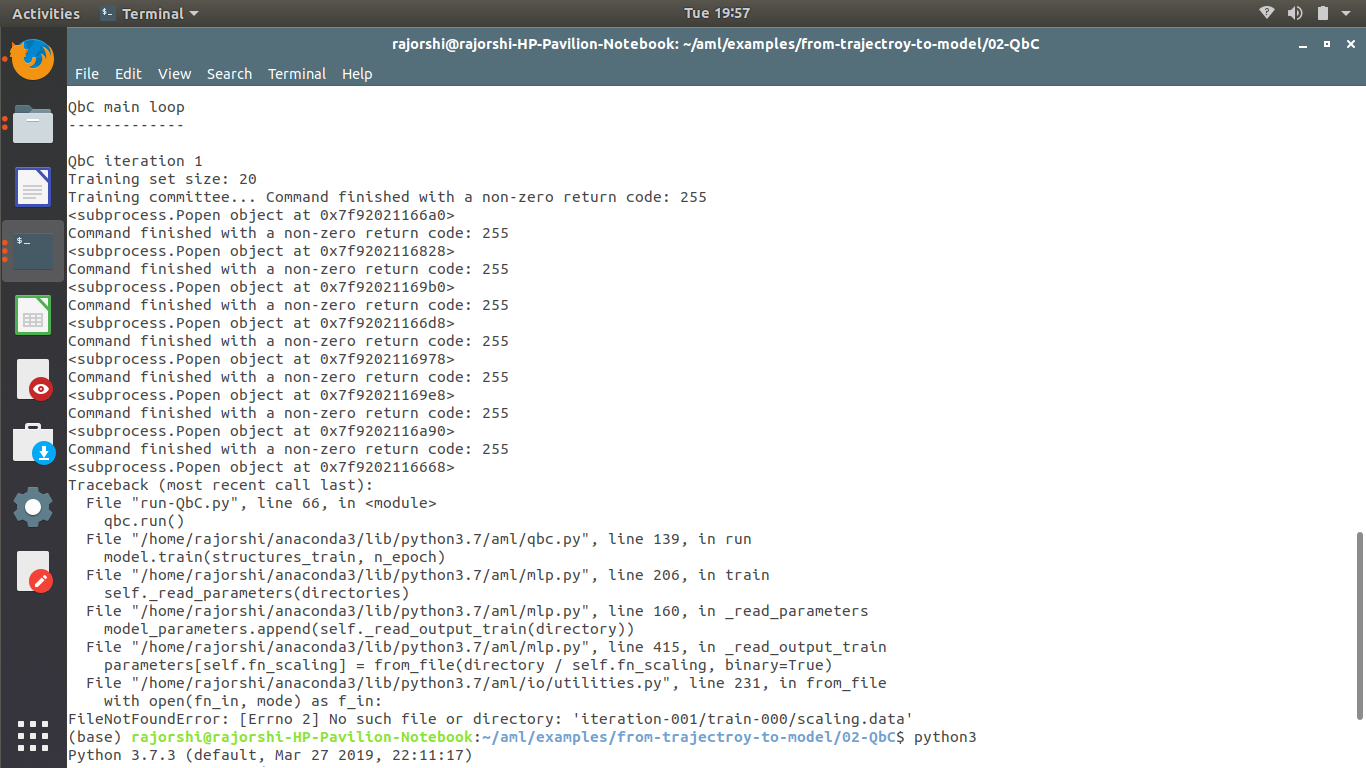

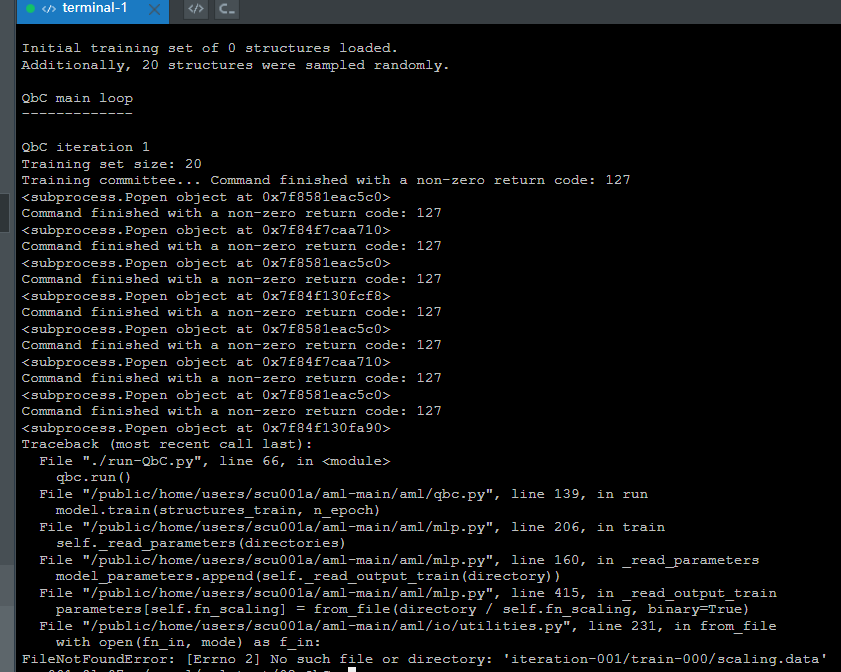

Hi Christoph, I have set `n_core_task = 1` in `example/02/run-Qbc.py` and ran it directly with `./run-Qbc.py`. It did not work and produced the errors shown in the screenshots above. What should I do then?

Your error output indicates problems with MPI. You are using `mpich`, which seems to have trouble with our default MPI command used to initiate the training. A quick workaround should be to change this line

aml/aml/mlp.py

Line 364 in f8adf1a

to

Other recommendations would be to check that n2p2 was compiled with `mpich` and that the number of cores accessible to the QbC run does not exceed `n_tasks*n_core_task`, which is 24 cores in the example.

Another workaround is to set `n_core_task=1`, which would trigger a serial n2p2 run. You can still parallelize up to the number of committee members with `n_tasks`.
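The core-count recommendation can be checked before starting a run. The following is only a minimal sketch using the Python standard library. The helper names `accessible_cores` and `cores_within_budget` are hypothetical and not part of AML or n2p2, and the split `n_tasks=8`, `n_core_task=3` is an assumed illustration of a product of 24, since the thread only states the product.

```python
import os


def accessible_cores():
    """Return the number of CPU cores this process is allowed to use."""
    # sched_getaffinity honors affinity masks (e.g. set by taskset or a
    # batch scheduler) but is only available on some platforms such as Linux.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    # Fallback: total logical cores, or 1 if that cannot be determined.
    return os.cpu_count() or 1


def cores_within_budget(n_tasks, n_core_task, available=None):
    """Check the condition from the reply: the accessible cores should
    not exceed n_tasks * n_core_task."""
    if available is None:
        available = accessible_cores()
    return available <= n_tasks * n_core_task


if __name__ == "__main__":
    # Assumed illustrative split; only the product (24) is given in the thread.
    n_tasks, n_core_task = 8, 3
    ok = cores_within_budget(n_tasks, n_core_task)
    print(f"accessible cores: {accessible_cores()}, "
          f"budget: {n_tasks * n_core_task}, within budget: {ok}")
```

If the check fails, restricting the cores visible to the run (for example through the scheduler's allocation) or setting `n_core_task=1` as suggested above are the options mentioned in the reply.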