diff --git a/materials/sections/adc-intro-to-policies.Rmd b/materials/sections/adc-intro-to-policies.Rmd

deleted file mode 100644

index c98e84e3..00000000

--- a/materials/sections/adc-intro-to-policies.Rmd

+++ /dev/null

@@ -1,126 +0,0 @@

-## Introduction to the Arctic Data Center and NSF Standards and Policies

-

-### Learning Objectives

-

-In this lesson, we will discuss:

-

-- The mission and structure of the Arctic Data Center

-- How the Arctic Data Center supports the research community

-- About data policies from the NSF Arctic program

-

-### Arctic Data Center - History and Introduction

-

-The Arctic Data Center is the primary data and software repository for the Arctic section of National Science Foundation’s Office of Polar Programs (NSF OPP).

-

-We’re best known in the research community as a data archive – researchers upload their data to preserve it for the future and make it available for re-use. This isn’t the end of that data’s life, though. These data can then be downloaded for different analyses or synthesis projects. In addition to being a data discovery portal, we also offer top-notch tools, support services, and training opportunities. We also provide data rescue services.

-

-

-

-NSF has long had a commitment to data reuse and sharing. Since our start in 2016, we’ve grown substantially – from that original 4 TB of data from ACADIS to now over 76 TB at the start of 2023. In 2021 alone, we saw 16% growth in dataset count, and about 30% growth in data volume. This increase has come from advances in tools – both ours and of the scientific community, plus active community outreach and a strong culture of data preservation from NSF and from researchers. We plan to add more storage capacity in the coming months, as researchers are coming to us with datasets in the terabytes, and we’re excited to preserve these research products in our archive. We’re projecting our growth to be around several hundred TB this year, which has a big impact on processing time. Give us a heads up if you’re planning on having larger submissions so that we can work with you and be prepared for a large influx of data.

-

-

-

-The data that we have in the Arctic Data Center comes from a wide variety of disciplines. These different programs within NSF all have different focuses – the Arctic Observing Network supports scientific and community-based observations of biodiversity, ecosystems, human societies, land, ice, marine and freshwater systems, and the atmosphere as well as their social, natural, and/or physical environments, so that encompasses a lot right there in just that one program. We’re also working on a way right now to classify the datasets by discipline, so keep an eye out for that coming soon.

-

-

-

-Along with that diversity of disciplines comes a diversity of file types. The most common file type we have are image files in four different file types. Probably less than 200-300 of the datasets have the majority of those images – we have some large datasets that have image and/or audio files from drones. Most of those 6600+ datasets are tabular datasets. There’s a large diversity of data files, though, whether you want to look at remote sensing images, listen to passive acoustic audio files, or run applications – or something else entirely. We also cover a long period of time, at least by human standards. The data represented in our repository spans across centuries.

-

-

-

-We also have data that spans the entire Arctic, as well as the sub-Arctic, regions.

-

-

-

-### Data Discovery Portal

-

-To browse the data catalog, navigate to [arcticdata.io](https://arcticdata.io/). Go to the top of the page and under data, go to search. Right now, you’re looking at the whole catalog. You can narrow your search down by the map area, a general search, or searching by an attribute.

-

-

-Clicking on a dataset brings you to this page. You have the option to download all the files by clicking the green “Download All” button, which will zip together all the files in the dataset to your Downloads folder. You can also pick and choose to download just specific files.

-

-

-

-All the raw data is in open formats to make it easily accessible and compliant with [FAIR](https://www.go-fair.org/fair-principles/) principles – for example, tabular documents are in .csv (comma separated values) rather than Excel documents.

-

-The metrics at the top give info about the number of citations with this data, the number of downloads, and the number of views. This is what it looks like when you click on the Downloads tab for more information.

-

-

-

-Scroll down for more info about the dataset – abstract, keywords. Then you’ll see more info about the data itself. This shows the data with a description, as well as info about the attributes (or variables or parameters) that were measured. The green check mark indicates that those attributes have been annotated, which means the measurements have a precise definition to them. Scrolling further, we also see who collected the data, where they collected it, and when they collected it, as well as any funding information like a grant number. For biological data, there is the option to add taxa.

-

-### Tools and Infrastructure

-

-Across all our services and partnership, we are strongly aligned with the community principles of making data FAIR (Findable, Accesible, Interoperable and Reusable).

-

-

-

-We have a number of tools available to submitters and researchers who are there to download data. We also partner with other organizations, like [Make Data Count](https://makedatacount.org/) and [DataONE](https://www.dataone.org/), and leverage those partnerships to create a better data experience.

-

-

-

-One of those tools is provenance tracking. With provenance tracking, users of the Arctic Data Center can see exactly what datasets led to what product, using the particular script that the researcher ran.

-

-

-

-Another tool are our Metadata Quality Checks. We know that data quality is important for researchers to find datasets and to have trust in them to use them for another analysis. For every submitted dataset, the metadata is run through a quality check to increase the completeness of submitted metadata records. These checks are seen by the submitter as well as are available to those that view the data, which helps to increase knowledge of how complete their metadata is before submission. That way, the metadata that is uploaded to the Arctic Data Center is as complete as possible, and close to following the guideline of being understandable to any reasonable scientist.

-

-

-

-### Support Services

-

-Metadata quality checks are the automatic way that we ensure quality of data in the repository, but the real quality and curation support is done by our curation [team](https://arcticdata.io/team/). The process by which data gets into the Arctic Data Center is iterative, meaning that our team works with the submitter to ensure good quality and completeness of data. When a submitter submits data, our team gets a notification and beings to evaluate the data for upload. They then go in and format it for input into the catalog, communicating back and forth with the researcher if anything is incomplete or not communicated well. This process can take anywhere from a few days or a few weeks, depending on the size of the dataset and how quickly the researcher gets back to us. Once that process has been completed, the dataset is published with a DOI (digital object identifier).

-

-

-

-### Training and Outreach

-

-In addition to the tools and support services, we also interact with the community via trainings like this one and outreach events. We run workshops at conferences like the American Geophysical Union, Arctic Science Summit Week and others. We also run an intern and fellows program, and webinars with different organizations. We’re invested in helping the Arctic science community learn reproducible techniques, since it facilitates a more open culture of data sharing and reuse.

-

-

-

-We strive to keep our fingers on the pulse of what researchers like yourselves are looking for in terms of support. We’re active on [Twitter](https://twitter.com/arcticdatactr) to share Arctic updates, data science updates, and specifically Arctic Data Center updates, but we’re also happy to feature new papers or successes that you all have had with working with the data. We can also take data science questions if you’re running into those in the course of your research, or how to make a quality data management plan. Follow us on Twitter and interact with us – we love to be involved in your research as it’s happening as well as after it’s completed.

-

-

-

-

-### Data Rescue

-

-We also run data rescue operations. We digitiazed Autin Post's collection of glacier photos that were taken from 1964 to 1997. There were 100,000+ files and almost 5 TB of data to ingest, and we reconstructed flight paths, digitized the images of his notes, and documented image metadata, including the camera specifications.

-

-

-

-### Who Must Submit

-

-Projects that have to submit their data include all Arctic Research Opportunities through the NSF Office of Polar Programs. That data has to be uploaded within two years of collection. The Arctic Observing Network has a shorter timeline – their data products must be uploaded within 6 months of collection. Additionally, we have social science data, though that data often has special exceptions due to sensitive human subjects data. At the very least, the metadata has to be deposited with us.

-

-**Arctic Research Opportunities (ARC)**

-

-- Complete metadata and all appropriate data and derived products

-- Within 2 years of collection or before the end of the award, whichever comes first

-

-**ARC Arctic Observation Network (AON)**

-

-- Complete metadata and all data

-- Real-time data made public immediately

-- Within 6 months of collection

-

-**Arctic Social Sciences Program (ASSP)**

-

-- NSF policies include special exceptions for ASSP and other awards that contain sensitive data

-- Human subjects, governed by an Institutional Review Board, ethically or legally sensitive, at risk of decontextualization

-- Metadata record that documents non-sensitive aspects of the project and data

- - Title, Contact information, Abstract, Methods

-

-For more complete information see our "Who Must Submit" [webpage](https://arcticdata.io/submit/#who-must-submit)

-

-Recognizing the importance of sensitive data handling and of ethical treatment of all data, the Arctic Data Center submission system provides the opportunity for researchers to document the ethical treatment of data and how collection is aligned with community principles (such as the CARE principles). Submitters may also tag the metadata according to community develop data sensitivity tags. We will go over these features in more detail shortly.

-

-

-

-### Summary

-

-All the above informtion can be found on our website or if you need help, ask our support team at support@arcticdata.io or tweet us \@arcticdatactr!

-

-

-

diff --git a/materials/sections/closing-materials.Rmd b/materials/sections/closing-materials.Rmd

deleted file mode 100644

index 45a92e12..00000000

--- a/materials/sections/closing-materials.Rmd

+++ /dev/null

@@ -1,35 +0,0 @@

-### Material to include:

-

-- Project Messaging

-- Methods / Analytical Process

-- Next Steps

-

-#### Project Messaging

-

-Present your message box. High Level. You can structure it within the envelope style visual format or as a section based document.

-

-Make sure to include:

-

-- Audience

-- Issue

-- Problem

-- So What?

-- Solution

-- Benefits

-

-

-

-#### Methods / Analytical Process

-

-Provide an update on your approaches to solving your 'Problem'. How are you tackling this? If multiple elements, describe each. Present the workflow for your synthesis.

-

-

-

-#### Next Steps

-

-Articulate your plan for the next steps of the project. Some things to consider as you plan:

-

-- The one-day workshop is January 24th

-- January deliverables include analyses and results

- - What needs do you anticipate for data / code support

-- Project funding ends May 2022

diff --git a/materials/sections/collaboration-social-data-policies.Rmd b/materials/sections/collaboration-social-data-policies.Rmd

deleted file mode 100644

index dd00f42a..00000000

--- a/materials/sections/collaboration-social-data-policies.Rmd

+++ /dev/null

@@ -1,172 +0,0 @@

-

-## Developing a Code of Conduct

-

-Whether you are joining a lab group or establishing a new collaboration, articulating a set of shared agreements about how people in the group will treat each other will help create the conditions for successful collaboration. If agreements or a code of conduct do not yet exist, invite a conversation among all members to create them. Co-creation of a code of conduct will foster collaboration and engagement as a process in and of itself, and is important to ensure all voices heard such that your code of conduct represents the perspectives of your community. If a code of conduct already exists, and your community will be a long-acting collaboration, you might consider revising the code of conduct. Having your group 'sign off' on the code of conduct, whether revised or not, supports adoption of the principles.

-

-When creating a code of conduct, consider both the behaviors you want to encourage and those that will not be tolerated. For example, the Openscapes code of conduct includes Be respectful, honest, inclusive, accommodating, appreciative, and open to learning from everyone else. Do not attack, demean, disrupt, harass, or threaten others or encourage such behavior.

-

-Below are other example codes of conduct:

-

-- [NCEAS Code of Conduct](https://www.nceas.ucsb.edu/sites/default/files/2021-11/NCEAS_Code-of-Conduct_Nov2021_0.pdf)

-- [Carpentries Code of Conduct](https://docs.carpentries.org/topic_folders/policies/code-of-conduct.html)

-- [Arctic Data Center Code of Conduct](https://docs.google.com/document/d/1-eVjnwyLBAfg_f4DRIUVWnLeekgrzrz9wgbhnpOmuVE/edit)

-- [Mozilla Community Participation Guidelines](https://www.mozilla.org/en-US/about/governance/policies/participation/)

-- [Ecological Society of America Code of Conduct](https://www.esa.org/esa/code-of-conduct-for-esa-events/)

-

-

-## Authorship and Credit Policies

-

-

-

-

-Navigating issues of intellectual property and credit can be a challenge, particularly for early career researchers. Open communication is critical to avoiding misunderstandings and conflicts. Talk to your coauthors and collaborators about authorship, credit, and data sharing **early and often**. This is particularly important when working with new collaborators and across lab groups or disciplines which may have divergent views on authorship and data sharing. If you feel uncomfortable talking about issues surrounding credit or intellectual property, seek the advice or assistance of a mentor to support you in having these important conversations.

-

-The “Publication” section of the [Ecological Society of America’s Code of Ethics](https://www.esa.org/about/code-of-ethics/) is a useful starting point for discussions about co-authorship, as are the [International Committee of Medical Journal Editors guidelines](http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html) for authorship and contribution. You should also check guidelines published by the journal(s) to which you anticipate submitting your work.

-

-For collaborative research projects, develop an authorship agreement for your group early in the project and refer to it for each product. This example [authorship agreement](http://training.arcticdata.io/2020-10-arctic/files/template-authorship-policy-ADC-training.docx) from the Arctic Data Center provides a useful template. It builds from information contained within [Weltzin et al (2006)](https://core.ac.uk/download/pdf/215745938.pdf) and provides a rubric for inclusion of individuals as authors. Your collaborative team may not choose to adopt the agreement in the current form, however it will prompt thought and discussion in advance of developing a consensus. Some key questions to consider as you are working with your team to develop the agreement:

-

-- What roles do we anticipate contributors will play? e.g., the NISO [Contributor Roles Taxonomy (CRediT)](https://credit.niso.org/) identifies 14 distinct roles:

-

- - Conceptualization

- - Data curation

- - Formal Analysis

- - Funding acquisition

- - Investigation

- - Methodology

- - Project administration

- - Resources

- - Software

- - Supervision

- - Validation

- - Visualization

- - Writing – original draft

- - Writing – review & editing

-

-- What are our criteria for authorship? (See the [ICMJE guidelines](http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html) for potential criteria)

-- Will we extend the opportunity for authorship to all group members on every paper or product?

-- Do we want to have an opt in or opt out policy? (In an opt out policy, all group members are considered authors from the outset and must request removal from the paper if they don’t want think they meet the criteria for authorship)

-- Who has the authority to make decisions about authorship? Lead author? PI? Group?

-- How will we decide authorship order?

-- In what other ways will we acknowledge contributions and extend credit to collaborators?

-- How will we resolve conflicts if they arise?

-

-

-## Data Sharing and Reuse Policies

-

-As with authorship agreements, it is valuable to establish a shared agreement around handling of data when embarking on collaborative projects. Data collected as part of a funded research activity will typically have been managed as part of the Data Management Plan (DMP) associated with that project. However, collaborative research brings together data from across research projects with different data management plans and can include publicly accessible data from repositories where no management plan is available. For these reasons, a discussion and agreement around the handling of data brought into and resulting from the collaboration is warranted and management of this new data may benefit from going through a data management planning process. Below we discuss example data agreements.

-

-The example data policy [template](http://training.arcticdata.io/2020-10-arctic/files/template-data-policy-ADC-training.docx) provided by the Arctic Data Center addresses three categories of data.

-

-- Individual data not in the public domain

-- Individual data with public access

-- Derived data resulting from the project

-

-For the first category, the agreement considers conditions under which those data may be used and permissions associated with use. It also addresses access and sharing. In the case of individual, publicly accessible data, the agreement stipulates that the team will abide by the attribution and usage policies that the data were published under, noting how those requirements we met. In the case of derived data, the agreement reads similar to a DMP with consideration of making the data public; management, documentation and archiving; pre-publication sharing; and public sharing and attribution. As research data objects receive a persistent identifier (PID), often a DOI, there are citable objects and consideration should be given to authorship of data, as with articles.

-

-The following [example lab policy](https://github.com/temporalecologylab/labgit/blob/master/datacodemgmt/tempeco_DMP.pdf) from the [Wolkovich Lab](http://temporalecology.org/) combines data management practices with authorship guidelines and data sharing agreements. It provides a lot of detail about how this lab approaches data use, attribution and authorship. For example:

-

-#### Section 6: Co-authorship & data {- .aside}

-

-If you agree to take on existing data you cannot offer co-authorship for use of the data unless four criteria are met:

-

-- The co-author agrees to (and does) make substantial intellectual contribution to the work, which includes the reading and editing of all manuscripts on which you are a co-author through the submission-for-publication stage. This includes helping with interpretation of the data, system, study questions.

-- Agreement of co-authorship is made at the start of the project.

-- Agreement is approved of by Lizzie.

-- All data-sharers are given an equal opportunity at authorship. It is not allowed to offer or give authorship to one data-sharer unless all other data-sharers are offered an equal opportunity at authorship—this includes data that are publicly-available, meaning if you offer authorship to one data-sharer and were planning to use publicly-available data you must reach out to the owner of the publicly-available data and strongly offer equivalent authorship as offered to the other data-sharer. As an example, if five people share data freely with you for a meta-analysis and and a sixth wants authorship you either must strongly offer equivalent authorship to all five or deny authorship to the sixth person. Note that the above requirements must also be met in this situation. If one or more datasets are more central or critical to a paper to warrant selective authorship this must be discussed and approved by Lizzie (and has not, to date, occurred within the lab).

-

-#### {-}

-

-#### Policy Preview

-

-

-

-

-

-This policy is communicated with all incoming lab members, from undergraduate to postdocs and visiting scholars, and is shared here with permission from Dr Elizabeth Wolkovich.

-

-

-### Community Principles: CARE and FAIR

-

-The CARE and FAIR Principles were introduced previously in the context of introducing the Arctic Data Center and our data submission and documentation process. In this section we will dive a little deeper.

-

-To recap, the Arctic Data Center is an openly-accessible data repository and the data published through the repository is open for anyone to reuse, subject to conditions of the license (at the Arctic Data Center, data is released under one of two licenses: [CC-0 Public Domain](https://creativecommons.org/publicdomain/zero/1.0/) and [CC-By Attribution 4.0](https://creativecommons.org/licenses/by/4.0/)). In facilitating use of data resources, the data stewardship community have converged on principles surrounding best practices for open data management One set of these principles is the [FAIR principles](https://force11.org/info/guiding-principles-for-findable-accessible-interoperable-and-re-usable-data-publishing-version-b1-0/). FAIR stands for Findable, Accessible, Interoperable, and Reproducible.

-

-

-

-

-The “[Fostering FAIR Data Practices in Europe](https://zenodo.org/record/5837500#.Ygb7VFjMJ0t)” project found that it is more monetarily and timely expensive when FAIR principles are not used, and it was estimated that 10.2 billion dollars per years are spent through “storage and license costs to more qualitative costs related to the time spent by researchers on creation, collection and management of data, and the risks of research duplication.” FAIR principles and open science are overlapping concepts, but are distinctive concepts. Open science supports a culture of sharing research outputs and data, and FAIR focuses on how to prepare the data.

-

-

-

-

-Another set of community developed principles surrounding open data are the [CARE Principles](https://static1.squarespace.com/static/5d3799de845604000199cd24/t/5da9f4479ecab221ce848fb2/1571419335217/CARE+Principles_One+Pagers+FINAL_Oct_17_2019.pdf). The CARE principles for Indigenous Data Governance complement the more data-centric approach of the FAIR principles, introducing social responsibility to open data management practices. The CARE Principles stand for:

-

-- Collective Benefit - Data ecosystems shall be designed and function in ways that enable Indigenous Peoples to derive benefit from the data

-- Authority to Control - Indigenous Peoples’ rights and interests in Indigenous data must be recognised and their authority to control such data be empowered. Indigenous data governance enables Indigenous Peoples and governing bodies to determine how Indigenous Peoples, as well as Indigenous lands, territories, resources, knowledges and geographical indicators, are represented and identified within data.

-- Responsibility - Those working with Indigenous data have a responsibility to share how those data are used to support Indigenous Peoples’ self-determination and collective benefit. Accountability requires meaningful and openly available evidence of these efforts and the benefits accruing to Indigenous Peoples.

-- Ethics - Indigenous Peoples’ rights and wellbeing should be the primary concern at all stages of the data life cycle and across the data ecosystem.

-

-The CARE principles align with the FAIR principles by outlining guidelines for publishing data that is findable, accessible, interoperable, and reproducible while at the same time, accounts for Indigenous’ Peoples rights and interests. Initially designed to support Indigenous data sovereignty, CARE principles are now being adopted across domains, and many researchers argue they are relevant for both Indigenous Knowledge and data, as well as data from all disciplines (Carroll et al., 2021). These principles introduce a “game changing perspective” that encourages transparency in data ethics, and encourages data reuse that is purposeful and intentional that aligns with human well-being aligns with human well-being (Carroll et al., 2021).

-

-## Research Data Publishing Ethics

-

-For over 20 years, the [Committee on Publication Ethics (COPE)](https://publicationethics.org/) has provided trusted guidance on ethical practices for scholarly publishing. The COPE guidelines have been broadly adopted by academic publishers across disciplines, and represent a common approach to identify, classify, and adjudicate potential breaches of ethics in publication such as authorship conflicts, peer review manipulation, and falsified findings, among many other areas. Despite these guidelines, there has been a lack of ethics standards, guidelines, or recommendations for data publications, even while some groups have begun to evaluate and act upon reported issues in data publication ethics.

-

-

-

-To address this gap, the [Force 11 Working Group on Research Data Publishing Ethics](https://force11.org/groups/research-data-publishing-ethics/home/) was formed as a collaboration among research data professionals and the Committee on Publication Ethics (COPE) "to develop industry-leading guidance and recommended best practices to support repositories, journal publishers, and institutions in handling the ethical responsibilities associated with publishing research data." The group released the "Joint FORCE11 & COPE Research Data Publishing Ethics Working Group Recommendations" [@puebla_2021], which outlines recommendations for four categories of potential data ethics issues:

-

-

-

-- [Authorship and Contribution Conflicts](https://zenodo.org/record/5391293/files/Authorship%20%26%20Contributions_datapubethics.pdf?download=1)

- - Authorship omissions

- - Authorship ordering changes / conflicts

- - Institutional investigation of author finds misconduct

-

-- [Legal/regulatory restrictions](https://zenodo.org/record/5391293/files/Legal%20%26%20Regulatory%20Restrictions_datapubethics.pdf?download=1)

- - Copyright violation

- - Insufficient rights for deposit

- - Breaches of national privacy laws (GPDR, CCPA)

- - Breaches of biosafety and biosecurity protocols

- - Breaches of contract law governing data redistribution

-

-- [Risks of publication or release](https://zenodo.org/record/5391293/files/Risk_datapubethics.pdf?download=1)

- - Risks to human subjects

- - Lack of consent

- - Breaches of himan rights

- - Release of personally identifiable information (PII)

- - Risks to species, ecosystems, historical sites

- - Locations of endangered species or historical sites

- - Risks to communities or societies

- - Data harvested for profit or surveillance

- - Breaches of data sovereignty

-

-- [Rigor of published data](https://zenodo.org/record/5391293/files/Rigor_datapubethics.pdf?download=1)

- - Unintentional errors in collection, calculation, display

- - Un-interpretable data due to lack of adequate documentation

- - Errors of of study design and inference

- - Data manipulation or fabrication

-

-Guidelines cover what actions need to be taken, depending on whether the data are already published or not, as well as who should be involved in decisions, who should be notified of actions, and when the public should be notified. The group has also published templates for use by publishers and repositories to announce the extent to which they plan to conform to the data ethics guidelines.

-

-### Discussion: Data publishing policies {.unnumbered .exercise}

-

-At the Arctic Data Center, we need to develop policies and procedures governing how we react to potential breaches of data publication ethics. In this exercise, break into groups to provide advice on how the Arctic Data Center should respond to reports of data ethics issues, and whether we should adopt the Joint FORCE11 & COPE Research Data Publishing Ethics Working Group Policy Templates for repositories. In your discussion, consider:

-

-- Should the repository adopt the [repository policy templates](https://zenodo.org/record/6422102/files/Repository%20Policy%20Template%20v1.pdf?download=1) from Force11?

-- Who should be involved in evaluation of the merits of ethical cases reported to ADC?

-- Who should be involved in deciding the actions to take?

-- What are the range of responses that the repository should consider for ethical breaches?

-- Who should be notified when a determination has been made that a breach has occurred?

-

-You might consider a hypothetical scenario such as the following in considering your response.

-

-> The data coordinator at the Arctic Data Center receives an email in 2022 from a prior postdoctoral fellow who was employed as part of an NSF-funded project on microbial diversity in Alaskan tundra ecosystems. The email states that a dataset from 2014 in the Arctic Data Center was published with the project PI as author, but omits two people, the postdoc and an undergraduate student, as co-authors on the dataset. The PI retired in 2019, and the postdoc asks that they be added to the author list of the dataset to correct the historical record and provide credit.

-

-

-### {-}

-

-## Extra Reading

-

-- [Cheruvelil, K. S., Soranno, P. A., Weathers, K. C., Hanson, P. C., Goring, S. J., Filstrup, C. T., & Read, E. K. (2014). Creating and maintaining high-performing collaborative research teams: The importance of diversity and interpersonal skills. Frontiers in Ecology and the Environment, 12(1), 31-38. DOI: 10.1890/130001](https://esajournals.onlinelibrary.wiley.com/doi/epdf/10.1890/130001)

-- [Carroll, S. R., Garba, I., Figueroa-Rodríguez, O. L., Holbrook, J., Lovett, R., Materechera, S., … Hudson, M. (2020). The CARE Principles for Indigenous Data Governance. Data Science Journal, 19(1), 43. DOI: http://doi.org/10.5334/dsj-2020-043](http://doi.org/10.5334/dsj-2020-043)

diff --git a/materials/sections/collaboration-thinking-preferences-in-person.Rmd b/materials/sections/collaboration-thinking-preferences-in-person.Rmd

deleted file mode 100644

index a77f6696..00000000

--- a/materials/sections/collaboration-thinking-preferences-in-person.Rmd

+++ /dev/null

@@ -1,54 +0,0 @@

-

-## Thinking preferences

-

-### Learning Objectives

-

-An activity and discussion that will provide:

-

-- Opportunity to get to know fellow participants and trainers

-- An introduction to variation in thinking preferences

-

-### Thinking Preferences Activity

-

-Step 1:

-

-- Don't read ahead!! We're headed to the patio.

-

-

-### About the Whole Brain Thinking System

-

-Everyone thinks differently. The way individuals think guides the way they work, and the way groups of individuals think guides how teams work. Understanding thinking preferences facilitates effective collaboration and team work.

-

-The Whole Brain Model, developed by Ned Herrmann, builds upon early conceptualizations of brain functioning. For example, the left and right hemispheres were thought to be associated with different types of information processing while our neocortex and limbic system would regulate different functions and behaviours.

-

-

-

-#### {-}

-

-

-The Herrmann Brain Dominance Instrument (HBDI) provides insight into dominant characteristics based on thinking preferences. There are four major thinking styles that reflect the left cerebral, left limbic, right cerebral and right limbic.

-

-- Analytical (Blue)

-- Practical (Green)

-- Relational (Red)

-- Experimental (Yellow)

-

-

-

-These four thinking styles are characterized by different traits. Those in the BLUE quadrant have a strong logical and rational side. They analyze information and may be technical in their approach to problems. They are interested in the 'what' of a situation. Those in the GREEN quadrant have a strong organizational and sequential side. They like to plan details and are methodical in their approach. They are interested in the 'when' of a situation. The RED quadrant includes those that are feelings-based in their apporach. They have strong interpersonal skills and are good communicators. They are interested in the 'who' of a situation. Those in the YELLOW quadrant are ideas people. They are imaginative, conceptual thinkers that explore outside the box. Yellows are interested in the 'why' of a situation.

-

-

-

-Undertsanding how people think and process information helps us understand not only our own approach to problem solving, but also how individuals within a team can contribute. There is great value in diversity of thinking styles within collaborative teams, each type bringing stengths to different aspects of project development.

-

-

-

-

-Of course, most of us identify with thinking styles in more than one quadrant and these different thinking preferences reflect a complex self made up of our rational, theoretical self; our ordered, safekeeping self; our emotional, interpersonal self; and our imaginitive, experimental self.

-

-

-

-#### Bonus Activity: Your Complex Self

-

-Using the statements contrained within this [document](files/ThinkingPreferencesMapping.pdf), plot the quadrilateral representing your complex self.

-

diff --git a/materials/sections/collaboration-thinking-preferences-short.Rmd b/materials/sections/collaboration-thinking-preferences-short.Rmd

deleted file mode 100644

index ceb17e7b..00000000

--- a/materials/sections/collaboration-thinking-preferences-short.Rmd

+++ /dev/null

@@ -1,54 +0,0 @@

-

-## Thinking preferences

-

-### Learning Objectives

-

-An activity and discussion that will provide:

-

-- Opportunity to get to know fellow participants and trainers

-- An introduction to variation in thinking preferences

-

-### Thinking Preferences Activity

-

-Step 1:

-

-- Read through the statements contained within this [document](files/ThinkingPreferencesMapping.pdf) and determine which descriptors are most like you. Make a note of them.

-- Review the descriptors again and determine which are quite like you.

-- You are working towards identifying your top 20. If you have more than 20, discard the descriptors that resonate the least.

-- Using the letter codes in the right hand column, count the number of descriptors that fall into the categories A B C and D.

-

-Step 2: Scroll to the second page and copy the graphic onto a piece of paper, completing the quadrant with your scores for A, B, C and D.

-

-Step 3: Reflect and share out: Do you have a dominant letter? Were some of the statements you included in your top 20 easier to resonate with than others? Were you answering based on how you *are* or how you wish to be?

-

-

-### About the Whole Brain Thinking System

-

-Everyone thinks differently. The way individuals think guides the way they work, and the way groups of individuals think guides how teams work. Understanding thinking preferences facilitates effective collaboration and team work.

-

-The Whole Brain Model, developed by Ned Herrmann, builds upon early conceptualizations of brain functioning. For example, the left and right hemispheres were thought to be associated with different types of information processing while our neocortex and limbic system would regulate different functions and behaviours.

-

-

-

-The Herrmann Brain Dominance Instrument (HBDI) provides insight into dominant characteristics based on thinking preferences. There are four major thinking styles that reflect the left cerebral, left limbic, right cerebral and right limbic.

-

-- Analytical (Blue)

-- Practical (Green)

-- Relational (Red)

-- Experimental (Yellow)

-

-

-

-These four thinking styles are characterized by different traits. Those in the BLUE quadrant have a strong logical and rational side. They analyze information and may be technical in their approach to problems. They are interested in the 'what' of a situation. Those in the GREEN quadrant have a strong organizational and sequential side. They like to plan details and are methodical in their approach. They are interested in the 'when' of a situation. The RED quadrant includes those that are feelings-based in their apporach. They have strong interpersonal skills and are good communicators. They are interested in the 'who' of a situation. Those in the YELLOW quadrant are ideas people. They are imaginative, conceptual thinkers that explore outside the box. Yellows are interested in the 'why' of a situation.

-

-

-

-Most of us identify with thinking styles in more than one quadrant and these different thinking preferences reflect a complex self made up of our rational, theoretical self; our ordered, safekeeping self; our emotional, interpersonal self; and our imaginitive, experimental self.

-

-

-

-Undertsanding the complexity of how people think and process information helps us understand not only our own approach to problem solving, but also how individuals within a team can contribute. There is great value in diversity of thinking styles within collaborative teams, each type bringing stengths to different aspects of project development.

-

-

-

-

diff --git a/materials/sections/collaboration-thinking-preferences.Rmd b/materials/sections/collaboration-thinking-preferences.Rmd

deleted file mode 100644

index 1bcd8e1b..00000000

--- a/materials/sections/collaboration-thinking-preferences.Rmd

+++ /dev/null

@@ -1,57 +0,0 @@

-

-## Thinking preferences

-

-### Learning Objectives

-

-An activity and discussion that will provide:

-

-- Opportunity to get to know fellow participants and trainers

-- An introduction to variation in thinking preferences

-

-### Thinking Preferences Activity

-

-Step 1: Don't jump ahead in this document. (Did I just jinx it?)

-

-Step 2: Review the [list of statements here](files/HBDIStatements.pdf) and reflect on your traits. Do you learn through structured activities? Are you conscious of time and are punctual? Are you imaginative? Do you like to take risks?

-Determine the three statements that resonate most with you and record them. Note the symbol next to each of them.

-

-Step 3: Review the [symbol key here](files/HBDIkey.pdf) and assign a color to each of your three remaining statements. Which is your dominant color or are you a mix of three?

-

-Step 4: Using the zoom breakout room feature, move between the five breakout rooms and talk to other participants about their dominant color statements. Keep moving until you cluster into a group of 'like' dominant colors. If you are a mix of three colors, find other participants that are also a mix.

-

-Step 5: When the breakout rooms have reached stasis, each group should note the name and dominant color of your breakout room in slack.

-

-Step 6: Take a moment to reflect on one of the statements you selected and share with others in your group. Why do you identify strongly with this trait? Can you provide an example that illustrates this in your life?

-

-### About the Whole Brain Thinking System

-

-Everyone thinks differently. The way individuals think guides the way they work, and the way groups of individuals think guides how teams work. Understanding thinking preferences facilitates effective collaboration and team work.

-

-The Whole Brain Model, developed by Ned Herrmann, builds upon our understanding of brain functioning. For example, the left and right hemispheres are associated with different types of information processing and our neocortex and limbic system regulate different functions and behaviours.

-

-

-

-The Herrmann Brain Dominance Instrument (HBDI) provides insight into dominant characteristics based on thinking preferences. There are four major thinking styles that reflect the left cerebral, left limbic, right cerebral and right limbic.

-

-- Analytical (Blue)

-- Practical (Green)

-- Relational (Red)

-- Experimental (Yellow)

-

-

-

-These four thinking styles are characterized by different traits. Those in the BLUE quadrant have a strong logical and rational side. They analyze information and may be technical in their approach to problems. They are interested in the 'what' of a situation. Those in the GREEN quadrant have a strong organizational and sequential side. They like to plan details and are methodical in their approach. They are interested in the 'when' of a situation. The RED quadrant includes those that are feelings-based in their apporach. They have strong interpersonal skills and are good communicators. They are interested in the 'who' of a situation. Those in the YELLOW quadrant are ideas people. They are imaginative, conceptual thinkers that explore outside the box. Yellows are interested in the 'why' of a situation.

-

-

-

-Most of us identify with thinking styles in more than one quadrant and these different thinking preferences reflect a complex self made up of our rational, theoretical self; our ordered, safekeeping self; our emotional, interpersonal self; and our imaginitive, experimental self.

-

-

-

-Undertsanding the complexity of how people think and process information helps us understand not only our own approach to problem solving, but also how individuals within a team can contribute. There is great value in diversity of thinking styles within collaborative teams, each type bringing stengths to different aspects of project development.

-

-

-

-### Bonus Activity: Your Complex Self

-

-Using the statements contrained within this [document](files/ThinkingPreferencesMapping.pdf), plot the quadrilateral representing your complex self.

diff --git a/materials/sections/communication.Rmd b/materials/sections/communication.Rmd

deleted file mode 100644

index f71618d7..00000000

--- a/materials/sections/communication.Rmd

+++ /dev/null

@@ -1,115 +0,0 @@

-## Communication Principles and Practices

-

-*"The ingredients of good science are obvious—novelty of research topic, comprehensive coverage of the relevant literature, good data, good analysis including strong statistical support, and a thought-provoking discussion. The ingredients of good science reporting are obvious—good organization, the appropriate use of tables and figures, the right length, writing to the intended audience— do not ignore the obvious" - Bourne 2005*

-

-### Scholarly publications

-

-Peer-reviewed publication remains a primary mechanism for direct and efficient communication of research findings. Other scholarly communications include abstracts, technical reports, books and book chapters. These communications are largely directed towards students and peers; individuals learning about or engaged in the process of scientific research whether in a university, non-profit, agency, commercial or other setting. In this section we will focus less on peer-reviewed publications and more on messaging in general, whether for publications, reports, articles or social media. That said, the following tabling is a good summary of '10 Simple Rules' for writing research papers (adapted from [Zhang 2014](https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1003453), published in [Budden and Michener, 2017](https://link.springer.com/chapter/10.1007%2F978-3-319-59928-1_14))

-

-1. **Make it a Driving Force** "design a project with an ultimate paper firmly in mind”

-2. **Less Is More** "fewer but more significant papers serve both the research community and one’s career better than more papers of less significance”

-3. **Pick the Right Audience** “This is critical for determining the organization of the paper and the level of detail of the story, so as to write the paper with the audience in mind.”

-4. **Be Logical** “The foundation of ‘‘lively’’ writing for smooth reading is a sound and clear logic underlying the story of the paper.” “An effective tactic to help develop a sound logical flow is to imaginatively create a set of figures and tables, which will ultimately be developed from experimental results, and order them in a logical way based on the information flow through the experiments.”

-5. **Be Thorough and Make It Complete** Present the central underlying hypotheses; interpret the insights gleaned from figures and tables and discuss their implications; provide sufficient context so the paper is self-contained; provide explicit results so readers do not need to perform their own calculations; and include self-contained figures and tables that are described in clear legends

-6. **Be Concise** “the delivery of a message is more rigorous if the writing is precise and concise”

-7. **Be Artistic** “concentrate on spelling, grammar, usage, and a ‘‘lively’’ writing style that avoids successions of simple, boring, declarative sentences”

-8. **Be Your Own Judge** Review, revise and reiterate. “…put yourself completely in the shoes of a referee and scrutinize all the pieces—the significance of the work, the logic of the story, the correctness of the results and conclusions, the organization of the paper, and the presentation of the materials.”

-9. **Test the Water in Your Own Backyard** “…collect feedback and critiques from others, e.g., colleagues and collaborators.”

-10. **Build a Virtual Team of Collaborators** Treat reviewers as collaborators and respond objectively to their criticisms and recommendations. This may entail redoing research and thoroughly re-writing a paper.

-

-### Other communications

-

-Communicating your research outside of peer-reviewed journal articles is increasingly common, and important. These non academic communications can reach a more broad and diverse audience than traditional publications (and should be tailored for specific audience groups accordingly), are not subject to the same pay-walls as journal articles, and can augment and amplify scholarly publications. For example, this twitter announcement from Dr Heather Kropp, providing a synopsis of their synthesis publication in Environmental Research (note the reference to a repository where the data are located, though a DOI would be better).

-

-

-Whether this communication occurs through blogs, social media, or via interviews with others, developing practices to refine your messaging is critical for successful communication. One tool to support your communication practice is 'The Message Box' developed by COMPASS, an organization that helps scientists develop communications skills in order to share their knowledge and research across broad audiences without compromising the accuracy of their research.

-

-### The Message Box

-

-

-

-

-

-The [Message Box](https://www.compassscicomm.org/leadership-development/the-message-box/) is a tool that helps researchers take the information they hold about their research and communicate it in a way that resonates with the chosen audience. It can be used to help prepare for interviews with journalists or employers, plan for a presentation, outline a paper or lecture, prepare a grant proposal, or clearly, and with relevancy, communicate your work to others. While the message box *can* be used in all these ways, you must first identify the audience for your communication.

-

-The Message Box comprises five sections to help you sort and distill your knowledge in a way that will resonate with your (chosen) audience. How we communicate with other scientists (through scholarly publications) is not how the rest of the rest of the world typically communicates. In a scientific paper, we establish credibility in the introduction and methods, provide detailed data and results, and then share the significance of our work in the discussion and conclusions. But the rest of the world leads with the impact, the take home message. A quick glance of newspaper headlines demonstrates this.

-

-

-

-The five sections of the Message Box are provided below. For a detailed explanation of the sections and guidance on how to use the Message Box, work through the [Message Box Workbook](https://www.compassscicomm.org/wp-content/uploads/2020/05/The-Message-Box-Workbook.pdf)

-

-#### Message Box Sections

-

-**The Issue**

-

-The Issue section in the center of the box identifies and describes the overarching issue or topic that you’re addressing in broad terms. It’s the big-picture context of your work. This should be very concise and clear; no more than a short phrase. You might find you revisit the Issue after you’ve filled out your Message Box, to see if your thinking on the overarching topic has changed since you started.

-

-- Describes the overarching issue or topic: Big Picture

-- Broad enough to cover key points

-- Specific enough to set up what's to come

-- Concise and clear

-- 'Frames' the rest of the message box

-

-**The Problem**

-

-The Problem is the chunk of the broader issue that you’re addressing in your area of expertise. It’s your piece of the pie, reflecting your work and expert knowledge. Think about your research questions and what aspect of the specific problem you’re addressing would matter to your audience. The Problem is also where you set up the So What and describe the situation you see and want to address.

-

-- The part of the broader issue that your work is addressing

-- Builds upon your work and expert knowledge

-- Try to focus on one problem per audience

-- Often the ***Problem*** is your research question

-- This section sets you up for ***So What***

-

-**The So What**

-

-The crux of the Message Box, and the critical question the COMPASS team seeks to help scientists answer, is “So what?”

-Why should your audience care? What about your research or work is important for them to know? Why are you talking to them about it? The answer to this question may change from audience to audience, and you’ll want to be able to adjust based on their interests and needs. We like to use the analogy of putting a message through a prism that clarifies the importance to different audiences. Each audience will be interested in different facets of your work, and you want your message to reflect their interests and accommodate their needs. The prism below includes a spectrum of audiences you might want to reach, and some of the questions they might have about your work.

-

-- This is the crux of the message box

-- Why should you audience care?

-- What about your research is important for them to know?

-- Why are you talking to them about it?

-

-

-

-**The Solution**

-

-The Solution section outlines the options for solving the problem you identified. When presenting possible solutions, consider whether they are something your audience can influence or act upon. And remind yourself of your communication goals: Why are you communicating with this audience? What do you want to accomplish?

-

-- Outlines the options for solving the ***Problem ***

-- Can your audience influence or act upon this?

-- There may be multiple solutions

-- Make sure your ***Solution*** relates back to the ***Problem***. Edit one or both as needed

-

-**The Benefit**

-

-In the Benefit section, you list the benefits of addressing the Problem — all the good things that could happen if your Solution section is implemented. This ties into the So What of why your audience cares, but focuses on the positive results of taking action (the So What may be a negative thing — for example, inaction could lead to consequences that your audience cares about). If possible, it can be helpful to be specific here — concrete examples are more compelling than abstract. Who is likely to benefit, and where, and when?

-

-- What are the benefits of addressing the ***Problem***?

-- What good things come from implementing your ***Solution***?

-- Make sure it connects with your ***So What***

-- ***Benefits*** and ***So What*** may be similar

-

-**Finally**, to make you message more memorable you should:

-

-- Support your message with data

-- Limit the use of numbers and statistics

-- Use specific examples

-- Compare numbers to concepts, help people relate

-- Avoid jargon

-- Lead with what you know

-

-

-

-In addition to the [Message Box Workbook](https://www.compassscicomm.org/wp-content/uploads/2020/05/The-Message-Box-Workbook.pdf), COMPASS have resources on how to [increase the impact](https://www.compassscicomm.org/practice/) of your message (include important statistics, draw comparisons, reduce jargon, use examples), exercises for practicing and [refining](https://www.compassscicomm.org/compare/) your message and published [examples](https://www.compassscicomm.org/examples/).

-

-

-### Resources

-

-

-DataONE Webinar: Communication Strategies to Increase Your Impact from DataONE on Vimeo.

-

-- Budden, AE and Michener, W (2017) [Communicating and Disseminating Research Findings](https://link.springer.com/chapter/10.1007%2F978-3-319-59928-1_14). In: Ecological Informatics, 3rd Edition. Recknagel, F, Michener, W (eds.) Springer-Verlag

-- COMPASS [Core Principles of Science Communication](https://www.compassscicomm.org/core-principles-of-science-communication-2/)

-- [Example Message Boxes](https://www.compassscicomm.org/examples/)

-

diff --git a/materials/sections/data-ethics-eloka-2023.Rmd b/materials/sections/data-ethics-eloka-2023.Rmd

deleted file mode 100644

index d976306c..00000000

--- a/materials/sections/data-ethics-eloka-2023.Rmd

+++ /dev/null

@@ -1,228 +0,0 @@

-## Introduction

-

-This part of the course was developed with input from ELOKA and the NNA-CO, and is a work-in-progress. The training introduces ethics issues in a broad way and includes discussion of social science data and open science, but the majority of the section focuses on issues related to research with, by, and for Indigenous communities. We recognize that there is a need for more in-depth training and focus on open science for social scientists and others who are not engaging with Indigenous Knowledge holders and Indigenous communities, and hope to develop further resources in this area in the future. **Many of the data stewardship practices that have been identified as good practices through Indigenous Data Sovereignty framework development are also relevant for those working with Arctic communities that are not Indigenous, although the rights frameworks and collective ownership is specific to the Indigenous context.**

-

-The examples we include in this training are primarily drawn from the North American research context. In future trainings, we plan to expand and include examples from other Indigenous Arctic contexts. **We welcome suggestions and resources that would strengthen this training for audiences outside of North America.**

-

-We also recognize the importance of trainings on Indigenous data sovereignty and ethics that are being developed and facilitated by Indigenous organizations and facilitators. In this training we offer some introductory material but there is much more depth offered in IDS specific trainings.

-

-## Introduction to ELOKA

-

-The Exchange for Local Observations and Knowledge of the Arctic is an NSF funded project. ELOKA partners with Indigenous communities in the Arctic to create online products that facilitate the collection, preservation, exchange, and use of local observations and Indigenous Knowledge of the Arctic. ELOKA fosters collaboration between resident Arctic experts and visiting researchers, provides data management and user support, and develops digital tools for Indigenous Knowledge in collaboration with our partners. By working together, Arctic residents and researchers can make significant contributions to a deeper understanding of the Arctic and the social and environmental changes ongoing in the region.

-

-Arctic residents and Indigenous peoples have been increasingly involved in, and taking control of, research. Through Local and Indigenous Knowledge and community-based monitoring, Arctic communities have made, and continue to make, significant contributions to understanding recent Arctic change. In ELOKA's work, we subscribe to ideas of information and data sovereignty, in that we want our projects to be community-driven with communities having control over how their data, information, and knowledge are shared in an ethical manner.

-

-A key challenge of Local and Indigenous Knowledge research and community-based monitoring to date is having an effective and appropriate means of recording, storing, representing, and managing data and information in an ethical manner. Another challenge is to find an effective means of making such data and information available to Arctic residents and researchers, as well as other interested groups such as teachers, students, and decision-makers. Without a network and data management system to support Indigenous Knowledge and community-based research, a number of problems have arisen, such as misplacement or loss of extremely precious data, information, and stories from Elders who have passed away, a lack of awareness of previous studies causing repetition of research and wasted resources occurring in the same communities, and a reluctance or inability to initiate or maintain community-based research without an available data management system. Thus, there is an urgent need for effective and appropriate means of recording, preserving, and sharing the information collected in Arctic communities. ELOKA aims to fill this gap by partnering with Indigenous communities to ensure their knowledge and data are stored in an ethical way, thus ensuring sovereignty over these valuable sources of information.

-

-ELOKA's overarching philosophy is that Local and Indigenous Knowledge and scientific expertise are complementary and reinforcing ways of understanding the Arctic system. Collecting, documenting, preserving, and sharing knowledge is a cooperative endeavor, and ELOKA is dedicated to fostering that shared knowledge between Arctic residents, scientists, educators, policy makers, and the general public. ELOKA operates on the principle that all knowledge should be treated ethically, and intellectual property rights should be respected.

-

-ELOKA is a service available for research projects, communities, organizations, schools, and individuals who need help storing, protecting, and sharing Local and Indigenous Knowledge. ELOKA works with many different types of data and information, including:

-

-- Written interview transcripts

-

-- Audio or video tapes and files

-

-- Photographs, artwork, illustrations, and maps

-

-- Digital geographic information such as GPS tracks, and data created using Geographic Information Systems

-

-- Quantitative data such as temperature, snow thickness, wind data, etc.

-

-- Many other types of Indigenous Knowledge and local observations, including place names

-

-ELOKA collaborates with other organizations engaged in addressing data management issues for community-based research. Together, we are working to build a community that facilitates international knowledge exchange, development of resources, and collaboration focused on local communities and stewardship of their data, information, and knowledge.

-

-## Working With Arctic Communities

-

-Arctic communities (defined as a place and the people who live there, based on geographic location in the Arctic/sub-Arctic) are involved in research in diverse ways - as hosts to visiting or non-local researchers, as well as "home" to community researchers who are leading or collaborating on research projects. **Over the past decades, community voices of discontent with standard research practices that are often exclusive and perpetuate inequities have grown stronger**. The Arctic research community (defined more broadly as the range of institutions, organizations, researchers and local communities involved in research) is in the midst of a complex conversation about equity in research aimed at transforming research practice to make it more equitable and inclusive.

-

-**One of the drivers of community concerns is the colonial practice of extracting knowledge from a place or group of people without respect for local norms of relationship with people and place, and without an ethical commitment to sharing and making benefits of knowledge accessible and accountable to that place.** Such approaches to knowledge and data extraction follow hundreds of years of exploration and research that viewed science as a tool of "Enlightenment" yet focused exclusively on benefits to White, European (or "southern" from an Arctic community perspective) researchers and scientists. This prioritization of non-local perspectives and needs (to Arctic communities) continues in Arctic research.

-

-**One result of this approach to research has been a lack of access for Arctic residents to the data and knowledge that have resulted from research conducted in their own communities.** Much of this data was stored in the personal files or hard drives of researchers, or in archives located in urban centers far from the Arctic.

-

-## Indigenous Data Governance and Sovereignty

-

-

-

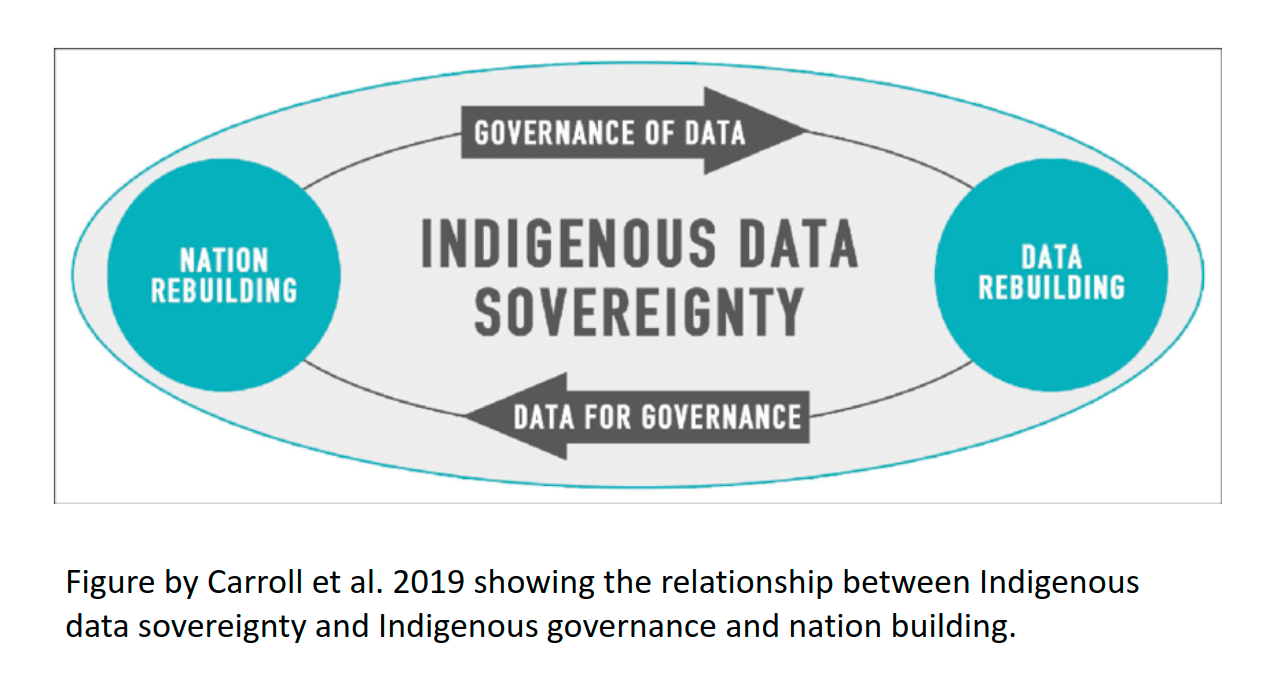

-**All governing entities, whether national, state, local, or tribal, need access to good, current, relevant data in order to make policy, planning, and programmatic decisions.** Indigenous nations and organizations have had to push for data about their peoples and communities to be collected and shared in ethical and culturally appropriate ways, and they have also had to fight for resources and capacity to develop and lead their own research programs.

-

-#### **Indigenous Data Definitions**

-

-**Indigenous data sovereignty** "...refers to the right of Indigenous peoples to govern the collection, ownership, and application of data about Indigenous communities, peoples, lands, and resources (Rainie et al. 2019). These governance rights apply "regardless of where/by whom data is held (Rainie et al. 2019).

-

-Some Indigenous individuals and communities have expressed dissatisfaction with the term "data" as being too narrowly focused and abstract to represent the embedded and holistic nature of knowledge in Indigenous communities. **Knowledge sovereignty** is a related term that has a similar meaning but is framed more broadly, and has been defined as:

-

-"Tribal communities having control over the documentation and production of knowledge (such as through research activities) which relate to Alaska Native people and the resources they steward and depend on" (Kawerak 2021).

-

-**Indigenous data** is "data in a wide variety of formats inclusive of digital data and data as knowledge and information. It encompasses data, information, and knowledge about Indigenous individuals, collectives, entities, lifeways, cultures, lands, and resources." (Rainie et al. 2019)

-

-**Indigenous data governance** is "The entitlement to determine how Indigenous data is governed and stewarded" (Rainie et al. 2019)

-

-## CARE Principles

-

-In facilitating use of data resources, the data stewardship community have converged on principles surrounding best practices for open data management One set of these principles is the FAIR principles. FAIR stands for Findable, Accessible, Interoperable, and Reproducible.

-

-The FAIR (Findable, Accessible, Interoperable, Reproducible) principles for data management are widely known and broadly endorsed.

-

-FAIR principles and open science are overlapping concepts, but are distinctive concepts. Open science supports a culture of sharing research outputs and data, and FAIR focuses on how to prepare the data. **The FAIR principles place emphasis on machine readability, "distinct from peer initiatives that focus on the human scholar" (Wilkinson et al 2016) and as such, do not fully engage with sensitive data considerations and with Indigenous rights and interests (Research Data Alliance International Indigenous Data Sovereignty Interest Group, 2019)**. Research has historically perpetuated colonialism and represented extractive practices, meaning that the research results were not mutually beneficial. These issues also related to how data was owned, shared, and used.

-

-**To address issues like these, the Global Indigenous Data Alliance (GIDA) introduced CARE Principles for Indigenous Data Governance to support Indigenous data sovereignty**. To many, the FAIR and CARE principles are viewed by many as complementary: CARE aligns with FAIR by outlining guidelines for publishing data that contributes to open-science and at the same time, accounts for Indigenous' Peoples rights and interests. CARE Principles for Indigenous Data Governance stand for Collective Benefit, Authority to Control, Responsibility, Ethics. The CARE principles for Indigenous Data Governance complement the more data-centric approach of the FAIR principles, introducing social responsibility to open data management practices. These principles ask researchers to put human well-being at the forefront of open-science and data sharing (Carroll et al., 2021; Research Data Alliance International Indigenous Data Sovereignty Interest Group, September 2019).

-

-Indigenous data sovereignty and considerations related to working with Indigenous communities are particularly relevant to the Arctic. The CARE Principles stand for:

-

-- **Collective Benefit** - Data ecosystems shall be designed and function in ways that enable Indigenous Peoples to derive benefit from the data for:

-

- - Inclusive development/innovation

-

- - Improved governance and citizen engagement

-

- - Equitable outcomes

-

-- **Authority to Control** - Indigenous Peoples' rights and interests in Indigenous data must be recognised and their authority to control such data be empowered. Indigenous data governance enables Indigenous Peoples and governing bodies to determine how Indigenous Peoples, as well as Indigenous lands, territories, resources, knowledges and geographical indicators, are represented and identified within data.

-

- - Recognizing Indigenous rights (individual and collective) and interests

-

- - Data for governance

-

- - Governance of data

-

-- **Responsibility** - Those working with Indigenous data have a responsibility to share how those data are used to support Indigenous Peoples' self-determination and collective benefit. Accountability requires meaningful and openly available evidence of these efforts and the benefits accruing to Indigenous Peoples.

-

- - For positive relationships

-

- - For expanding capability and capacity (enhancing digital literacy and digital infrastructure)

-

- - For Indigenous languages and worldviews (sharing data in Indigenous languages)

-

-- **Ethics** - Indigenous Peoples' rights and wellbeing should be the primary concern at all stages of the data life cycle and across the data ecosystem.

-

- - Minimizing harm/maximizing benefit - not using a "deficit lens" that conceives of and portrays Indigenous communities as dysfunctional, lacking solutions, and in need of intervention. For researchers, adopting a deficit lens can lead to collection of only a subset of data while excluding other data and information that might identify solutions, innovations, and sources of resilience from within Indigenous communities. For policy makers, a deficit lens can lead to harmful interventions framed as "helping."

-

- - For justice - addressing power imbalances and equity

-

- - For future use - acknowledging potential future use/future harm. Metadata should acknowledge provenance and purpose and any limitations in secondary use inclusive of issues of consent.

-

-

-

-**Sharing sensitive data introduces unique ethical considerations, and FAIR and CARE principles speak to this by recommending sharing anonymized metadata to encourage discover ability and reduce duplicate research efforts, following consent of rights holders (Puebla & Lowenberg, 2021)**. While initially designed to support Indigenous data sovereignty, CARE principles are being adopted more broadly and researchers argue they are relevant across all disciplines (Carroll et al., 2021). As such, these principles introduce a "game changing perspective" for all researchers that encourages transparency in data ethics, and encourages data reuse that is both purposeful and intentional and that aligns with human well-being (Carroll et al., 2021). Hence, to enable the research community to articulate and document the degree of data sensitivity, and ethical research practices, the Arctic Data Center has introduced new submission requirements.

-

-\

-\

-

-### Discussion Questions:

-

-1. Do any of the practices of your data management workflow reflect the CARE Principles, or incorporate aspects of them?

-

-\

-

-### Examples from ELOKA

-

-#### Nunaput Atlas

-

-

-

-\

-

-Nunaput translates to "our land" in Cup'ik, the Indigenous language of Chevak, Alaska. Chevak or Cev'aq means "cut through channel" and refers to the creation of a short cut between two rivers. Chevak is a Cup'ik community, distinct from the Yup'ik communities that surround it, located in the Yukon-Kuskokwim Delta region of Western Alaska.

-

-The Nunaput Atlas is a community driven, interactive, online atlas for the Chevak Traditional Council and Chevak community members to create a record of observations, knowledge, and share stories about their land. The Nunaput Atlas is being developed in collaboration with the Exchange for Local Observations and Knowledge of the Arctic (ELOKA) and the U.S. Geological Survey (USGS). The community of Chevak has been involved in a number of community-based monitoring and research projects with the USGS and the Yukon River Inter-Tribal Watershed Council (YRITWC) over the years. The monitoring data collected by the Chevak Traditional Council's Environmental department as well as results from research projects are also presented in this atlas.

-

-All atlases are created uniquely and data ethics issues and privacy are presented. There is no standard template for user agreements that all atlases adopt: each user agreement is designed by the community, and it isdesigned specific to their needs. Nunaput Atlas has a public view, but is primarily used on the private, password protected side.

-

-#### Yup'ik Atlas

-

-

-

-Yup'ik Atlas is a great example of the data owners and community wanting the data to be available and public. Part of Indigenous governance and data governance is about having good data, to enable decision making. The Yup'ik atlas has many aspects and uses, and one of the primary uses is for it to be integrated into the regional curriculum to engage youth.

-

-#### AAOKH

-

-

-

-The Alaska Arctic Observatory and Knowledge Hub is a great example of the continual correspondence and communication between researchers and community members/knowledge holders once the data is collected. Specifically, AAOKH has dedicated a lot of time and in person meetings to creating a data citation that best reflects what the community members want.

-

-\

-

-### Final Questions

-

-1. Do CARE Principles apply to your research? Why or why not?

-

-2. Are there any limitations or barriers to adopting CARE Principles?

-

-### Data Ethics Resources

-

-**Trainings:**

-

-Fundamentals of OCAP (online training - for working with First Nations in Canada):

-

-Native Nations Institute trainings on Indigenous Data Sovereignty and Indigenous Data Governance:

-

-The [Alaska Indigenous Research Program](https://anthc.org/alaska-indigenous-research-program/), is a collaboration between the Alaska Native Tribal Health Consortium (ANTHC) and Alaska Pacific University (APU) to increase capacity for conducting culturally responsive and respectful health research that addresses the unique settings and health needs of Alaska Native and American Indian People. The 2022 program runs for three weeks (May 2 - May 20), with specific topics covered each week. Week two (Research Ethics) may be of particular interest. Registration is free.

-

-The r-ETHICS training (Ethics Training for Health in Indigenous Communities Study) is starting to become an acceptable, recognizable CITI addition for IRB training by tribal entities.

-

-Kawerak, Inc and First Alaskans Institute have offered trainings in research ethics and Indigenous Data Sovereignty. Keep an eye out for further opportunities from these Alaska-based organizations.

-

-**On open science and ethics:**

-

-

-

-ON-MERRIT recommendations for maximizing equity in open and responsible research

-

-

-

-

-

-**Arctic social science and data management:**

-

-Arctic Horizons report: Anderson, S., Strawhacker, C., Presnall, A., et al. (2018). Arctic Horizons: Final Report. Washington D.C.: Jefferson Institute.

-

-Arctic Data Center workshop report:

-

-**Arctic Indigenous research and knowledge sovereignty frameworks, strategies and reports:**

-

-Kawerak, Inc. (2021) [Knowledge & Research Sovereignty Workshop](https://kawerak.org/download/kawerak-knowledge-and-research-sovereignty-ksi-workshop-report/) May 18-21, 2021 Workshop Report. Prepared by Sandhill.Culture. Craft and Kawerak Inc. Social Science Program. Nome, Alaska.

-

-Inuit Circumpolar Council. 2021. Ethical and Equitable Engagement Synthesis Report: A collection of Inuit rules, guidelines, protocols, and values for the engagement of Inuit Communities and Indigenous Knowledge from Across Inuit Nunaat. Synthesis Report. International.

-

-Inuit Tapiriit Kanatami. 2018. National Inuit Strategy on Research. Accessed at:

-

-**Indigenous Data Governance and Sovereignty:**

-

-McBride, K. [Data Resources and Challenges for First Nations Communities](https://www.afnigc.ca/main/includes/media/pdf/digital%20reports/Data_Resources_Report.pdf). Document Review and Position Paper. Prepared for the Alberta First Nations Information Governance Centre.

-

-Carroll, S.R., Garba, I., Figueroa-Rodríguez, O.L., Holbrook, J., Lovett, R., Materechera, S., Parsons, M., Raseroka, K., Rodriguez-Lonebear, D., Rowe, R., Sara, R., Walker, J.D., Anderson, J. and Hudson, M., 2020. The CARE Principles for Indigenous Data Governance. Data Science Journal, 19(1), p.43. DOI:

-