Bug description

Steps/Code to reproduce bug

I ran this example from Merlin Models, up to and including the cell where I train the model:

model.compile(run_eagerly=False, optimizer='adam', loss="categorical_crossentropy")

model.fit(train_set_processed, batch_size=64, epochs=5, pre=mm.SequenceMaskRandom(schema=seq_schema, target=target, masking_prob=0.3, transformer=transform

I then attempted to export the ensemble for inference on Triton using the following code:

from merlin.systems.dag.ops.tensorflow import PredictTensorflow

from merlin.systems.dag.ensemble import Ensemble

from merlin.systems.dag.ops.workflow import TransformWorkflow

inf_ops = wf.input_schema.column_names >> TransformWorkflow(wf) >> PredictTensorflow(model)

ensemble = Ensemble(inf_ops, wf.input_schema)

ensemble.export('/workspace/models_for_benchmarking');

Expected behavior

`ensemble.export` completes without raising an error and the ensemble is written to the target directory.

Environment details

I am running the 22.12 tensorflow container with all repos updated to current main.

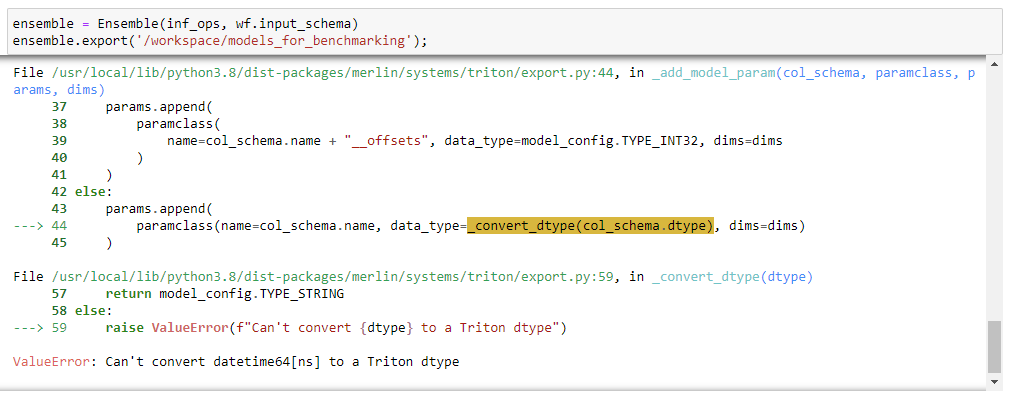

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[24], line 8

5 inf_ops = wf.input_schema.column_names >> TransformWorkflow(wf) >> PredictTensorflow(model)

7 ensemble = Ensemble(inf_ops, wf.input_schema)

----> 8 ensemble.export('/workspace/models_for_benchmarking');

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/ensemble.py:153, in Ensemble.export(self, export_path, runtime, **kwargs)

148 """

149 Write out an ensemble model configuration directory. The exported

150 ensemble is designed for use with Triton Inference Server.

151 """

152 runtime = runtime or TritonExecutorRuntime()

--> 153 return runtime.export(self, export_path, **kwargs)

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/runtimes/triton/runtime.py:133, in TritonExecutorRuntime.export(self, ensemble, path, version, name)

131 node_id = node_id_table.get(node, None)

132 if node_id is not None:

--> 133 node_config = node.export(path, node_id=node_id, version=version)

134 if node_config is not None:

135 node_configs.append(node_config)

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/node.py:50, in InferenceNode.export(self, output_path, node_id, version)

27 def export(

28 self,

29 output_path: Union[str, os.PathLike],

30 node_id: int = None,

31 version: int = 1,

32 ):

33 """

34 Export a Triton config directory for this node.

35

(...)

48 Triton model config corresponding to this node.

49 """

---> 50 return self.op.export(

51 output_path,

52 self.input_schema,

53 self.output_schema,

54 node_id=node_id,

55 version=version,

56 )

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/runtimes/triton/ops/workflow.py:170, in TransformWorkflowTriton.export(self, path, input_schema, output_schema, params, node_id, version)

167 node_export_path = pathlib.Path(path) / node_name

168 node_export_path.mkdir(parents=True, exist_ok=True)

--> 170 backend_model_config = _generate_nvtabular_model(

171 modified_workflow,

172 node_name,

173 node_export_path,

174 sparse_max=self.op.sparse_max,

175 max_batch_size=self.op.max_batch_size,

176 cats=self.op.cats,

177 conts=self.op.conts,

178 )

180 return backend_model_config

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/runtimes/triton/ops/workflow.py:206, in _generate_nvtabular_model(workflow, name, output_path, version, output_model, max_batch_size, sparse_max, backend, cats, conts)

195 """converts a workflow to a triton mode

196 Parameters

197 ----------

(...)

203 Names of the continuous columns

204 """

205 workflow.save(os.path.join(output_path, str(version), "workflow"))

--> 206 config = _generate_nvtabular_config(

207 workflow,

208 name,

209 output_path,

210 output_model,

211 max_batch_size,

212 sparse_max=sparse_max,

213 backend=backend,

214 cats=cats,

215 conts=conts,

216 )

218 # copy the model file over. note that this isn't necessary with the c++ backend, but

219 # does provide us to use the python backend with just changing the 'backend' parameter

220 with importlib.resources.path(

221 "merlin.systems.triton.models", "workflow_model.py"

222 ) as workflow_model:

File /usr/local/lib/python3.8/dist-packages/merlin/systems/dag/runtimes/triton/ops/workflow.py:289, in _generate_nvtabular_config(workflow, name, output_path, output_model, max_batch_size, sparse_max, backend, cats, conts)

287 else:

288 for col_name, col_schema in workflow.input_schema.column_schemas.items():

--> 289 _add_model_param(col_schema, model_config.ModelInput, config.input)

291 for col_name, col_schema in workflow.output_schema.column_schemas.items():

292 if sparse_max and col_name in sparse_max.keys():

293 # this assumes max_sequence_length is equal for all output columns

File /usr/local/lib/python3.8/dist-packages/merlin/systems/triton/export.py:44, in _add_model_param(col_schema, paramclass, params, dims)

37 params.append(

38 paramclass(

39 name=col_schema.name + "__offsets", data_type=model_config.TYPE_INT32, dims=dims

40 )

41 )

42 else:

43 params.append(

---> 44 paramclass(name=col_schema.name, data_type=_convert_dtype(col_schema.dtype), dims=dims)

45 )

File /usr/local/lib/python3.8/dist-packages/merlin/systems/triton/export.py:59, in _convert_dtype(dtype)

57 return model_config.TYPE_STRING

58 else:

---> 59 raise ValueError(f"Can't convert {dtype} to a Triton dtype")

ValueError: Can't convert datetime64[ns] to a Triton dtype