-

Notifications

You must be signed in to change notification settings - Fork 77

Home

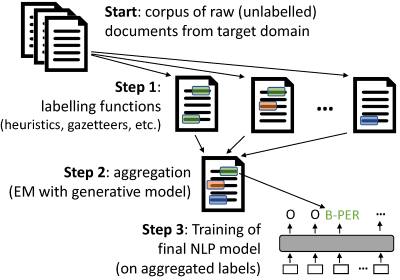

skweak a versatile, Python-based software toolkit enabling NLP developers to apply weak supervision to a wide range of tasks, and in particular sequence labelling and text classification. Instead of labelling data points by hand, we define labelling functions to automatically annotate text documents from the target domain. The results of those labelling functions are then aggregated into one single annotation layer using a generative model.

As shown above, weak supervision with skweak is divided in several steps:

-

Start: We must first prepare the(unlabelled) corpus onto which the labelling functions will be applied.

skweakis build on top of SpaCy, and operates with SpacyDocobjects, so you first need to convert your documents toDocobjects withspacy. - Step 1: We then define a range of labelling functions that will take those documents and annotate spans with labels. Those labelling functions can take a variety of forms, from handcrafted heuristics to machine learning models, gazetteers, etc.

-

Step 2: Once the labelling functions have been applied to your corpus, we aggregate their results in order to obtain a single, probabilistic annotation (instead of the multiple, possibly conflicting annotations from the labelling functions). This is done in

skweakusing a generative model that automatically estimates the relative accuracy and possible confuctions of each labelling function. - Step 3: Finally, based on those aggregated labels, we train our final model. Step 2 gives us a labelled corpus that (probabilistically) aggregates the outputs of all labelling functions, and you can use this labelled data to estimate any kind of machine learning model.

skweak expects all documents to be represented as SpaCy Doc. SpaCy documents are already tokenised, and often comes with a range of additional linguistic features such as POS tags, lemma, dependency relations etc. which may be useful when defining labelling functions.

If you are not already familiar with SpaCy, here is a short example:

import spacy

nlp = spacy.load("en_core_web_md") # We load an English-language model

doc = nlp("This is a just a test").See Spacy: Model for more information on available language packages. Note that:

- If your language is not yet supported by SpaCy, you can use the multi-language model, which offers a decent tokenisation)

- If you have a large number of documents, it is advised to run

docs = list(nlp.pipe(docs))instead of calling on each document invividually, within a loop.