-

Notifications

You must be signed in to change notification settings - Fork 7

Description

Target

- Given (orig, destination, timestamp, …), calculate estimate travel time.

- We want to use user's prob data to generate ETA

- We want the system could represent the liveness of our GPS probs

- The scenario is, after several user experience traffic delay or road closure, the ETA we generate could represent such situation and we will recover back after receiving normal user prob data.

- German Artist cheats GOOGLE MAPS AI Algorithm by... 99 SMARTPHONES!

- We want the API could also generate reasonable ETA for

legsof a entire route. In case your routing engine calculate a un-compatible route with ETA model, allow user to input a route(or key information of the route) is a nice feature.

Links

- Draft design for data pipeline:Streaming experiment for ETA service #357 (comment)

- Draft design for training and prediction: Machine Learning Experiment for ETA service #356 (comment)

- Discussions Q&A

Update on 06232020

- ETA service could generates acceptable ETA for specific user's

routine route(daily commute) - ETA service could generate acceptable ETA for generic requests

- Besides ETA, we could learn navigation related pattern from data, such as

reason for deviation - The entire solution should accept generic data input(gps traces) and mainly based on open source stack

Important topics

Data cleaning and visualization

Understanding input is the most important thing, I would say 80% percent of work is in data cleaning and new feature generating based on existing data.

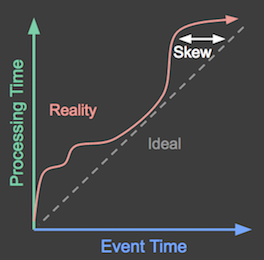

Event driven

Using streaming architecture and proved toolset like Apache Beam/Apache Flink is the key to approach low latency.

Before we abstract reasonable events, we could use batch data and pub/sub or Apache Kafka to simulate streaming data. And calculation engine line Apache Beam or Apache Flink could help generate unique logic for both batch calculation and streaming calculation.

ETA modle

ETA calculation as blackbox, we want the system could answer the following questions:

- As a specific user, whether there is a delay for usual trip, for how long

- As a generic user, what's the eta for given orig/dest/timestamp input

we don't use the way take machine learning to optimize ETA calculation parameters(such as theluafiles in osrm).

Our CEO discussed with CodeBear about founding a firm as ETA supplier for our current company.

CEO: CodeBear, this ETA feature is a fantastic feature. Its like we copy the power of Time Gem from Doctor Stange, we are able to ansower all question related with time and help people to make accurate plans, tens of thousands of our users will benefit from it. This is an opportunity worth millions of dollars.

CodeBear: Yeah, sounds great. What do you want me to do?

CEO: You will have gps probs from our customers, what I expect is three things: one, I always drive car from home to office at morning time, you could tell me weather there is a delay compare to usual situation. Two, if there is a delay, for how long. Three, as a generic user, you could tell travel time for given (orig location, destination location, timestamp, …).

CodeBear: Copy that. We could building event driven systems based on user's prob data and rely on machine learning to calculate ETA

CEO: Any technical challenge? What's the minimum visible product?

CodeBear: I could generate a fake API for the start. Then I will try to map matching user's gps log into map edges, it will have complicate situation since then, you know that because this gps log might have kinds of issues and not compatible with our map source, map matching's result might have big difference with actual route… besides, we could use OSRM to calculate route, but his cost model might need to be adjusted…O, we have live traffic from venders, they are the key element for accurate ETA, but integrate it into pipeline need take a while… Our architect Peter always mentions the influence of Weather, that one shouldn't be forget…

CEO: That sounds a long time…Can you have something smaller, maybe not accurate at first… What if you know nothing about navigation, what will you do?

CodeBear: Let me think… May be I will start something simple. For the initial version, just consider less attributes such as start location and end location from users' input data, and do estimation based on that, but I need to have a evaluation strategy, to tell me how bad my estimation result is. Then for the upper topics, I could treat them as new features, with the help of evaluation strategy, during development I could see the feedback and you could see improvements each round…

CEO: Sounds ok…..

(Fiction DIALOGUE :) )

Evaluation strategy

There are tons of machine learning models, and each of them need twist based on input and problem scope, but no matter which one to use, we have root truth of user's real driving duration to evaluate estimation result.

We could generate rmse for each round to represent estimation result. Other value like margin or absolute value could also helps.

And, we should build a way to rank the importance of features, such as

| feature name | importance | rmse_wo_feature |

|---|---|---|

| osrm_distance | 777 | 0.387 |

| pickup_region | 774 | 0.386 |

| avg_speed | 773 | 0.385 |

Cluster data

My friend and master Shibo lives at Albuquerque in NM for years. During my travel there, I mentioned I want to take a quick visit before next arrangement at Mister Car Wash, which is a scene from Breaking Bad, but I don't know whether time is appropriate. He take a look at his watch, "well, its 11:15am at Wednesday morning, from here, near city center, to eastern area probably takes 15 minutes for one way." This ETA is exactly the same as my road test… See, local people could tell accurate ETA just based on start region(no need exactly location, destination region, time and experience he could give you reasonable suggestion.

I think either using spatial index(Google S2, Uber H3) or use Kmeans, could help the learning system improve prediction. But whether this helps or which one is better, should be tell by value from Evaluation System

Route feature from GPS trace

gps trace-> map matching -> list of connected edges -> generate osrm route response -> route based features(legs, distance, turns, etc)

The result could joined with other data based on a unique ID. Again, which feature is needed should be told by data not experience.

Before we have the complex version, we could skip map matching and directly calculate route for given start/end point.

Machine Learning Model

I am not an expert of this part, and I believe understanding raw data and feature engineering is the key to the accuracy.

For the first step, I just want the system have a feedback and later we could upgrade this component.

Will give a try with XGBoost model with PCA. We also have colleague have experience with Learning to Estimate the Travel Time, could put which into next step.

Experiments

Kernel to estimate duration based on public prob data

The more data content a machine learning system has, the better result it could generated. We believe it is not the algorithm itself to win business in the future, but the uniqueness of data.

#356

Streaming system

Let's say we have about 1GB's user prob data as csv. We load which into distribute file system and then export them to pub/sub system with unique record id be generated.

For each record, will go though several micro services to generate new features

- OSRM feature from GPS trace

- Spatial index for gps trace

- attach weather

- etc...

Each of them will be calculate separately and pub result intopub/subsystem. Thewatermarkvalue during running time could gave a sense of the delay of events.

For the first step, we could put all data into pub/sub system and simulate a stream of fresh data.

#357

Experiment with cloud provider

We will choose one cloud provider for all the work.

Currently, our company have more experience with aws, personally I tried gcp services like datapipeline/dataflow/datastudio/pub/sub/bigquery.

If we go with docker/kubernetes way, then we have less dependency for cloud provider but probably there will be more work for env setup and debug and internal information visualization.

For experiment, will choose most easiest way to go, will consult others and probably go with docker/kubernetes for next.

Experiment internal user prob data

Important criteria

- correctness

- delay

- cost

More info

- ETA Phone Home: How Uber Engineers an Efficient Route

- Beyond L2 Loss — How we experiment with loss functions at Lyft

- 深度学习在美团配送ETA预估中的探索与实践

- Uber Tech Day: What's My ETA? The Billion Dollar Question

- Learning to Estimate the Travel Time

- Analyzing Experiment Outcomes: Beyond Average Treatment Effects

- Travel-Time Prediction With Support Vector Regression

- Predictive Analytics for Enhancing Travel Time Estimation in Navigation Apps of Apple, Google, and Microsoft

- Uber's Data Infra

- Lyft's Data Infra

- Netflix's Data Infra

- Google's Infra Stack

- ETA in Grab