Releases: apache/druid

druid-0.22.1

Apache Druid 0.22.1 is a bug fix release that fixes some security issues. See the complete set of changes for additional details.

# Bug fixes

#12051 Update log4j to 2.15.0 to address CVE-2021-44228

#11787 JsonConfigurator no longer logs sensitive properties

#11786 Update axios to 0.21.4 to address CVE-2021-3749

#11844 Update netty4 to 4.1.68 to address CVE-2021-37136 and CVE-2021-37137

# Credits

Thanks to everyone who contributed to this release!

@abhishekagarwal87

@andreacyc

@clintropolis

@gianm

@jihoonson

@kfaraz

@xvrl

druid-0.22.0

Apache Druid 0.22.0 contains over 400 new features, bug fixes, performance enhancements, documentation improvements, and additional test coverage from 73 contributors. See the complete set of changes for additional details.

# New features

# Query engine

# Support for multiple distinct aggregators in same query

Druid can now compute multiple exact DISTINCT counts in the same query using the grouping aggregator typically used with grouping sets. This applies only to exact counts, that is, when druid.sql.planner.useApproximateCountDistinct is false. To enable it, set druid.sql.planner.useGroupingSetForExactDistinct to true.

# SQL ARRAY_AGG and STRING_AGG aggregator functions

The ARRAY_AGG aggregation function has been added, to allow accumulating values or distinct values of a column into a single array result. This release also adds STRING_AGG, which is similar to ARRAY_AGG, except it joins the array values into a single string with a supplied 'delimiter' and it ignores null values. Both of these functions accept a maximum size parameter to control maximum result size, and will fail if this value is exceeded. See SQL documentation for additional details.
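As a rough illustration of the two functions described above, the sketch below builds a request body for the Druid SQL HTTP endpoint. The datasource `visits`, the columns `country` and `city`, and the size value 4096 are hypothetical; the trailing numeric argument is the optional maximum size mentioned in the text.

```python
import json

# Hypothetical datasource ("visits") and columns ("country", "city").
# The last argument of each aggregator is the optional maximum result size.
SQL = """
SELECT
  country,
  ARRAY_AGG(DISTINCT city, 4096) AS cities,
  STRING_AGG(city, ',', 4096) AS city_list
FROM visits
GROUP BY country
"""


def sql_request_body(sql: str) -> str:
    """Serialize a query as a JSON body for POST /druid/v2/sql."""
    # Collapse newlines and indentation so the query travels as one line.
    return json.dumps({"query": " ".join(sql.split())})


body = sql_request_body(SQL)
```

If the aggregated result for a group grows past the supplied size, the query is expected to fail rather than truncate, so the size should be chosen with the largest group in mind.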

# Bitwise math function expressions and aggregators

Several new SQL functions for performing 'bitwise' math have been added (along with corresponding native expressions), including BITWISE_AND, BITWISE_OR, BITWISE_XOR and so on. Additionally, aggregation functions BIT_AND, BIT_OR, and BIT_XOR have been added to accumulate values in a column with the corresponding bitwise function. For complete details see SQL documentation.
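To make the aggregator semantics concrete, here is a minimal Python model of what BIT_AND, BIT_OR, and BIT_XOR compute over a column of integers. It is only a sketch of the arithmetic and ignores null handling; the function names mirror the SQL names but are plain Python helpers, not a Druid API.

```python
from functools import reduce
from operator import and_, or_, xor


def bit_and(values):
    """Fold a column of integers with bitwise AND."""
    return reduce(and_, values)


def bit_or(values):
    """Fold a column of integers with bitwise OR."""
    return reduce(or_, values)


def bit_xor(values):
    """Fold a column of integers with bitwise XOR."""
    return reduce(xor, values)


# Hypothetical column of permission flags, one value per row.
flags = [0b1110, 0b0111, 0b0110]
```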

# Human readable number format functions

Three new SQL and native expression number format functions have been added in Druid 0.22.0: HUMAN_READABLE_BINARY_BYTE_FORMAT, HUMAN_READABLE_DECIMAL_BYTE_FORMAT, and HUMAN_READABLE_DECIMAL_FORMAT. They format numeric query results in a more human-friendly way. For more information see SQL documentation.

# Expression aggregator

Druid 0.22.0 adds a new 'native' JSON query expression aggregator function, that lets you use Druid native expressions to perform "fold" (alternatively known as "reduce") operations to accumulate some value on any number of input columns. This adds significant flexibility to what can be done in a Druid aggregator, similar in a lot of ways to what was possible with the Javascript aggregator, but in a much safer, sandboxed manner.

Expressions now being able to perform a "fold" on input columns also really rounds out the abilities of native expressions in addition to the previously possible "map" (expression virtual columns), "filter" (expression filters) and post-transform (expression post-aggregators) functions.

Since this uses expressions, performance is not yet optimal, and it is not directly documented yet, but it is the underlying technology behind the SQL ARRAY_AGG, STRING_AGG, and bitwise aggregator functions also added in this release.
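The "fold" idea can be sketched in a few lines of Python. This is a conceptual model only, not the aggregator's JSON spec: the row layout, the two expressions, and the split of rows into segments with a separate combine step are all assumptions made for illustration.

```python
from functools import reduce


def fold(acc, row):
    """Accumulate one row into the running value (here: sum of x * y)."""
    return acc + row["x"] * row["y"]


def combine(left, right):
    """Merge two partial results, for example from different segments."""
    return left + right


# Hypothetical rows, grouped by the segment they live in.
segments = [
    [{"x": 1, "y": 2}, {"x": 3, "y": 4}],
    [{"x": 5, "y": 6}],
]

# Each segment is folded independently from the same initial value ...
partials = [reduce(fold, rows, 0) for rows in segments]
# ... and the partial results are then merged into the final answer.
total = reduce(combine, partials, 0)
```

Keeping the per-row step and the merge step separate is what lets an accumulation of this kind run in parallel over many segments.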

# SQL query routing improvements

Druid 0.22 adds some new facilities to provide extension writers with enhanced control over how queries are routed between Druid routers and brokers. The first adds a new manual broker selection strategy to the Druid router, which allows a query to manually specify which Druid brokers a query should be sent to based on a query context parameter brokerService to any broker pool defined in druid.router.tierToBrokerMap (this corresponds to the 'service name' of the broker set, druid.service).

The second new feature allows the Druid router to parse and examine SQL queries so that broker selection strategies can also function for SQL queries. This can be enabled by setting druid.router.sql.enable to true. This does not affect JDBC queries, which use a different mechanism to facilitate "sticky" connections to a single broker.
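The manual selection described above can be modeled roughly as follows. This is a sketch of the decision, not the router's implementation: the function name, the tier names, and the fallback to a default service when `brokerService` is absent or unknown are assumptions.

```python
def select_broker_service(query_context, tier_to_broker_map, default_service):
    """Pick the broker service named by the brokerService context key.

    Falls back to default_service when the key is missing or does not name
    a broker pool listed in the tier-to-broker map (assumed behavior).
    """
    requested = query_context.get("brokerService")
    if requested in tier_to_broker_map.values():
        return requested
    return default_service


# Hypothetical equivalent of druid.router.tierToBrokerMap.
tier_map = {"hot": "druid:broker-hot", "_default_tier": "druid:broker-cold"}
```

A client would opt in per query by adding `"brokerService": "druid:broker-hot"` to the query context.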

# Avatica protobuf JDBC Support

Druid now supports using Avatica Protobuf JDBC connections, such as for use with the Avatica Golang Driver, and has a separate endpoint from the JSON JDBC uri.

String url = "jdbc:avatica:remote:url=http://localhost:8082/druid/v2/sql/avatica-protobuf/;serialization=protobuf";

# Improved query error logging

Query exceptions are now logged at ERROR level instead of WARN level and include additional information to help troubleshoot query failures. Additionally, a new query context flag, enableQueryDebugging, has been added that includes stack traces in these query error logs, to provide even more information without the need to enable logs at the DEBUG level.

# Streaming Ingestion

# Task autoscaling for Kafka and Kinesis streaming ingestion

Druid 0.22.0 now offers experimental support for dynamic Kafka and Kinesis task scaling. The included strategies are driven by periodic measurement of stream lag (which is based on message count for Kafka, and difference of age between the message iterator and the oldest message for Kinesis), and will adjust the number of tasks based on the amount of 'lag' and several configuration parameters. See Kafka and Kinesis documentation for complete information.
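A lag-driven scaling decision of the kind described above might look like the sketch below. It is a simplified model, not Druid's strategy: every parameter name is hypothetical and does not correspond to a supervisor spec field, and the "fraction of samples over a threshold" rule is an assumption.

```python
def next_task_count(lag_samples, current, *, scale_out_threshold,
                    scale_in_threshold, out_fraction, in_fraction,
                    step, task_min, task_max):
    """Return the task count to use after one evaluation period."""
    n = len(lag_samples)
    # Share of lag measurements that were too high or comfortably low.
    above = sum(1 for lag in lag_samples if lag > scale_out_threshold) / n
    below = sum(1 for lag in lag_samples if lag < scale_in_threshold) / n
    if above >= out_fraction:
        return min(current + step, task_max)  # falling behind: add tasks
    if below >= in_fraction:
        return max(current - step, task_min)  # mostly idle: remove tasks
    return current                            # otherwise leave unchanged


# Hypothetical settings shared by the two calls in the usage note.
settings = dict(scale_out_threshold=800, scale_in_threshold=50,
                out_fraction=0.5, in_fraction=0.7,
                step=1, task_min=1, task_max=4)
```

With these settings, `next_task_count([900, 950, 100, 980], 2, **settings)` scales out by one task, while `next_task_count([10, 20, 900, 15], 2, **settings)` scales in by one.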

# Avro and Protobuf streaming InputFormat and Confluent Schema Registry Support

Druid streaming ingestion now has support for Avro and Protobuf in the updated InputFormat specification format, which replaces the deprecated firehose/parser specification used by legacy Druid streaming formats. Alongside this, comes support for obtaining schemas for these formats from Confluent Schema Registry. See data formats documentation for further information.

# Kafka ingestion support for specifying group.id

Druid Kafka streaming ingestion now optionally supports specifying group.id on the connections Druid tasks make to the Kafka brokers. This is useful for accessing clusters which require this be set as part of authorization, and can be specified in the consumerProperties section of the Kafka supervisor spec. See Kafka ingestion documentation for more details.
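For orientation, the fragment below shows where group.id would sit inside the consumerProperties section. Only that placement comes from the text above; the topic name, broker address, and group name are hypothetical, and the rest of the supervisor spec is omitted.

```python
import json

# Fragment of a Kafka supervisor ioConfig; all values are placeholders.
io_config = {
    "topic": "events",
    "consumerProperties": {
        "bootstrap.servers": "kafka01:9092",
        # Consumer group required by the cluster's authorization rules.
        "group.id": "druid-ingest",
    },
}

io_config_json = json.dumps(io_config, indent=2)
```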

# Native Batch Ingestion

# Support for using deep storage for intermediary shuffle data

Druid native 'perfect rollup' 2-phase ingestion tasks now support using deep storage as a shuffle location, as an alternative to local disks on middle-managers or indexers. To use this feature, set druid.processing.intermediaryData.storage.type to deepstore, which uses the configured deep storage type.

Note - With "deepstore" type, data is stored in shuffle-data directory under the configured deep storage path, auto clean up for this directory is not supported yet. One can setup cloud storage lifecycle rules for auto clean up of data at shuffle-data prefix location.

# Improved native batch ingestion task memory usage

Druid native batch ingestion has received a new configuration option, druid.indexer.task.batchProcessingMode which introduces two new operating modes that should allow batch ingestion to operate with a smaller and more predictable heap memory usage footprint. The CLOSED_SEGMENTS_SINKS mode is the most aggressive, and should have the smallest memory footprint, and works by eliminating in memory tracking and mmap of intermediary segments produced during segment creation, but isn't super well tested at this point so considered experimental...

druid-0.21.1

Apache Druid 0.21.1 is a bug fix release that fixes a few regressions with the 0.21 release. The first is an issue with the published Docker image, which causes containers to fail to start due to volume permission issues, described in #11166 and fixed in #11167. This release also fixes an issue caused by a bug in the upgraded Jetty version which was released in 0.21, described in #11206 and fixed in #11207. Finally, a web console regression related to field validation has been fixed in #11228.

# Bug fixes

#11167 fix docker volume permissions

#11207 Upgrade jetty version

#11228 Web console: Fix required field treatment

#11299 Fix permission problems in docker

# Credits

Thanks to everyone who contributed to this release!

druid-0.21.0

Apache Druid 0.21.0 contains around 120 new features, bug fixes, performance enhancements, documentation improvements, and additional test coverage from 36 contributors. Refer to the complete list of changes and everything tagged to the milestone for further details.

# New features

# Operation

# Service discovery and leader election based on Kubernetes

The new Kubernetes extension supports service discovery and leader election based on Kubernetes. This extension works in conjunction with the HTTP-based server view (druid.serverview.type=http) and task management (druid.indexer.runner.type=httpRemote) to allow you to run a Druid cluster with zero ZooKeeper dependencies. This extension is still experimental. See Kubernetes extension for more details.

# New dynamic coordinator configuration to limit the number of segments when finding a candidate segment for segment balancing

You can set the percentOfSegmentsToConsiderPerMove to limit the number of segments considered when picking a candidate segment to move. The candidates are searched up to maxSegmentsToMove * 2 times. This new configuration prevents Druid from iterating through all available segments to speed up the segment balancing process, especially if you have lots of available segments in your cluster. See Coordinator dynamic configuration for more details.
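The effect of the setting is simple arithmetic, sketched below. The helper name is hypothetical and rounding up is an assumption; the point is only that the balancer examines a fraction of the cluster's segments instead of all of them.

```python
import math


def segments_to_consider(total_segments: int, percent: float) -> int:
    """Upper bound on segments examined per move for a given percentage."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be in the range (0, 100]")
    # Rounding up is an assumption, so that tiny clusters consider at least one.
    return math.ceil(total_segments * percent / 100)
```

For a cluster with 200,000 segments, a value of 25 caps each search at 50,000 segments.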

# status and selfDiscovered endpoints for Indexers

The Indexer now supports status and selfDiscovered endpoints. See Processor information APIs for details.

# Querying

# New grouping aggregator function

You can use the new grouping aggregator SQL function with GROUPING SETS or CUBE to indicate which grouping dimensions are included in the current grouping set. See Aggregation functions for more details.
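The value returned by the function can be read as a bitmask. The model below assumes standard SQL GROUPING semantics (first argument is the most significant bit; a bit is 1 when that dimension is not part of the current grouping set); the column names are hypothetical.

```python
def grouping(args, grouping_set):
    """Model of GROUPING(args...) for one grouping set.

    Each argument contributes one bit, first argument most significant:
    0 if the dimension is grouped on, 1 if it is rolled up.
    """
    value = 0
    for column in args:
        value = (value << 1) | (0 if column in grouping_set else 1)
    return value


dims = ["country", "city"]
```

Under this model, the subtotal rows for `country` alone carry the value 1 and the grand total row carries 3, which lets a client tell a rolled-up null apart from a real null in the data.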

# Improved missing argument handling in expressions and functions

Expression processing can now be vectorized when inputs are missing, for example when a column does not exist. When an argument is missing in an expression, Druid can now infer the proper type of result based on non-null arguments. For instance, for longColumn + nonExistentColumn, nonExistentColumn is treated as (long) 0 instead of (double) 0.0. Finally, in default null handling mode, math functions can produce output properly by treating missing arguments as zeros.

# Allow zero period for TIMESTAMPADD

TIMESTAMPADD function now allows zero period. This functionality is required for some BI tools such as Tableau.

# Ingestion

# Native parallel ingestion no longer requires explicit intervals

Parallel task no longer requires you to set explicit intervals in granularitySpec. If intervals are missing, the parallel task executes an extra step for input sampling which collects the intervals to index.

# Old Kafka version support

Druid now supports Apache Kafka versions older than 0.11. To read from an old version of Kafka, set isolation.level to read_uncommitted in consumerProperties. Only version 0.10.2.1 has been tested as of this release. See Kafka supervisor configurations for details.

# Multi-phase segment merge for native batch ingestion

A new tuningConfig, maxColumnsToMerge, controls how many segments can be merged at the same time in the task. This configuration can be useful to avoid high memory pressure during the merge. See tuningConfig for native batch ingestion for more details.

# Native re-ingestion is less memory intensive

Parallel tasks now sort segments by ID before assigning them to subtasks. This sorting minimizes the number of time chunks for each subtask to handle. As a result, each subtask is expected to use less memory, especially when a single Parallel task is issued to re-ingest segments covering a long time period.

# Web console

# Updated and improved web console styles

The new web console styles make better use of the Druid brand colors and standardize paddings and margins throughout. The icon and background colors are now derived from the Druid logo.

# Partitioning information is available in the web console

The web console now shows datasource partitioning information on the new Segment granularity and Partitioning columns.

Segment granularity column in the Datasources tab

Partitioning column in the Segments tab

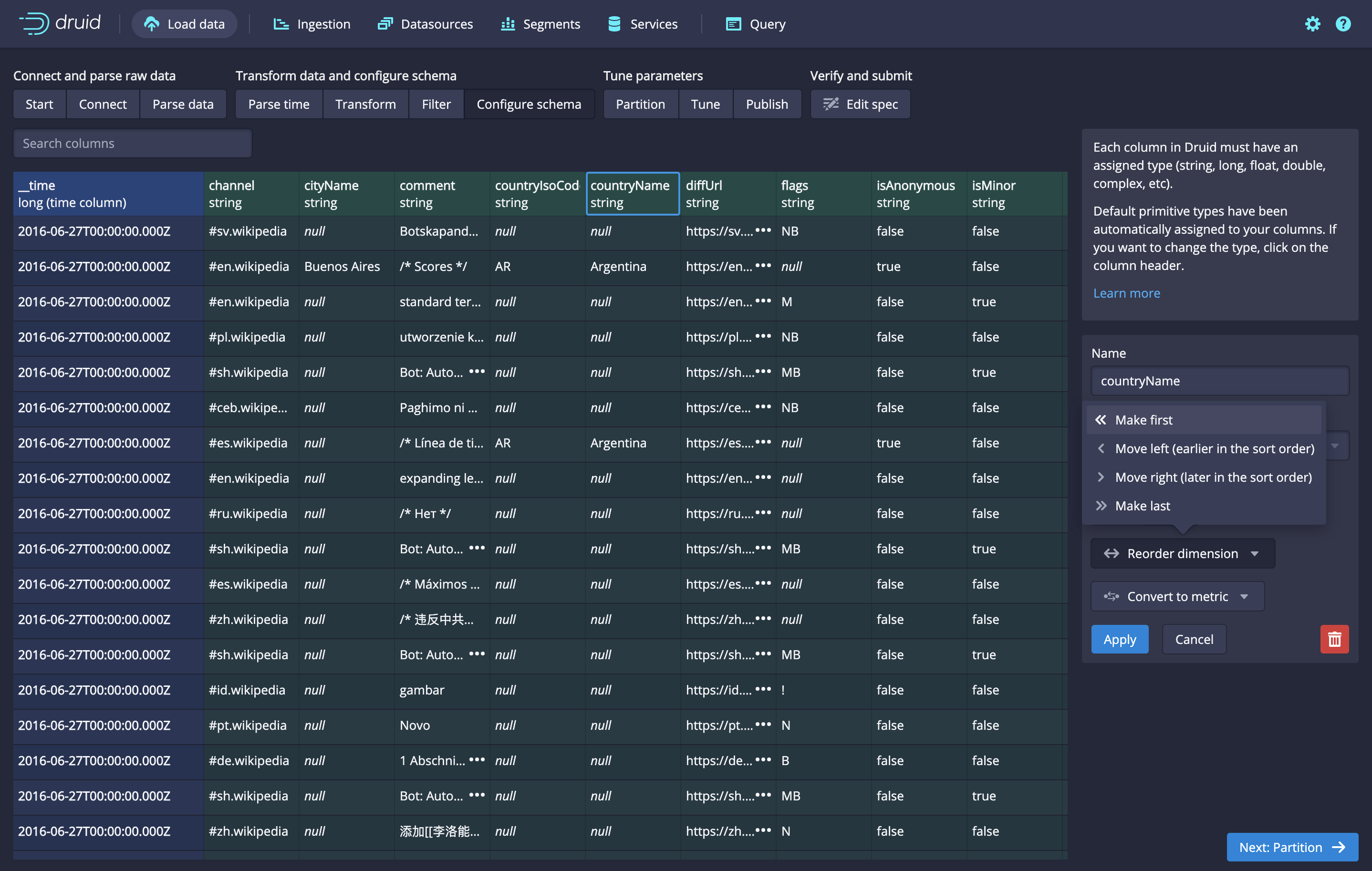

# The column order in the Schema table matches the dimensionsSpec

The Schema table now reflects the dimension ordering in the dimensionsSpec.

# Metrics

# Coordinator duty runtime metrics

The Coordinator performs several 'duty' tasks, such as segment balancing and loading new segments. Two new metrics help you analyze how fast the Coordinator is executing these duties.

- coordinator/time: the time for an individual duty to execute

- coordinator/global/time: the time for the whole duties runnable to execute

# Query timeout metric

A new metric provides the number of timed out queries. Previously timed out queries were treated as interrupted and included in the query/interrupted/count (see Changed HTTP status codes for query errors for more details).

query/timeout/count: the number of timed out queries during the emission period

# Shuffle metrics for batch ingestion

Two new metrics provide shuffle statistics for MiddleManagers and Indexers. These metrics have the supervisorTaskId as their dimension.

- ingest/shuffle/bytes: number of bytes shuffled per emission period

- ingest/shuffle/requests: number of shuffle requests per emission period

To enable the shuffle metrics, add org.apache.druid.indexing.worker.shuffle.ShuffleMonitor in druid.monitoring.monitors. See Shuffle metrics for more details.

# New clock-drift safe metrics monitor scheduler

The default metrics monitor scheduler is implemented based on ScheduledThreadPoolExecutor which is prone to unbounded clock drift. A new monitor scheduler, ClockDriftSafeMonitorScheduler, overcomes this limitation. To use the new scheduler, set druid.monitoring.schedulerClassName to org.apache.druid.java.util.metrics.ClockDriftSafeMonitorScheduler in the runtime.properties file.

# Others

# New extension for a password p...

druid-0.20.2

Apache Druid 0.20.2 introduces new configurations to address CVE-2021-26919: Authenticated users can execute arbitrary code from malicious MySQL database systems. Users are recommended to enable the new configurations below to mitigate vulnerable JDBC connection properties. These configurations apply to all JDBC connections for ingestion and lookups, but not to the metadata store. See security configurations for more details.

- druid.access.jdbc.enforceAllowedProperties: When true, Druid applies druid.access.jdbc.allowedProperties to JDBC connections starting with jdbc:postgresql: or jdbc:mysql:. When false, Druid allows any kind of JDBC connections without JDBC property validation. This config is set to false by default to not break rolling upgrade. This config is deprecated now and can be removed in a future release. The allow list will be always enforced in that case.

- druid.access.jdbc.allowedProperties: Defines a list of allowed JDBC properties. Druid always enforces the list for all JDBC connections starting with jdbc:postgresql: or jdbc:mysql: if druid.access.jdbc.enforceAllowedProperties is set to true. This option is tested against MySQL connector 5.1.48 and PostgreSQL connector 42.2.14. Other connector versions might not work.

- druid.access.jdbc.allowUnknownJdbcUrlFormat: When false, Druid only accepts JDBC connections starting with jdbc:postgresql: or jdbc:mysql:. When true, Druid allows JDBC connections to any kind of database, but only enforces druid.access.jdbc.allowedProperties for PostgreSQL and MySQL.
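The interaction of the three settings can be summarized with a small model. This is a sketch of the rules as described above, not Druid's validation code: the function name, the keyword arguments, and the way properties are parsed out of the URL query string are assumptions.

```python
from urllib.parse import parse_qsl, urlsplit

KNOWN_PREFIXES = ("jdbc:postgresql:", "jdbc:mysql:")


def is_connection_allowed(url, allowed_properties, *,
                          enforce_allowed_properties=True,
                          allow_unknown_url_format=False):
    """Decide whether a JDBC URL passes the allow-list rules."""
    if not url.startswith(KNOWN_PREFIXES):
        # Other databases are accepted only if unknown formats are allowed,
        # and their properties are not validated.
        return allow_unknown_url_format
    if not enforce_allowed_properties:
        return True
    # Drop the "jdbc:" prefix so the rest parses like an ordinary URL.
    query = urlsplit(url[len("jdbc:"):]).query
    used = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
    return used <= set(allowed_properties)


# Hypothetical allow list.
allowed = ["useSSL", "requireSSL"]
```

For example, `jdbc:mysql://db:3306/lookups?useSSL=true` passes with this allow list, while the same URL with an extra `autoDeserialize=true` property is rejected.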

druid-0.20.1

Apache Druid 0.20.1 is a bug fix release that addresses CVE-2021-25646: Authenticated users can override system configurations in their requests which allows them to execute arbitrary code.

# Known issues

# Incorrect Druid version in docker-compose.yml

The Druid version is specified as 0.20.0 in the docker-compose.yml file. We recommend updating the version to 0.20.1 before you run a Druid cluster using docker compose.

druid-0.20.0

Apache Druid 0.20.0 contains around 160 new features, bug fixes, performance enhancements, documentation improvements, and additional test coverage from 36 contributors. Refer to the complete list of changes and everything tagged to the milestone for further details.

# New Features

# Ingestion

# Combining InputSource

A new combining InputSource has been added, allowing the user to combine multiple input sources during ingestion. Please see https://druid.apache.org/docs/0.20.0/ingestion/native-batch.html#combining-input-source for more details.

# Automatically determine numShards for parallel ingestion hash partitioning

When hash partitioning is used in parallel batch ingestion, it is no longer necessary to specify numShards in the partition spec. Druid can now automatically determine a number of shards by scanning the data in a new ingestion phase that determines the cardinalities of the partitioning key.

# Subtask file count limits for parallel batch ingestion

The size-based splitHintSpec now supports a new maxNumFiles parameter, which limits how many files can be assigned to individual subtasks in parallel batch ingestion.

The segment-based splitHintSpec used for reingesting data from existing Druid segments also has a new maxNumSegments parameter which functions similarly.

Please see https://druid.apache.org/docs/0.20.0/ingestion/native-batch.html#split-hint-spec for more details.
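The effect of a file-count cap on top of a size cap can be sketched as a greedy packing loop. This is an illustrative model, not Druid's splitting code; the function name is hypothetical, and `max_split_size` stands in for the size limit that the size-based spec already applies.

```python
def plan_splits(file_sizes, max_split_size, max_num_files):
    """Greedily pack files into splits, honoring both limits."""
    splits, current, current_bytes = [], [], 0
    for size in file_sizes:
        # Close the current split if adding this file would break a limit.
        full = current and (
            current_bytes + size > max_split_size
            or len(current) >= max_num_files
        )
        if full:
            splits.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        splits.append(current)
    return splits
```

With many tiny files, the size limit alone could assign thousands of files to one subtask; the file-count cap closes the split early instead.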

# Task slot usage metrics

New task slot usage metrics have been added. Please see the entries for the taskSlot metrics at https://druid.apache.org/docs/0.20.0/operations/metrics.html#indexing-service for more details.

# Compaction

# Support for all partitioning schemes for auto-compaction

A partitioning spec can now be defined for auto-compaction, allowing users to repartition their data at compaction time. Please see the documentation for the new partitionsSpec property in the compaction tuningConfig for more details:

https://druid.apache.org/docs/0.20.0/configuration/index.html#compaction-tuningconfig

# Auto-compaction status API

A new coordinator API which shows the status of auto-compaction for a datasource has been added. The new API shows whether auto-compaction is enabled for a datasource, and a summary of how far compaction has progressed.

The web console has also been updated to show this information:

https://user-images.githubusercontent.com/177816/94326243-9d07e780-ff57-11ea-9f80-256fa08580f0.png

Please see https://druid.apache.org/docs/latest/operations/api-reference.html#compaction-status for details on the new API, and https://druid.apache.org/docs/latest/operations/metrics.html#coordination for information on new related compaction metrics.

# Querying

# Query segment pruning with hash partitioning

Druid now supports query-time segment pruning (excluding certain segments as read candidates for a query) for hash partitioned segments. This optimization applies when all of the partitionDimensions specified in the hash partition spec during ingestion time are present in the filter set of a query, and the filters in the query filter on discrete values of the partitionDimensions (e.g., selector filters). Segment pruning with hash partitioning is not supported with non-discrete filters such as bound filters.

For existing users with existing segments, you will need to reingest those segments to take advantage of this new feature, as the segment pruning requires a partitionFunction to be stored together with the segments, which does not exist in segments created by older versions of Druid. It is not necessary to specify the partitionFunction explicitly, as the default is the same partition function that was used in prior versions of Druid.

Note that segments created with a default partitionDimensions value (partition by all dimensions + the time column) cannot be pruned in this manner; the segments need to be created with an explicit partitionDimensions.
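The pruning rule can be illustrated with a toy model. It is a sketch under stated assumptions: `zlib.crc32` stands in for the real partition function, and the segment dictionaries with `partitionDimensions`, `numBuckets`, and `bucketId` keys are a hypothetical layout chosen for the example.

```python
import zlib


def bucket_for(values, num_buckets):
    """Map partition-dimension values to a bucket (stand-in hash)."""
    key = "\x00".join(values).encode("utf-8")
    return zlib.crc32(key) % num_buckets


def prune_segments(segments, filter_values):
    """Keep only segments that could contain rows matching the filters.

    filter_values maps a dimension name to the single value it must equal.
    """
    kept = []
    for segment in segments:
        dims = segment["partitionDimensions"]
        # Pruning needs explicit partition dimensions, all of them filtered.
        if not dims or any(d not in filter_values for d in dims):
            kept.append(segment)
            continue
        target = bucket_for([filter_values[d] for d in dims],
                            segment["numBuckets"])
        if segment["bucketId"] == target:
            kept.append(segment)
    return kept


# Four hypothetical segments of one time chunk, hash partitioned on country.
segments = [
    {"partitionDimensions": ["country"], "numBuckets": 4, "bucketId": i}
    for i in range(4)
]
```

A filter on `country` alone narrows the four segments to one, whereas a filter on some other dimension leaves all four as read candidates.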

# Vectorization

To enable vectorization features, please set the druid.query.default.context.vectorizeVirtualColumns property to true or set the vectorize property in the query context. Please see https://druid.apache.org/docs/0.20.0/querying/query-context.html#vectorization-parameters for more information.

# Vectorization support for expression virtual columns

Expression virtual columns now have vectorization support (depending on the expressions being used), which can result in a 3-5x performance improvement in some cases.

Please see https://druid.apache.org/docs/0.20.0/misc/math-expr.html#vectorization-support for details on the specific expressions that support vectorization.

# More vectorization support for aggregators

Vectorization support has been added for several aggregation types: numeric min/max aggregators, variance aggregators, ANY aggregators, and aggregators from the druid-histogram extension.

#10260 - numeric min/max

#10304 - histogram

#10338 - ANY

#10390 - variance

We've observed about a 1.3x to 1.8x performance improvement in some cases with vectorization enabled for the min, max, and ANY aggregators, and about 1.04x to 1.07x with the histogram aggregator.

# offset parameter for GroupBy and Scan queries

It is now possible to set an offset parameter for GroupBy and Scan queries, which tells Druid to skip a number of rows when returning results. Please see https://druid.apache.org/docs/0.20.0/querying/limitspec.html and https://druid.apache.org/docs/0.20.0/querying/scan-query.html for details.
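One natural use of the offset parameter is paging through results. The helper below is a minimal sketch that builds a native Scan query body for a given page; the helper name, datasource, and interval are hypothetical.

```python
def scan_page(datasource, interval, page, page_size):
    """Build a Scan query that returns one page of rows (pages start at 0)."""
    return {
        "queryType": "scan",
        "dataSource": datasource,
        "intervals": [interval],
        # Skip the rows that belong to earlier pages ...
        "offset": page * page_size,
        # ... and return at most one page worth of rows.
        "limit": page_size,
    }


third_page = scan_page("wikipedia", "2016-06-27/2016-06-28", 2, 100)
```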

# OFFSET clause for SQL queries

Druid SQL queries now support an OFFSET clause. Please see https://druid.apache.org/docs/0.20.0/querying/sql.html#offset for details.

# Substring search operators

Druid has added new substring search operators in its expression language and for SQL queries.

Please see documentation for CONTAINS_STRING and ICONTAINS_STRING string functions for Druid SQL (https://druid.apache.org/docs/0.20.0/querying/sql.html#string-functions) and documentation for contains_string and icontains_string for the Druid expression language (https://druid.apache.org/docs/0.20.0/misc/math-expr.html#string-functions).

We've observed about a 2.5x performance improvement in some cases by using these functions instead of STRPOS.

# UNION ALL operator for SQL queries

Druid SQL queries now support the UNION ALL operator, which fuses the results of multiple queries together. Please see https://druid.apache.org/docs/0.20.0/querying/sql.html#union-all for details on what query shapes are supported by this operator.

# Cluster-wide default query context settings

It is now possible to set cluster-wide default query context properties by adding a configuration of the form druid.query.default.context.*, with * replaced by the property name.

# Other features

# Improved retention rules UI

The retention rules UI in the web console has been improved. It now provides suggestions and basic validation in the period dropdown, shows the cluster default rules, and makes editing the default rules more accessible.

# Redis cache extension enhancements

The Redis cache extension now supports Redis Cluster, selecting which database is used, connecting to password-protected servers, and period-style configurations for the `exp...

druid-0.19.0

Apache Druid 0.19.0 contains around 200 new features, bug fixes, performance enhancements, documentation improvements, and additional test coverage from 51 contributors. Refer to the complete list of changes and everything tagged to the milestone for further details.

# New Features

# GroupBy and Timeseries vectorized query engines enabled by default

Vectorized query engines for GroupBy and Timeseries queries were introduced in Druid 0.16, as an opt in feature. Since then we have extensively tested these engines and feel that the time has come for these improvements to find a wider audience. Note that not all of the query engine is vectorized at this time, but this change makes it so that any query which is eligible to be vectorized will do so. This feature may still be disabled if you encounter any problems by setting druid.query.vectorize to false.

# Druid native batch support for Apache Avro Object Container Files

New in Druid 0.19.0, native batch indexing now supports Apache Avro Object Container Format encoded files, allowing batch ingestion of Avro data without needing an external Hadoop cluster. Check out the docs for more details.

# Updated Druid native batch support for SQL databases

An 'SqlInputSource' has been added in Druid 0.19.0 to work with the new native batch ingestion specifications first introduced in Druid 0.17, deprecating the SqlFirehose. Like the 'SqlFirehose' it currently supports MySQL and PostgreSQL, using the driver from those extensions. This is a relatively low level ingestion task, and the operator must take care to manually ensure that the correct data is ingested, either by specially crafting queries to ensure no duplicate data is ingested for appends, or ensuring that the entire set of data is queried to be replaced when overwriting. See the docs for more operational details.

# Apache Ranger based authorization

A new extension in Druid 0.19.0 adds an Authorizer which implements access control for Druid, backed by Apache Ranger. Please see [the extension documentation](https://druid.apache.org/docs/0.19.0/development/extensions-core/druid-ranger-security.html) and Authentication and Authorization for more information on the basic facilities this extension provides.

# Alibaba Object Storage Service support

A new 'contrib' extension has been added for Alibaba Cloud Object Storage Service (OSS) to provide both deep storage and usage as a batch ingestion input source. Since this is a 'contrib' extension, it is not packaged by default in the binary distribution. Please see community extensions for more details on how to use it in your cluster.

# Ingestion worker autoscaling for Google Compute Engine

Another new 'contrib' extension in 0.19.0 adds ingestion worker autoscaling for Google Compute Engine, which allows a Druid Overlord to provision or terminate worker instances (MiddleManagers or Indexers) whenever there are pending tasks or idle workers. Unlike the Amazon Web Services ingestion autoscaling extension, which provisions and terminates instances directly without using an Auto Scaling Group, the GCE autoscaler uses Managed Instance Groups to more closely align with how operators are likely to provision their clusters in GCE. Like other 'contrib' extensions, it is not packaged by default in the binary distribution. Please see community extensions for more details on how to use it in your cluster.

# REGEXP_LIKE

A new REGEXP_LIKE function has been added to Druid SQL and native expressions, which behaves similarly to LIKE, except that it uses regular expressions for the pattern.

# Web console lookup management improvements

The Druid 0.19 web console also includes some useful improvements to the lookup table management interface. Creating and editing lookups is now done with a form to accept user input, rather than a raw text editor to enter the JSON spec.

Additionally, clicking the magnifying glass icon next to a lookup will now allow displaying the first 5000 values of that lookup.

# New Coordinator per datasource 'loadstatus' API

A coordinator API can make it easier to determine if the latest published segments are available for querying. This is similar to the existing coordinator 'loadstatus' API, but is datasource specific, may specify an interval, and can optionally live refresh the metadata store snapshot to get the latest up to date information. Note that operators should still exercise caution when using this API to query large numbers of segments, especially if forcing a metadata refresh, as it can potentially be a 'heavy' call on large clusters.

# Native batch append support for range and hash partitioning

Part bug fix, part new feature, Druid native batch (once again) supports appending new data to existing time chunks when those time chunks were partitioned with 'hash' or 'range' partitioning algorithms. Note that the appended segments currently support only 'dynamic' partitioning, and that after rolling back to an older version these appended segments will not be recognized by Druid. Before rolling back to a previous version, compact these appended segments with the rest of the time chunk so that the time chunk has a homogeneous partitioning scheme.

# Bug fixes

Druid 0.19.0 contains 65 bug fixes. You can see the complete list here.

# Fix for batch ingested 'dynamic' partitioned segments not becoming queryable atomically

Druid 0.19.0 fixes an important query correctness issue, where 'dynamic' partitioned segments produced by a batch ingestion task were not tracking the overall number of partitions. This had the implication that when these segments came online, they did not do so as a complete set, but rather as individual segments, meaning that there would be periods of swapping where results could be queried from an incomplete partition set within a time chunk.

# Fix to allow 'hash' and 'range' partitioned segments with empty buckets to now be queryable

Prior to 0.19.0, Druid had a bug when using hash or ranged partitioning where if data skew was such that any of the buckets were 'empty' after ingesting, the partitions would never be recognized as 'complete' and so never become queryable. Druid 0.19.0 fixes this issue by adjusting the schema of the partitioning spec. These changes to the json format should be backwards compatible, however rolling back to a previous version will again make these segments no longer queryable.

# Incorrect balancer behavior

A bug in Druid versions prior to 0.19.0 allowed for (incorrect) coordinator operation in the event druid.server.maxSize was not set. This bug would allow segments to load, and effectively randomly balance them in the cluster (regardless of what balancer strategy was actually configured) if all historicals did not have this value set. This bug has been fixed, but as a result druid.server.maxSize must be set to the sum of the segment cache location sizes for historicals, or else they will not load segments.
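The required value is just the sum over the configured cache locations, as the short sketch below shows. The helper name and the example paths and sizes are hypothetical; the list mirrors the shape of a segment cache locations setting with a `maxSize` per entry.

```python
def required_server_max_size(locations):
    """Sum the maxSize of every segment cache location on a Historical."""
    return sum(location["maxSize"] for location in locations)


# Hypothetical segment cache locations for one Historical.
locations = [
    {"path": "/mnt/disk1/segment-cache", "maxSize": 300_000_000_000},
    {"path": "/mnt/disk2/segment-cache", "maxSize": 200_000_000_000},
]

max_size = required_server_max_size(locations)
```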

# Upgrading to Druid 0.19.0

Please be aware of the f...

druid-0.18.1

Apache Druid 0.18.1 is a bug fix release that fixes a streaming ingestion failure with Avro, an ingestion performance issue, an upgrade issue with HLLSketch, and other bugs. The complete list of bug fixes can be found at https://github.com/apache/druid/pulls?q=is%3Apr+milestone%3A0.18.1+label%3ABug+is%3Aclosed.

# Bug fixes

- #9823 rolls back the new Kinesis lag metrics, which can stall the Kinesis supervisor indefinitely with a large number of shards.

- #9734 fixes the Streaming ingestion failure issue when you use a data format other than CSV or JSON.

- #9812 fixes filtering on boolean values during transformation.

- #9723 fixes slow ingestion performance due to frequent flushes on local file system.

- #9751 reverts the version of datasketches-java from 1.2.0 to 1.1.0 to work around an upgrade failure with HLLSketch.

- #9698 fixes a bug in inline subquery with multi-valued dimension.

- #9761 fixes a bug in CloseableIterator which potentially leads to resource leaks in Data loader.

# Known issues

# Incorrect result of nested groupBy query on Join of subqueries

A nested groupBy query can result in an incorrect result when it is on top of a Join of subqueries and the inner and the outer groupBys have different filters. See #9866 for more details.

# Credits

Thanks to everyone who contributed to this release!

@clintropolis

@gianm

@jihoonson

@maytasm

@suneet-s

@viongpanzi

@whutjs

druid-0.18.0

Apache Druid 0.18.0 contains over 200 new features, performance enhancements, bug fixes, and major documentation improvements from 42 contributors. Check out the complete list of changes and everything tagged to the milestone.

# New Features

# Join support

Join is a key operation in data analytics. Prior to 0.18.0, Druid supported some join-related features, such as Lookups or semi-joins in SQL. However, the use cases for those features were pretty limited and, for other join use cases, users had to denormalize their datasources when they ingest data instead of joining them at query time, which could result in exploding data volume and long ingestion time.

Druid 0.18.0 supports real joins for the first time ever in its history. Druid supports INNER, LEFT, and CROSS joins for now. For native queries, the join datasource has been newly introduced to represent a join of two datasources. Currently, only the left-deep join is allowed. That means, only a table or another join datasource is allowed for the left datasource. For the right datasource, lookup, inline, or query datasources are allowed. Note that join of Druid datasources is not supported yet. There should be only one table datasource in the same join query.
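To make the shape of the join datasource concrete, here is a minimal sketch that assembles a native groupBy query whose dataSource is a left-deep join of a table with a lookup. The table name `sales`, the lookup name `store_to_country`, the column names, and the interval are all hypothetical; the helper `join_datasource` is not part of Druid, it only builds the JSON.

```python
import json


def join_datasource(left, right, right_prefix, condition, join_type):
    """Build the JSON object for a native 'join' datasource."""
    return {
        "type": "join",
        "left": left,              # a table or another join (left-deep only)
        "right": right,            # a lookup, inline, or query datasource
        "rightPrefix": right_prefix,
        "condition": condition,
        "joinType": join_type,     # INNER, LEFT, or CROSS
    }


query = {
    "queryType": "groupBy",
    "dataSource": join_datasource(
        left={"type": "table", "name": "sales"},                  # hypothetical table
        right={"type": "lookup", "lookup": "store_to_country"},   # hypothetical lookup
        right_prefix="r.",
        condition='store == "r.k"',  # lookup datasources expose columns k and v
        join_type="INNER",
    ),
    "intervals": ["2020-01-01/2020-02-01"],
    "granularity": "all",
    "dimensions": ["r.v"],
    "aggregations": [{"type": "count", "name": "rows"}],
}

print(json.dumps(query, indent=2))
```

The only table datasource sits on the left, which matches the restriction that a join query has exactly one table datasource.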

Druid SQL also supports joins. Under the covers, SQL join queries are translated into one or several native queries that include join datasources. See Query translation for more details of SQL translation and best practices to write efficient queries.

When a join query is issued, the Broker first evaluates all datasources except for the base datasource which is the only table datasource in the query. The evaluation can include executing subqueries for query datasources. Once the Broker evaluates all non-base datasources, it replaces them with inline datasources and sends the rewritten query to data nodes (see the below "Query inlining in Brokers" section for more details). Data nodes use the hash join to process join queries. They build a hash table for each non-primary leaf datasource unless it already exists. Note that only lookup datasource currently has a pre-built hash table. See Query execution for more details about join query execution.
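The hash join step can be pictured with a textbook sketch: build a hash table keyed on the join column of the right-hand datasource, then probe it once per left-hand row. This is a generic illustration under simplified assumptions (in-memory rows as dictionaries, a single equality condition), not Druid's actual implementation; all names and data are hypothetical.

```python
from collections import defaultdict


def build_hash_table(rows, key):
    """Index the right-hand rows by their join key."""
    table = defaultdict(list)
    for row in rows:
        table[row[key]].append(row)
    return table


def hash_join(left_rows, left_key, right_table, right_columns,
              right_prefix, join_type="INNER"):
    """Probe the hash table once per left row and emit joined rows."""
    for row in left_rows:
        matches = right_table.get(row[left_key], [])
        if matches:
            for match in matches:
                yield {**row, **{right_prefix + c: match[c] for c in right_columns}}
        elif join_type == "LEFT":
            # LEFT join keeps unmatched left rows, with nulls on the right.
            yield {**row, **{right_prefix + c: None for c in right_columns}}


left = [
    {"store": "a", "revenue": 10},
    {"store": "b", "revenue": 5},
    {"store": "z", "revenue": 1},
]
right = [{"k": "a", "v": "US"}, {"k": "b", "v": "FR"}]

table = build_hash_table(right, "k")
inner_rows = list(hash_join(left, "store", table, ["v"], "r."))
left_rows = list(hash_join(left, "store", table, ["v"], "r.", join_type="LEFT"))
```

Building the table is the expensive part, which is why a datasource with a pre-built hash table (currently only lookups) avoids that cost.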

Joins can affect performance of your queries. In general, any queries including joins can be slower than equivalent queries against a denormalized datasource. The LOOKUP function could perform better than joins with lookup datasources. See Join performance for more details about join query performance and future plans for performance improvement.

# Query inlining in Brokers

Druid is now able to execute a nested query by inlining subqueries. Any type of subquery can sit on top of any other type, such as in the following example:

                 topN
                   |
           (join datasource)
             /          \
    (table datasource)  groupBy

To execute this query, the Broker first evaluates the leaf groupBy subquery; it sends the subquery to data nodes and collects the result. The collected result is materialized in the Broker memory. Once the Broker collects all results for the groupBy query, it rewrites the topN query by replacing the leaf groupBy with an inline datasource which has the result of the groupBy query. Finally, the rewritten query is sent to data nodes to execute the topN query.
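The rewrite described above can be sketched as a recursive walk over the datasource tree that replaces every query datasource with an inline datasource holding its materialized result. This is a simplified model, not the Broker's code: `run_subquery` is a hypothetical callback standing in for "send the subquery to data nodes and collect the result", and the table names are invented.

```python
def inline_subqueries(datasource, run_subquery):
    """Replace 'query' datasources with 'inline' datasources, bottom-up."""
    kind = datasource["type"]
    if kind == "query":
        subquery = datasource["query"]
        # Inline anything nested inside the subquery before running it.
        subquery = {
            **subquery,
            "dataSource": inline_subqueries(subquery["dataSource"], run_subquery),
        }
        column_names, rows = run_subquery(subquery)
        return {"type": "inline", "columnNames": column_names, "rows": rows}
    if kind == "join":
        return {
            **datasource,
            "left": inline_subqueries(datasource["left"], run_subquery),
            "right": inline_subqueries(datasource["right"], run_subquery),
        }
    return datasource  # table, lookup, and inline datasources are left as-is


def fake_run_subquery(subquery):
    """Stand-in for executing the subquery on data nodes."""
    return ["store", "cnt"], [["a", 1], ["b", 2]]


top_n = {
    "queryType": "topN",
    "dataSource": {
        "type": "join",
        "left": {"type": "table", "name": "sales"},
        "right": {
            "type": "query",
            "query": {
                "queryType": "groupBy",
                "dataSource": {"type": "table", "name": "stores"},
            },
        },
    },
}

rewritten = {
    **top_n,
    "dataSource": inline_subqueries(top_n["dataSource"], fake_run_subquery),
}
```

After the rewrite, the only remaining table datasource is the base table on the left, so the topN query can be sent to data nodes as a whole.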

# Query laning and prioritization

When you run multiple queries of heterogeneous workloads at a time, you may sometimes want to control the resource commitment for a query based on its priority. For example, you would want to limit the resources assigned to less important queries, so that important queries can be executed in time without being disrupted by less important ones.

Query laning allows you to control capacity utilization for heterogeneous query workloads. With laning, the broker examines and classifies a query for the purpose of assigning it to a 'lane'. Lanes have capacity limits, enforced by the Broker, that can be used to ensure sufficient resources are available for other lanes or for interactive queries (with no lane), or to limit overall throughput for queries within the lane.

Automatic query prioritization determines the query priority based on the configured strategy. The threshold-based prioritization strategy has been added; it automatically lowers the priority of queries that cross any of a configurable set of thresholds, such as how far in the past the data is, how large of an interval a query covers, or the number of segments taking part in a query.
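The idea behind threshold-based prioritization can be sketched as follows. This is only a model of the behavior described above, not Druid's implementation: the function and parameter names are hypothetical, and the threshold values are arbitrary.

```python
from datetime import datetime, timedelta, timezone


def adjusted_priority(priority, start, end, segment_count, now,
                      period_threshold, duration_threshold,
                      segment_count_threshold, adjustment):
    """Lower the priority if the query crosses any configured threshold."""
    crosses_threshold = (
        start < now - period_threshold             # data is too far in the past
        or (end - start) > duration_threshold      # interval is too long
        or segment_count > segment_count_threshold # too many segments involved
    )
    return priority - adjustment if crosses_threshold else priority


now = datetime(2020, 4, 1, tzinfo=timezone.utc)
limits = dict(
    period_threshold=timedelta(days=30),
    duration_threshold=timedelta(days=7),
    segment_count_threshold=100,
    adjustment=5,
)

# A query over the last hour keeps its priority.
recent = adjusted_priority(0, now - timedelta(hours=1), now, 3, now, **limits)
# A query over one day of data from 90 days ago is deprioritized.
old = adjusted_priority(0, now - timedelta(days=90), now - timedelta(days=89), 3, now, **limits)
```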

See Query prioritization and laning for more details.

New dimension in query metrics

Since a native query containing subqueries can be executed part-by-part, a new subQueryId has been introduced. Each subquery has a different subQueryId but the same queryId. The subQueryId is available as a new dimension in query metrics.

New configuration

A new druid.server.http.maxSubqueryRows configuration controls the maximum number of rows materialized in the Broker memory.

Please see Query execution for more details.

# SQL grouping sets

GROUPING SETS is now supported, allowing you to combine multiple GROUP BY clauses into one GROUP BY clause. This GROUPING SETS clause is internally translated into the groupBy query with subtotalsSpec. The LIMIT clause is now applied after subtotalsSpec, rather than applied to each grouping set.
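To illustrate what a GROUPING SETS clause computes, the sketch below emulates it in plain Python: each grouping set produces its own group of rows, with columns outside the set reported as null. The table, column names, and data are hypothetical, and the code models only the semantics, not how Druid evaluates subtotalsSpec.

```python
from collections import defaultdict

# Emulates a query of roughly this shape (names are hypothetical):
#   SELECT country, device, SUM(clicks) AS total
#   FROM t
#   GROUP BY GROUPING SETS ((country, device), (country), ())


def grouping_sets(rows, columns, sets, metric):
    """Aggregate `metric` once per grouping set; ungrouped columns become None."""
    result = []
    for grouping_set in sets:
        totals = defaultdict(int)
        for row in rows:
            key = tuple(row[c] if c in grouping_set else None for c in columns)
            totals[key] += row[metric]
        for key, total in totals.items():
            result.append({**dict(zip(columns, key)), "total": total})
    return result


rows = [
    {"country": "US", "device": "ios", "clicks": 1},
    {"country": "US", "device": "web", "clicks": 2},
    {"country": "FR", "device": "web", "clicks": 4},
]

result = grouping_sets(
    rows,
    columns=["country", "device"],
    sets=[("country", "device"), ("country",), ()],
    metric="clicks",
)
```

A LIMIT applied after this step would cut the combined list of rows, rather than each grouping set separately.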

# SQL Dynamic parameters

Druid now supports dynamic parameters for SQL. To use dynamic parameters, replace any literal in the query with a question mark (?) character. These question marks represent the places where the parameters will be bound at execution time. See SQL dynamic parameters for more details.
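As a small sketch of how a parameterized query might be packaged for the SQL HTTP API, the helper below pairs a query containing `?` placeholders with a list of typed parameter values. The helper `sql_request`, the datasource `wikipedia`, and the column names are hypothetical; the placeholder count check is deliberately naive and would miscount a `?` inside a string literal.

```python
import json


def sql_request(query, *parameters):
    """Build a JSON request body with one typed value per '?' placeholder."""
    if query.count("?") != len(parameters):
        raise ValueError("number of parameters does not match placeholders")
    return json.dumps({
        "query": query,
        "parameters": [
            {"type": sql_type, "value": value} for sql_type, value in parameters
        ],
    })


# Parameters are bound in order of appearance at execution time.
payload = sql_request(
    "SELECT COUNT(*) FROM wikipedia WHERE channel = ? AND added > ?",
    ("VARCHAR", "#en.wikipedia"),
    ("BIGINT", 100),
)
print(payload)  # body to POST to the Broker's SQL endpoint
```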

# Important Changes

# applyLimitPushDownToSegments is disabled by default

applyLimitPushDownToSegments was added in 0.17.0 to push down limit evaluation to queryable nodes, limiting results during segment scan for groupBy v2. This can lead to performance degradation, as reported in #9689, if many segments are involved in query processing. This is because “limit push down to segment scan” initializes an aggregation buffer per segment, the overhead for which is not negligible. Enable this configuration only if your query involves a relatively small number of segments per historical or realtime task.

# Roaring bitmaps as default

Druid supports two bitmap types, i.e., Roaring and CONCISE. Since Roaring bitmaps provide a better out-of-box experience (faster query speed in general), the default bitmap type is now switched to Roaring bitmaps. See Segment compression for more details about bitmaps.

# Complex metrics behavior change at ingestion time when SQL-compatible null handling is disabled (default mode)

When SQL-compatible null handling is disabled, the behavior of complex metric aggregation at ingestion time has now changed to be consistent with that at query time. The complex metrics are aggregated to the default 0 values for nulls instead of skipping them during ingestion.

# Array expression syntax change

Druid expressions now support typed constructors for creating arrays. Arrays can be defined with an explicit type. For example, <LONG>[1, 2, null] creates an array of LONG type containing 1, 2, and null. Note that you can still create an array without an explicit type. For example, [1, 2, null] is still valid syntax for creating an equivalent array. In this case, Druid will infer the type of the array from its elements. This new syntax applies to empty arrays as well. <STRING>[], <DOUBLE>[], and <LONG>[] will create an empty array of STRING, DOUBLE, and LONG type, respectively.

# Enabling pending segments cleanup by default

The pendingSegments table in the metadata store is used to create unique new segment IDs for appending tasks such as Kafka/Kinesis indexing tasks or batch tasks of appending mode. Automatic pending segments cleanup was introduced in 0.12.0, but has been disabled by default prior to 0.18....