

This repository holds the code and wiki of the MAEG5755 (Robotics) course project at CUHK (Team P.A.R.K). The team members are Pretty, Alice, Rui and Kenson. We were asked to implement a bin picking task using Rethink Robotics' Baxter and the Dexnet grasp pose detector.

All packages follow ROS conventions. Together they form a collection of packages that runs Baxter with a RealSense depth camera through the MoveIt interface and adopts Dexnet 4.0 as the grasp pose detector.

- This node is a bridge between the RGBD camera and ROS. It publishes the relevant camera data, including the camera intrinsics, RGB image, depth image and point cloud. These are visualized in RViz and used by other nodes in the system.

- The factory-calibrated intrinsics of the RealSense RGBD camera are loaded from the device, so no manual calibration is needed.

- The hand-eye calibration result is published in the same launch file; it describes the spatial relationship between the camera optical frame and the robot base frame.
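The published intrinsics are what let other nodes turn a depth pixel into a 3D point in the camera optical frame. A minimal sketch of pinhole back-projection, assuming the intrinsics arrive in the row-major 9-element `K` layout used by sensor_msgs/CameraInfo and ignoring lens distortion; the function name and the example intrinsic values are hypothetical, not read from a real device.

```python
from typing import Sequence, Tuple


def deproject_pixel(K: Sequence[float], u: float, v: float, depth_m: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with metric depth into a 3D point in the camera optical frame.

    K is the 3x3 intrinsic matrix flattened row-major, the same layout as the
    9-element K field of sensor_msgs/CameraInfo: [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    Lens distortion is ignored in this sketch.
    """
    fx, cx = K[0], K[2]
    fy, cy = K[4], K[5]
    # Pinhole model: u = fx * X / Z + cx and v = fy * Y / Z + cy, solved for X and Y.
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 stream; real values come from the device.
K_EXAMPLE = [615.0, 0.0, 320.0, 0.0, 615.0, 240.0, 0.0, 0.0, 1.0]
```

A pixel at the principal point (320, 240) maps to a point straight ahead on the optical (Z) axis, which is a quick sanity check for any intrinsics you load.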


This node is a bridge between the Dexnet 4.0 GQCNN and ROS. It waits for a depth image and the camera intrinsics on ROS topics, then preprocesses them (unit and data type conversion, and ROI masking) before passing them to the Dexnet 4.0 GQCNN package. The Dexnet package returns a list of grasp pose candidates, each with a Q value indicating the grasp quality (0.0 to 1.0, higher is better).
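The preprocessing step can be sketched with NumPy as below. This is a minimal illustration, assuming the raw depth image is 16-bit with a depth scale of 0.001 m per unit (millimetres) and the ROI is an axis-aligned pixel rectangle; the function name, parameter names and the choice to zero out pixels outside the ROI are assumptions, and the actual node may instead pass the ROI to GQCNN as a separate segmentation mask.

```python
import numpy as np


def preprocess_depth(depth_raw: np.ndarray, roi, depth_scale: float = 0.001) -> np.ndarray:
    """Convert a raw 16-bit depth image to float32 metres and zero out pixels outside the ROI.

    depth_scale: metres per raw unit (0.001 assumes millimetre units).
    roi: (x_min, y_min, x_max, y_max) in pixels; the max bounds are exclusive.
    """
    # Unit and data type conversion: uint16 raw units -> float32 metres.
    depth_m = depth_raw.astype(np.float32) * np.float32(depth_scale)

    # ROI masking: keep only the bin region; everything else becomes 0,
    # which depth pipelines commonly treat as "no measurement".
    x_min, y_min, x_max, y_max = roi
    mask = np.zeros(depth_m.shape, dtype=bool)
    mask[y_min:y_max, x_min:x_max] = True
    depth_m[~mask] = 0.0
    return depth_m
```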


Note that the poses from Dexnet are relative to the camera optical frame, not the robot base frame, so they must be transformed from the camera frame to the robot base frame. Since the hand-eye calibration result is available, this is easily done with the transformPose function of the ROS TF listener.
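In the node itself, tf's `TransformListener.transformPose(target_frame, pose_stamped)` looks up the calibrated transform and does the math. For intuition, a minimal pure-Python sketch of that math: rotate the position by the camera-to-base rotation, add the translation, and compose the orientations. Quaternions use the ROS `(x, y, z, w)` ordering; the helper names and the calibration values in the usage note are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w), the geometry_msgs ordering


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
        aw * bw - ax * bx - ay * by - az * bz,
    )


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q, computed as q * v * conj(q)."""
    q_conj = (-q[0], -q[1], -q[2], q[3])
    x, y, z, _ = quat_mul(quat_mul(q, (v[0], v[1], v[2], 0.0)), q_conj)
    return (x, y, z)


def transform_pose(t_base_cam: Vec3, q_base_cam: Quat, p_cam: Vec3, q_cam: Quat) -> Tuple[Vec3, Quat]:
    """Express a pose given in the camera frame in the base frame.

    (t_base_cam, q_base_cam) is the camera pose in the base frame,
    i.e. what the hand-eye calibration provides.
    """
    r = rotate(q_base_cam, p_cam)
    p_base = (r[0] + t_base_cam[0], r[1] + t_base_cam[1], r[2] + t_base_cam[2])
    q_base = quat_mul(q_base_cam, q_cam)
    return p_base, q_base
```

For example, with a hypothetical camera 1.0 m above the base origin, offset 0.5 m in x and flipped 180° about x so it looks down (`q_base_cam = (1, 0, 0, 0)`), a grasp point 0.8 m in front of the camera lands at roughly `(0.5, 0.0, 0.2)` in the base frame.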


The transformed candidate with the best grasp quality is then chosen, and a Z-axis offset (e.g. 15 cm upwards, relative to the robot torso frame) is added to it. The MoveIt node then plans a valid joint trajectory and executes it.
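A minimal sketch of the selection step, assuming every candidate has already been transformed into the base/torso frame. The `GraspCandidate` type is hypothetical and keeps only the position and Q value; the orientation, which the real node also carries, is omitted for brevity. The 0.15 m default mirrors the 15 cm example above.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class GraspCandidate:
    """Hypothetical grasp candidate already expressed in the base/torso frame (metres)."""
    x: float
    y: float
    z: float
    q_value: float  # predicted grasp quality in [0.0, 1.0], higher is better


def select_pre_grasp(candidates: List[GraspCandidate], z_offset: float = 0.15) -> GraspCandidate:
    """Pick the highest-quality candidate and raise it by z_offset along the torso Z axis."""
    if not candidates:
        raise ValueError("no grasp candidates")
    best = max(candidates, key=lambda c: c.q_value)
    # Return a copy with the offset applied; the original candidate stays unchanged.
    return replace(best, z=best.z + z_offset)
```

Keeping the offset as a separate parameter makes it easy to tune how far above the object the arm stops before the final approach.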

- This node uses the MoveIt planning group interface to command the robot to a specific ready pose, given as a set of joint positions.

- This node is responsible for executing a predefined Cartesian-space motion along the x/y/z axes to perform the actual pick and place operation. Here are the predefined relative motions.

- This node provides an essential interface that lets the Baxter robot communicate with all other ROS nodes. It is a robot-specific interface that provides the joint states and implements trajectory endpoint execution and control. More details are in the Baxter Research Robot SDK documentation.

- This node provides move_group, which talks to the controllers on the robot using the FollowJointTrajectoryAction interface. This is a ROS action interface: a server on the robot must service this action, and this server is not provided by move_group itself. move_group only instantiates a client that talks to the controller action server on your robot.
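The ready-pose and Cartesian motion nodes described above can be sketched as two small helpers. This is an illustration under stated assumptions: the ready pose values and the pick sequence are hypothetical (the repo's actual values may differ), and `group` is expected to behave like `moveit_commander.MoveGroupCommander`, whose `go()` accepts a dict of joint names to positions. The waypoints would then be turned into `geometry_msgs/Pose` messages (keeping the current orientation) for `compute_cartesian_path`.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical ready pose for the left arm, in radians.
READY_POSE_LEFT: Dict[str, float] = {
    "left_s0": 0.0, "left_s1": -0.55, "left_e0": 0.0, "left_e1": 1.0,
    "left_w0": 0.0, "left_w1": 1.1, "left_w2": 0.0,
}

# Hypothetical pick sequence of relative (dx, dy, dz) moves in metres, base frame.
PICK_MOTIONS: List[Tuple[float, float, float]] = [
    (0.0, 0.0, -0.15),  # descend onto the object
    (0.0, 0.0, 0.15),   # lift it back up
    (0.0, 0.30, 0.0),   # move sideways to the place location
]


def move_to_ready(group, joint_goal: Dict[str, float]) -> bool:
    """Send a joint-space goal through a MoveGroupCommander-like object."""
    ok = group.go(joint_goal, wait=True)
    group.stop()  # make sure there is no residual movement
    return ok


def relative_to_waypoints(start: Sequence[float], motions) -> List[Tuple[float, float, float]]:
    """Accumulate relative moves into absolute end-effector positions, one per move."""
    x, y, z = start
    waypoints = []
    for dx, dy, dz in motions:
        x, y, z = x + dx, y + dy, z + dz
        waypoints.append((x, y, z))
    return waypoints
```

Accumulating the offsets from the current end-effector position keeps the motion sequence independent of where exactly the grasp happened.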

A few possible reasons:

- An apt package dependency is missing. Search the web for the missing package name and install it.

- Race conditions caused by a parallel (multi-job) build. Try rebuilding the workspace after deleting the devel and build folders, using `catkin_make -j1`.

- Make sure you have installed all recommended apt packages. A list of ROS package names was extracted from my working computer.

Remove the gazebo_ros_pkgs package from learning_ros_external_pkgs_noetic. The ros-noetic-gazebo-ros-pkgs apt package is now up to date, whereas the copy in learning_ros_external_pkgs_noetic is outdated. Rebuild your workspace afterwards to be safe. Take a break; the rebuild takes quite a while.

```bash
rm -rf $HOME/5755_ws/src/learning_ros_external_pkgs_noetic/gazebo_ros_pkgs
rm -rf $HOME/5755_ws/devel
rm -rf $HOME/5755_ws/build
# catkin_make must be run from the top level of the workspace
cd $HOME/5755_ws
catkin_make -j1
```

This is an Ubuntu hostname resolution issue. See the corresponding section of my wiki.

Two options:

- Keep your code written in Python.

- Compile only the specified package with `catkin_make --only-pkg-with-deps ar_track_alvar` (here the example package is "ar_track_alvar"). Afterwards, run `catkin_make -DCATKIN_WHITELIST_PACKAGES=""` to go back to scanning and compiling the full workspace.

Head joint: ['head_pan']

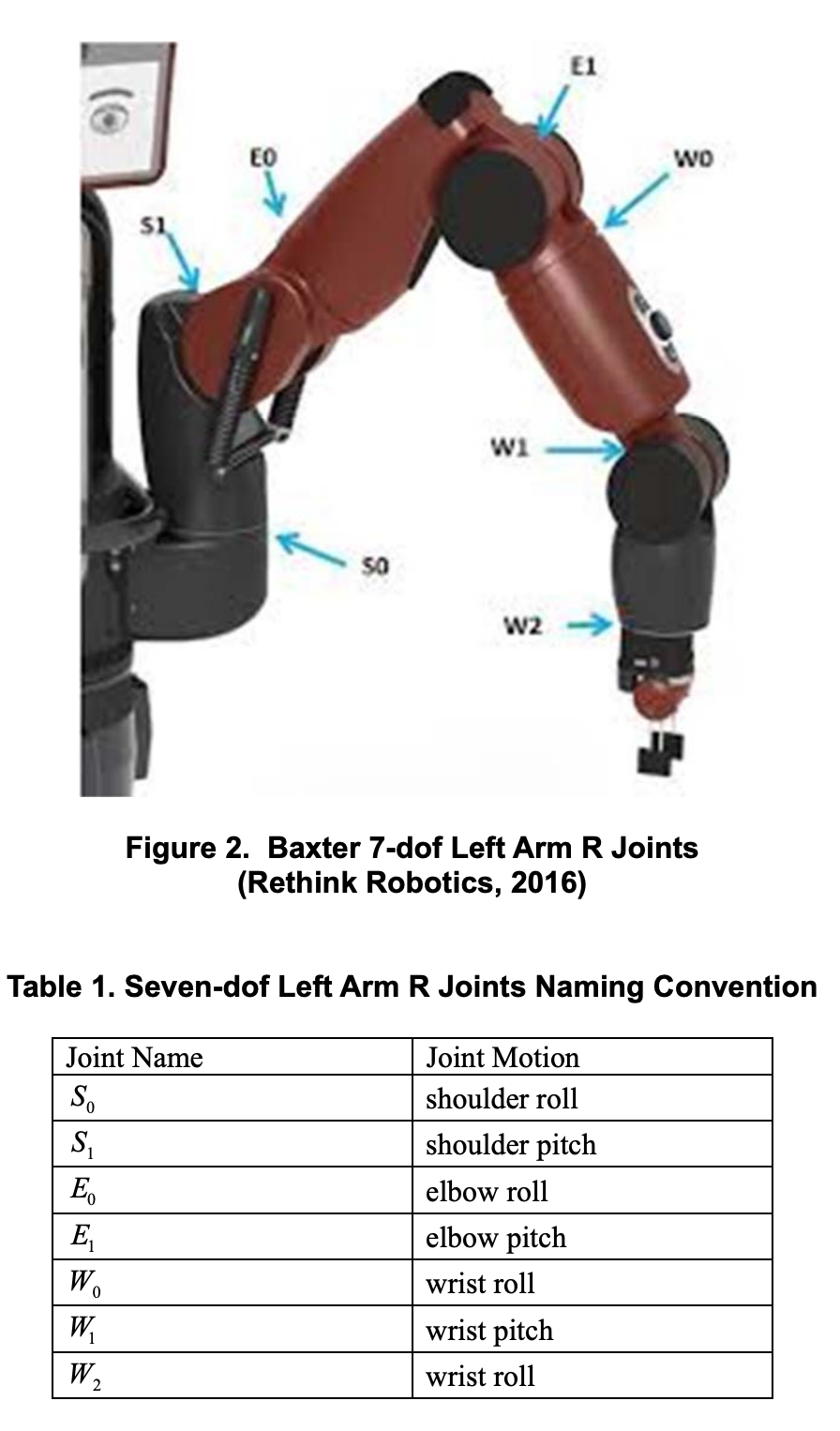

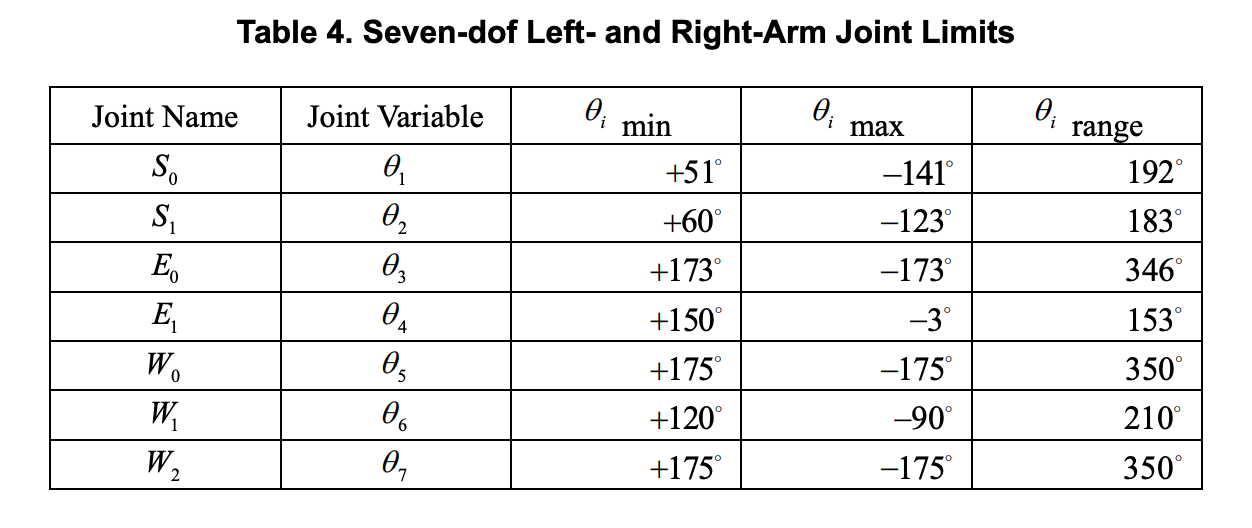

Left arm and gripper joints: ['left_s0', 'left_s1', 'left_e0', 'left_e1', 'left_w0', 'left_w1', 'left_w2', 'l_gripper_l_finger_joint', 'l_gripper_r_finger_joint']

Right arm and gripper joints: ['right_s0', 'right_s1', 'right_e0', 'right_e1', 'right_w0', 'right_w1', 'right_w2', 'r_gripper_l_finger_joint', 'r_gripper_r_finger_joint']
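When reading joint states, it is safer to look joints up by name than to rely on their position in the message. A minimal sketch, assuming the names and positions come from a sensor_msgs/JointState-style pair of parallel lists; the helper name `arm_positions` is hypothetical, and the joint names are the left arm joints listed above without the gripper fingers.

```python
from typing import Dict, List, Sequence

# Baxter left arm joints as listed above, excluding the gripper fingers.
LEFT_ARM_JOINTS: List[str] = [
    "left_s0", "left_s1", "left_e0", "left_e1", "left_w0", "left_w1", "left_w2",
]


def arm_positions(names: Sequence[str], positions: Sequence[float], wanted: Sequence[str]) -> Dict[str, float]:
    """Pick the wanted joints out of JointState-style parallel name/position lists.

    Joints are looked up by name rather than by index, so the result does not
    depend on the order in which the publisher lists them.
    """
    lookup = dict(zip(names, positions))
    missing = [j for j in wanted if j not in lookup]
    if missing:
        raise KeyError(f"joints not in message: {missing}")
    # Preserve the order of `wanted` in the returned dict.
    return {j: lookup[j] for j in wanted}
```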

The view_frames node is implemented in Python and targets Python 2, so it fails under Python 3. A patch for Python 3 is here.

Error message:

```
rosrun tf view_frames
Listening to /tf for 5.0 seconds
Done Listening
b'dot - graphviz version 2.43.0 (0)\n'
Traceback (most recent call last):
  File "/opt/ros/noetic/lib/tf/view_frames", line 119, in <module>
    generate(dot_graph)
  File "/opt/ros/noetic/lib/tf/view_frames", line 89, in generate
    m = r.search(vstr)
TypeError: cannot use a string pattern on a bytes-like object
```
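Under Python 3, subprocess output is `bytes` (the `b'...'` line in the log shows the Graphviz version string arriving that way), and a `str` regex pattern cannot search `bytes`. A minimal sketch of the kind of change a Python 3 patch needs, decoding before matching; the regex and the function name are assumptions for illustration, not copied from the tf source.

```python
import re

# Assumed version pattern; the one in /opt/ros/noetic/lib/tf/view_frames may differ.
VERSION_RE = re.compile(r".*version (\d+\.?\d*)")


def graphviz_version(raw):
    """Return the Graphviz major.minor version from `dot -V` output, or None.

    Searching bytes with a str pattern raises
    "TypeError: cannot use a string pattern on a bytes-like object",
    so bytes are decoded to str first.
    """
    if isinstance(raw, bytes):
        raw = raw.decode("utf-8", errors="replace")  # bytes -> str before matching
    m = VERSION_RE.search(raw)
    return float(m.group(1)) if m else None


# The same bytes as in the error log.
print(graphviz_version(b"dot - graphviz version 2.43.0 (0)\n"))  # prints 2.43
```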

8. Why does moveit_calibration (the HandEyeCalibration plugin) in RViz fail to solve after taking 5 samples?

The "handeye" and "baldor" ROS packages are needed as run-time dependencies, but there is no clear error message or compilation warning when they are missing. Both packages are now included in our repo; checking them out and running catkin_make should solve this.

The clock of the computer inside the real Baxter robot is not synced. Normally the robot synchronizes its time over the internet using NTP. However, if the robot is not connected to the internet, you may want to set up your own NTP service on your workstation and let Baxter sync against it as a regular NTP server.

To see the time difference between your workstation and Baxter:

```bash
ntpdate -q 011508P0007.local
```
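For reference, the offset an NTP query reports comes from the standard four-timestamp calculation of a single request/response exchange. In the query above, the workstation is the client and 011508P0007.local answers as the server. A minimal sketch; the function name and the example timestamps in the usage note are hypothetical.

```python
from typing import Tuple


def ntp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Standard NTP clock offset and round-trip delay from one exchange (seconds).

    t1: client transmit time (client clock)
    t2: server receive time (server clock)
    t3: server transmit time (server clock)
    t4: client receive time (client clock)
    A positive offset means the server clock is ahead of the client clock.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2.0
    # Round trip minus the time the server spent handling the request.
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay
```

For example, if the server clock is 2 s ahead, each network leg takes 10 ms and the server needs 1 ms to reply, then `ntp_offset_and_delay(100.0, 102.01, 102.011, 100.021)` gives an offset of about 2.0 s and a delay of about 0.02 s. The averaging cancels the network delay only when both legs take equally long.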