
Adapter Developer Guide

Note: This page is meant for developers who are interested in creating/maintaining new/current adapters. If you're only interested in using them, you probably want to visit the driver usage page instead.

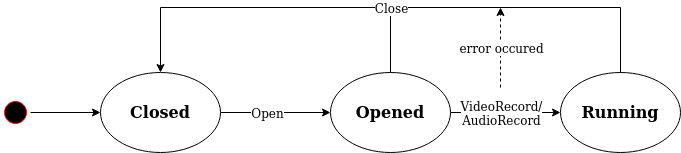

The adapter/driver design is heavily based on the Unix principle that everything is a file. You can therefore think of any adapter as a file: you open the adapter, read from it, and then close it.

First of all, every adapter has to implement the `Adapter` interface:

```go
type Adapter interface {
	Open() error
	Close() error
	Properties() []prop.Media
}
```

This interface is used to generalize different kinds of adapters. We use it so that we can store adapters in the driver manager (I'll talk about this more later).

We see that when you implement the Adapter interface, you implement the Open() and Close() methods shown in the Driver Lifecycle diagram above.

- Open

`Open()` opens the underlying hardware through an OS interface and, when needed, also requests permission to read from the hardware. After this point, the adapter user can query its `Properties` and get data from it through `VideoRecord` or `AudioRecord` (both described below).

- Close

`Close()` is a cleanup method that frees all used resources and stops the underlying hardware from transmitting data.

- Properties

`Properties()` returns the list of `prop.Media` that the adapter supports. The adapter user later chooses one of these values to pass to `VideoRecord` or `AudioRecord`.
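The lifecycle described above can be sketched with a made-up adapter. This is only an illustration: `Media` is a simplified stand-in for `prop.Media`, `fakeCamera` is hypothetical, and returning no properties while the adapter is closed is an assumption of this sketch, not documented library behavior.

```go
package main

import (
	"errors"
	"fmt"
)

// Media is a hypothetical, simplified stand-in for prop.Media.
type Media struct {
	Width, Height int
}

// Adapter mirrors the interface from this guide, with Media in place of prop.Media.
type Adapter interface {
	Open() error
	Close() error
	Properties() []Media
}

// fakeCamera is a made-up adapter that only tracks whether it is open.
type fakeCamera struct {
	opened bool
}

func (c *fakeCamera) Open() error {
	if c.opened {
		return errors.New("fakeCamera: already open")
	}
	// A real adapter would open the device through the OS here.
	c.opened = true
	return nil
}

func (c *fakeCamera) Close() error {
	// A real adapter would stop the hardware and free its resources here.
	c.opened = false
	return nil
}

func (c *fakeCamera) Properties() []Media {
	if !c.opened {
		return nil // assumption: nothing to report before Open
	}
	return []Media{{Width: 640, Height: 480}, {Width: 1280, Height: 720}}
}

func main() {
	var a Adapter = &fakeCamera{}
	if err := a.Open(); err != nil {
		fmt.Println("open failed:", err)
		return
	}
	defer a.Close()
	for _, p := range a.Properties() {
		fmt.Printf("supported: %dx%d\n", p.Width, p.Height)
	}
}
```

The adapter user follows the same open, read, close order as with a file; `defer a.Close()` keeps the cleanup next to the `Open()` call.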

Each adapter must implement either

```go
type VideoRecorder interface {
	VideoRecord(p prop.Media) (r video.Reader, err error)
}
```

or

```go
type AudioRecorder interface {
	AudioRecord(p prop.Media) (r audio.Reader, err error)
}
```

These methods do the work of getting the actual data from the hardware.

For a video adapter, you must implement the `VideoRecord` method, which interacts with the hardware to get frames and returns a `video.Reader`:

```go
// Reader as declared in package video.
type Reader interface {
	Read() (img image.Image, err error)
}
```

For an audio adapter, you must implement the `AudioRecord` method, which interacts with the hardware to get audio frames and returns an `audio.Reader`:

```go
// Reader as declared in package audio.
type Reader interface {
	Read(samples [][2]float32) (n int, err error)
}
```

Each element of `samples` holds an L and an R value. The L and the R are simply floating point numbers in the range of [0,1]; each number represents the amplitude at a given time.

In stereo, a datapoint is an 'LR pair': the input we give to the left ear and the independent input we give to the right ear at one point in time. A frame is a list of 'LR pairs'.

Mono follows the same concept as stereo, except that we give the listener the same sound in both ears, so instead of 'LR pairs' we have 'LL pairs' / 'RR pairs'. This is how you are expected to encode the audio.

You must register your adapter to make it discoverable. To register, you need a device type and a label.

Your device type is exactly one of these:

- Camera

- Screen (for screen-sharing)

- Microphone

The label is a string that describes your adapter (for metadata).

You should define a type for your adapter to hold the necessary information. You should then create an instance of that type and pass it to the `Register` method:

```go
driver.GetManager().Register(<DEVICE_INSTANCE>, driver.Info{
	Label:      <ADAPTER_LABEL>,
	DeviceType: driver.<DEVICE_TYPE>,
})
```
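To make the registration steps concrete without depending on the library, here is a toy model of the idea. None of it is the library's implementation: `toyManager`, its `Labels` helper, `myCamera`, and the string values of the device types are all hypothetical, and `Adapter` is trimmed to two methods to keep the sketch short.

```go
package main

import "fmt"

// DeviceType and Info loosely mirror driver.DeviceType and driver.Info;
// their definitions here are assumptions made for this sketch.
type DeviceType string

const (
	Camera     DeviceType = "camera"
	Screen     DeviceType = "screen"
	Microphone DeviceType = "microphone"
)

type Info struct {
	Label      string
	DeviceType DeviceType
}

// Adapter is trimmed to the lifecycle methods for brevity.
type Adapter interface {
	Open() error
	Close() error
}

// toyManager is a hypothetical registry, not the library's driver manager.
type toyManager struct {
	adapters []Adapter
	infos    []Info
}

// Register stores the adapter together with its metadata.
func (m *toyManager) Register(a Adapter, info Info) {
	m.adapters = append(m.adapters, a)
	m.infos = append(m.infos, info)
}

// Labels lists the labels registered for one device type (hypothetical helper).
func (m *toyManager) Labels(t DeviceType) []string {
	var out []string
	for _, info := range m.infos {
		if info.DeviceType == t {
			out = append(out, info.Label)
		}
	}
	return out
}

// myCamera is the adapter's own type; it holds whatever the adapter needs.
type myCamera struct {
	path string // hypothetical device path
}

func (c *myCamera) Open() error  { return nil }
func (c *myCamera) Close() error { return nil }

func main() {
	m := &toyManager{}
	m.Register(&myCamera{path: "/dev/video0"}, Info{
		Label:      "my-camera",
		DeviceType: Camera,
	})
	fmt.Println(m.Labels(Camera))
}
```

With the real library, the instance of your own type takes the place of `<DEVICE_INSTANCE>` in the `driver.GetManager().Register` call shown above.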
