-

Notifications

You must be signed in to change notification settings - Fork 0

Data processing overview

Each data "source" requires an entry in the ALA Collections application (metadata store - aka Collectory). When a new data resource is minted, it gets a unique ID in the form of dr12345 (see example for BPA Portal). This ID is then used throughout the processing as the "key" for this dataset.

Therefore any new datasets must first have a metadata ID assigned by creating a new entry in collections.ala.org.au (or collections-test.ala.org.au for testing and non-production loading). Matt can give you access to allow the creation of new entries via https://collections-test.ala.org.au/admin. Use an existing ARGA dataset as a template.

To convert a plain CSV DwC file to a Darwin Core Archive file (DwCA), the preingestion Python module is used (note the use of the arga branch). The output is a ZIP file containing 3 or more files:

-

eml.xml- metadata about the dataset, info is pulled in from the collectory -

meta.xml- metadata about the DwC data, has URIs for field headings in the CSV file and contains relational information when multiple CSV files are provided -

occurrence.csv- DwC CSV file (can also be a TSV if specified in meta.xml)

Example Python script to perform this conversion, where the input CSV file has been saved in /data/arga-data/bpa_export.csv.

from dwca import DwcaHandler

from dwca import CsvFileType

data_resource = "dr18544"

core_file = CsvFileType(files=['/data/arga-data/bpa_export.csv'], type='occurrence')

DwcaHandler.create_dwca(dr=data_resource, core_csv=core_file,

output_dwca_path=f"/data/biocache-load/{data_resource}/{data_resource}.zip", use_chunking=False)

# Copy over to server via:

# rsync -avz --progress /data/biocache-load/dr18544 [email protected]:"/data/biocache-load/dr18544/"ARGA uses the livingatlas set of pipeline processes, found at https://github.com/gbif/pipelines/tree/dev/livingatlas. The README file has all the information needed to run the processing on a local development machine.

The intermediate outputs of the processing are AVRO files, which can be viewed (as JSON) using the avro-tools utility (see README). The final solr step adds records to the SOLR index, which can be viewed via its web interface.

ARGA-specific config - save to configs/la-pipelines-local.yaml:

index:

includeSensitiveDataChecks: false

includeImages: false

collectory:

httpHeaders:

Authorization: xxxxx-xxxx-xxxx-xxxx

alaNameMatch:

wsUrl: https://namematching-ws.ala.org.au

timeoutSec: 100

retryConfig:

maxAttempts: 5

initialIntervalMillis: 5000Note the Authorization key needs to be obtained (Slack Nick).

Code snippet to run the ARGA pipelines

cd scripts

# set shell variable for dataset - dr18509 dr18541 dr18544

# DR="dr18509 dr18541 dr18544"

DR=dr18509

./la-pipelines dwca-avro $DR && ./la-pipelines interpret $DR --embedded && ./la-pipelines uuid $DR --embedded && ./la-pipelines index $DR --embedded && ./la-pipelines sample $DR --embedded && ./la-pipelines solr $DR --embeddedChecking AVRO files

avro-tools tojson ./occurrence/location/interpret-1663602980-00000-of-00008.avro | jq '. | select(.country=="Australia")' | more

Some example SOLR queries

-

https://nectar-arga-dev-4.ala.org.au/api/select?q=*:*- view all docs -

https://nectar-arga-dev-4.ala.org.au/api/select?q=dataResourceUid:dr18509- view records for one dataset -

https://nectar-arga-dev-4.ala.org.au/api/select?q=*:*&facet=true&facet.field=matchType&rows=0- view facet formatchType -

https://nectar-arga-dev-4.ala.org.au/api/get?id=1fbe4731-4ef1-4197-99ad-2c7dba1f892f- view a document

The test server https://nectar-arga-dev-4.ala.org.au/ is where SOLR is running but is also the place where new data is ingested via Pipelines. The process is roughly"

- Extract

DwCCSV files on a local computer and convert toDwCAvia thepreingestionscript - Copy the resulting file to the server via the command:

rsync -avz --progress /data/biocache-load/dr18544 [email protected]:"/data/biocache-load/dr18544/"where username is your login name for the server

- login to the server via ssh and cd to the

pipelinesdirectory:

ssh [email protected]

cd /data/pipelines/livingatlas/- Follow the instructions at https://github.com/gbif/pipelines/tree/master/livingatlas to setup and ingest a dataset. I usually do the following:

cd scripts

DR=dr18509 # set ENV variable for dataset ID. Indexing multiple datasets are space separated - DR="dr18509 dr18541 dr18544"

/la-pipelines dwca-avro $DR && ./la-pipelines interpret $DR --embedded && ./la-pipelines uuid $DR --embedded && ./la-pipelines index $DR --embedded && ./la-pipelines sample $DR --embedded && ./la-pipelines solr $DR --embeddedThis can take a while to run so sometimes I run it inside a screen command so that if my Mac goes to sleep it doesn't interrupt the process. If any of the commands error, you'll need to debug the output to see why. Assuming its all worked as expected, you should this output at the end:

05-Apr 15:50:34 [LA-PIPELINES] [dr18541] [INFO] END SOLR of dr18541 in [spark-embedded], took 12 minutes and 2 seconds.- Check the SOLR index or the React demo app to confirm the data was indexed (exercise for the reader).

- Pipelines adds extra data fields to records at the taxon level, via species species lists (marked as

authoritative). These include conservation status, traits andtaxonLocatedInCountryfields. They are currently stored in the test lists server but some lists will need to be added to the production server at some point (export CSV and import as new list entry). - the

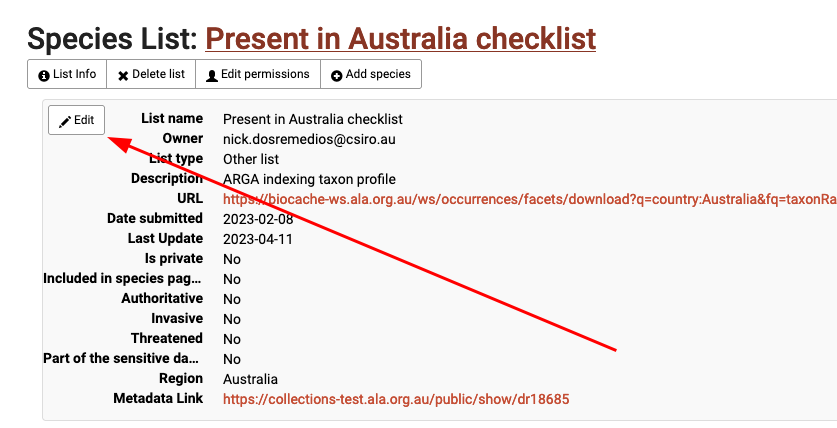

taxonLocatedInCountrylist is https://lists-test.ala.org.au/speciesListItem/list/dr18685 and is a very large list. It may have itsauthoritativeflag turned off (set tofalse) at times, due to it slowing the indexing of ALA data. So if this fields is not getting populated, check that list hasAuthoritative: Yesunder theInfosection (which is hidden by default - click theInfobutton to show). Someone with ROLE_ADMIN can edit that list via the "Edit" button.

- Pipelines has dependencies on quite a few services and some are run on the same machine via Docker (see README). Docker can sometimes fill-up the root partition on the server and this can be fixed by clearing out old and unused images (via dedicated Docker commands - google it). SOLR is also running in Docker and can sometimes crash and not come up - this is usually fixed by runnging the

docker-composeupcommand again but occassionally it needs to be created from scratch and all data is lost. In that case all datasets need to be reindexed again but only theSOLR pipeline needs to be run via./la-pipelines solr $DR --embedded`.