chore(deps): update dependency sentence_transformers to v3 #1291

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

This PR contains the following updates:

==2.7.0->==3.2.1Release Notes

UKPLab/sentence-transformers (sentence_transformers)

v3.2.1: - Patch CLIP loading, small ONNX fix, compatibility with other librariesCompare Source

This patch release fixes some small bugs, such as related to loading CLIP models, automatic model card generation issues, and ensuring compatibility with third party libraries.

Install this version with

Fixing Loading non-Transformer models

In v3.2.0, a non-Transformer based model (e.g. CLIP) would not load correctly if the model was saved in the root of the model repository/directory. This has been resolved in #3007.

Throw error if

StaticEmbedding-based model is finetuned with incompatible lossesThe following losses are not compatible with

StaticEmbedding-based models:An error is now thrown when one of these are used with a

StaticEmbedding-based model. I recommend using MultipleNegativesRankingLoss to finetune these models, e.g. as in https://huggingface.co/tomaarsen/static-bert-uncased-gooaq.Note: to get good performance, you must use much higher learning rates than otherwise. In my experiments, 2e-1 worked well.

Patch ONNX model when the model uses

output_hidden_statesFor example, this script used to fail, but passes now:

All changes

docs] Update the training snippets for some losses that should use the v3 Trainer by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2987enh] Throw error if StaticEmbedding-based model is trained with incompatible loss by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2990fix] Fix semantic_search_usearch with 'binary' by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2989model cards] Prevent crash on generating widgets if dataset column is empty by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2997warn] Throw a warning if compute_metrics is set, as it's not used by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/3002fix] Prevent IndexError if output_hidden_states & ONNX by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/3008New Contributors

Full Changelog: UKPLab/sentence-transformers@v3.2.0...v3.2.1

v3.2.0: - ONNX and OpenVINO backends offering 2-3x speedup; Static Embeddings offering 50x-500x speedups at ~10-20% performance costCompare Source

This release introduces 2 new efficient computing backends for SentenceTransformer models: ONNX and OpenVINO + optimization & quantization, allowing for speedups up to 2x-3x; static embeddings via Model2Vec allowing for lightning-fast models (i.e., 50x-500x speedups) at a ~10%-20% performance cost; and various small improvements and fixes.

Install this version with

Faster ONNX and OpenVINO Backends for SentenceTransformer (#2712)

Introducing a new

backendkeyword argument to theSentenceTransformerinitialization, allowing values of"torch"(default),"onnx", and"openvino".These come with new installations:

It's as simple as:

If you specify a

backendand your model repository or directory contains an ONNX/OpenVINO model file, it will automatically be used! And if your model repository or directory doesn't have one already, an ONNX/OpenVINO model will be automatically exported. Just remember tomodel.push_to_hubormodel.save_pretrainedinto the same model repository or directory to avoid having to re-export the model every time.All keyword arguments passed via

model_kwargswill be passed on toORTModel.from_pretrainedorOVBaseModel.from_pretrained. The most useful arguments are:provider: (Only ifbackend="onnx") ONNX Runtime provider to use for loading the model, e.g."CPUExecutionProvider". See https://onnxruntime.ai/docs/execution-providers/ for possible providers. If not specified, the strongest provider (E.g."CUDAExecutionProvider") will be used.file_name: The name of the ONNX file to load. If not specified, will default to "model.onnx" or otherwise "onnx/model.onnx" for ONNX, and "openvino_model.xml" and "openvino/openvino_model.xml" for OpenVINO. This argument is useful for specifying optimized or quantized models.export: A boolean flag specifying whether the model will be exported. If not provided, export will be set to True if the model repository or directory does not already contain an ONNX or OpenVINO model.For example:

Benchmarks

We ran benchmarks for CPU and GPU, averaging findings across 4 models of various sizes, 3 datasets, and numerous batch sizes. Here are the findings:

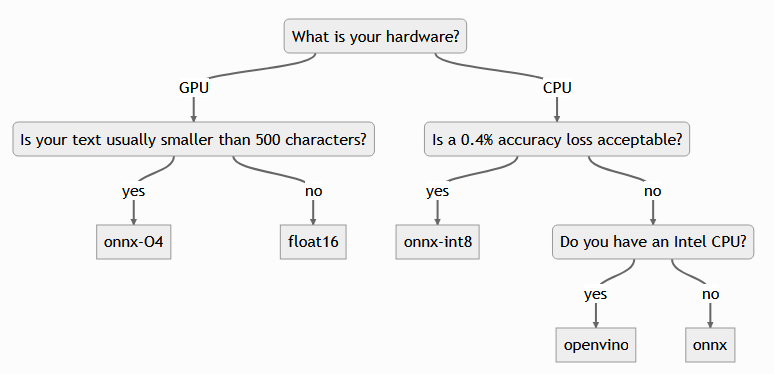

These findings resulted in these recommendations:

For GPU, you can expect 2x speedup with fp16 at no cost, and for CPU you can expect ~2.5x speedup at a cost of 0.4% accuracy.

ONNX Optimization and Quantization

In addition to exporting default ONNX and OpenVINO models, we also introduce 2 helper methods for optimizing and quantizing ONNX models:

Optimization

export_optimized_onnx_model: This function uses Optimum to implement several optimizations in the ONNX model, ranging from basic optimizations to approximations and mixed precision. Read about the 4 default options here. This function accepts:modelA SentenceTransformer model loaded withbackend="onnx".optimization_config: "O1", "O2", "O3", or "O4" from 🤗 Optimum or a customOptimizationConfiginstance.model_name_or_path: The directory or model repository where the optimized model will be saved.push_to_hub: Whether the push the exported model to the hub withmodel_name_or_pathas the repository name. If False, the model will be saved in the directory specified withmodel_name_or_path.create_pr: Ifpush_to_hub, then this denotes whether a pull request is created rather than pushing the model directly to the repository. Very useful for optimizing models of repositories that you don't have write access to.file_suffix: The suffix to add to the optimized model file name. Will use theoptimization_configstring or"optimized"if not set.The usage is like this:

After which you can load the model with:

or when it gets merged:

Quantization

export_dynamic_quantized_onnx_model: This function uses Optimum to quantize the ONNX model to int8, also allowing for hardware-specific optimizations. This results in impressive speedups for CPUs. In my findings, each of the default quantization configuration options gave approximately the same performance improvements. This function acceptsmodelA SentenceTransformer model loaded withbackend="onnx".quantization_config: "arm64", "avx2", "avx512", or "avx512_vnni" representing quantization configurations from AutoQuantizationConfig, or an QuantizationConfig instance.model_name_or_path: The directory or model repository where the optimized model will be saved.push_to_hub: Whether the push the exported model to the hub withmodel_name_or_pathas the repository name. If False, the model will be saved in the directory specified withmodel_name_or_path.create_pr: Ifpush_to_hub, then this denotes whether a pull request is created rather than pushing the model directly to the repository. Very useful for quantizing models of repositories that you don't have write access to.file_suffix: The suffix to add to the optimized model file name. Will use thequantization_configstring or e.g."int8_quantized"if not set.The usage is like this:

After which you can load the model with:

or when it gets merged:

Lightning-Fast Static Embeddings via Model2Vec (#2961)

If ONNX or OpenVINO isn't fast enough for you yet, then perhaps you'll enjoy Static Embeddings. These embeddings are a bit akin to GLoVe or Word2vec, i.e. they're bags of token embeddings that are summed together to create text embeddings, allowing for lightning-fast embeddings that don't require any neural networks.

However, these Static Embeddings are created in different ways. For example:

You can initialize Static Embeddings via Model2Vec in two ways:

from_model2vec: You can load one of the pretrained Model2Vec models:note:

pip install model2vecis needed, but not for inferenceInitialize a Sentence Transformer model with a static embedding from a pretrained model2vec model

Encode some texts

Compute similarities

note:

pip install model2vecis needed, but not for inferenceInitialize a Sentence Transformer model with a static embedding by distilling via model2vec

Encode some texts

Compute similarities

That's not a typo: I can compute embeddings for about 14000 stsb sentences from per second on CPU, compared to about ~24 with BAAI/bge-base-en-v1.5, a.k.a. 625x faster.

Small changes

InformationRetrievalEvaluatornow acceptsquery_prompt,query_prompt_name,corpus_prompt, andcorpus_prompt_namearguments, useful if your model requires specific prompts for queries and/or documents for the best performance. (#2951)mine_hard_negativesfunction now acceptsanchor_column_nameandpositive_column_namefor specifying which dataset columns will be used. If not specified, the first two columns are used, respectively. Additionally, themin_scoreparameter is added, ensuring that all mined negatives have a similarity score of at leastmin_scoreaccording to the chosenSentenceTransformerorCrossEncodermodel. (#2977)CachedGISTEmbedLosshas been improved to support multiple negatives per sample, i.e. the loss now accepts data in the(anchor, positive, negative_1, …, negative_n)format. It is the third loss to support this format (see docs):All changes

fix] Only save first module in root if "save_in_root" is specified. by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2957feat] Add query prompts to Information Retrieval Evaluator by @ArthurCamara in https://github.com/UKPLab/sentence-transformers/pull/2951model cards] Keep evaluation order in training logs if there's multiple evaluators by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2963CrossEncoder.rankby @it176131 in https://github.com/UKPLab/sentence-transformers/pull/2947feat] Add lightning-fast StaticEmbedding module based on model2vec by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2961feat] Add ONNX and OpenVINO backends by @helena-intel and @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2712New Contributors

Special thanks to @echarlaix for making the new backends possible due to some last-minute changes in

optimumandoptimum-intel.Full Changelog: UKPLab/sentence-transformers@v3.1.1...v3.2.0

v3.1.1: - Patch hard negative mining & removenumpy<2restrictionCompare Source

This patch release fixes hard negatives mining for models that don't automatically normalize their embeddings and it lifts the

numpy<2restriction that was previously required.Install this version with

Hard Negatives Mining Patch (#2944)

The

mine_hard_negativesutility introduced in the previous release would fail ifuse_faiss=True& the model does not automatically normalize its embeddings. This release patches that, allowing the utility to work with all Sentence Transformer models:Thanks to @omarnj-lab for pointing out the bug to me.

Numpy restriction lifted (#2937)

The v3.1.0 Sentence Transformers release required

numpy<2to prevent crashes on Windows. However, various third-parties (e.g. scipy) have now been recompiled & released, allowing the Windows tests to pass again.If you experience the following snippet:

Then consider 1) upgrading the dependency from which the error occurred or 2) downgrading

numpyto below v2:Thanks to @kozlek for pointing this out to me and helping getting it resolved.

All changes

deps] Attempt to remove numpy restrictions by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2937metadata] Extend pyproject.toml metadata by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2943fix] Ensure that the embeddings from hard negative mining are normalized by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2944Full Changelog: UKPLab/sentence-transformers@v3.1.0...v3.1.1

v3.1.0: - Hard Negatives Mining utility; new loss function for symmetric tasks; streaming datasets; custom modulesCompare Source

This release introduces a hard negatives mining utility to get better models out of your data, a new strong loss function for symmetric tasks, training with streaming datasets to avoid having to store datasets fully on disk, custom modules to allow for more creativity from model authors, and many bug fixes, small additions and documentation improvements.

Install this version with

Hard Negatives Mining utility (#2768, #2848)

Hard negatives are texts that are rather similar to some anchor text (e.g. a question), but are not the correct match. For example:

These negatives are more difficult for a model to distinguish from the correct answer, leading to a stronger training signal and a stronger overall model when used with one of the Loss Functions that accepts (anchor, positive, negative) pairs such as the one above.

This release introduces a utility function called

mine_hard_negativesthat allows you to mine for these hard negatives given a (anchor, positive) dataset (and optionally a corpus of negative candidate texts).It boasts the following features to give you fine-grained control over the similarity of the mined negatives relative to the anchor:

marginof the true similarity between anchor and positive.max_score.2 + num_negatives-tuples.This dataset can immediately be used in conjunction with MultipleNegativesRankingLoss, likely resulting in a stronger model than if you had just used the natural-questions dataset outright.

Here are some example datasets that I created using this new function:

Big thanks to @ChrisGeishauser and @ArthurCamara for assisting with this feature.

Add CachedMultipleNegativesSymmetricRankingLoss loss function (#2879)

Let's break this down:

The v3.1 Sentence Transformers release now introduces a new loss: CachedMultipleNegativesSymmetricRankingLoss (CMNSRL), which combines both of the previous adaptations. The result is a loss adept at symmetric training tasks for which you can pick an arbitrarily large batch size. It is likely the strongest loss for Semantic Textual Similarity (STS) tasks in Sentence Transformers now.

Big thanks to @madhavthaker1 for working to include it.

Streaming Dataset support (#2792)

The v3.1 release introduces support for training with

datasets.IterableDataset(Differences between Dataset and IterableDataset docs). This means that you can train without first downloading the full dataset to disk. For example:or

(Read more about Dataset features here)

For a full example of training with a streaming dataset, consider this script:

Advanced: Allow for Custom Modules (#2773)

Sentence Transformer models consist of several modules that are executed sequentially. Most models consist of a Transformer module, a Pooling module, and perhaps a Dense and/or Normalize module. However, as of the v3.1 release, model authors can create their own modules by writing some custom modeling code. This code can be uploaded to the Hugging Face Hub alongside the model itself, after which users can load the model like normal.

This allows for authors to replace the

Transformermodule with one that includes model-specific quirks, or replace thePoolingmodule with an all-new pooling method. This even allows for multi-modal models as authors can customize the preprocessing of the first module.jinaai/jina-clip-v1 is the first model to take advantage of this new feature, allowing you to encode both texts and images (via paths to local images or URLs) due to their custom preprocessing. Try it out yourself:

Additionally, model authors can take advantage of keyword argument passthrough. By updating the

modules.jsonfile to include a list ofkwargs, e.g.:[ { "idx": 0, "name": "0", "path": "", "type": "custom_transformer.CustomTransformer", "kwargs": ["task_type"] }, ... ]then if a user provides the

task_typekeyword argument inmodel.encode, this value will be propagated to theforwardof the custom module(s). This way, users can specify some custom functionality on the fly during inference time (as well as during load time via themodel_kwargsoption when initializing aSentenceTransformermodel).Update dependency versions (#2757)

numpy<2.0.0due to issues withtorchandnumpyinteroperability on Windows.transformersversion to 4.38.0 &huggingface-hubto 0.19.3 to prevent a training crash related to theprefetch_factoroptionSmaller Highlights

Features

show_progress_bartoencode_multi_process(#2762)revisiontopush_to_hub(#2902)cache_dirandconfig_argsto CrossEncoder (#2784)Bug fixes

GISTEmbedLosswith DataParallel (DP) and DataDistributedParallel (DDP) (#2772)GroupByLabelBatchSamplerresulting in some data not being used in training (#2788)datasetsdirectory exists locally (#2859)Matryoshka2dLossnot importing correctly (#2907)dataloader_drop_last=True(#2877)torch_compile=Truenot working in theSentenceTransformersTrainingArguments: should now work for faster training (#2884)SoftmaxLossperforming worse since v3.0 as a Linear layer was ignored by the optimizer (#2881)trainer.train(resume_from_checkpoint="...")with custom models (i.e.trust_remote_code) (#2918)model_kwargs={"torch_dtype": torch.float16}with models that use Dense layers (#2889)Documentation

All changes

versions] Increment transformers/hf-hub versions to prevent training crash by @tomaarsen in https://github.com/UKPLab/sentence-transformers/pull/2757fix] Prevent crash when encodingConfiguration

📅 Schedule: Branch creation - At any time (no schedule defined), Automerge - At any time (no schedule defined).

🚦 Automerge: Disabled by config. Please merge this manually once you are satisfied.

♻ Rebasing: Whenever PR becomes conflicted, or you tick the rebase/retry checkbox.

🔕 Ignore: Close this PR and you won't be reminded about this update again.

This PR was generated by Mend Renovate. View the repository job log.