You can find a detailed write-up about this project [here](https://blog.anomam.com/posts/2025-06-18-soft-window-transformers-hw-synthesis).

I decided to keep everything in a single module, `write.py`, so that it's easy to hack to your needs.

Make sure to install the package manager uv; it will take care of installing all the necessary Python dependencies the first time you run the script.

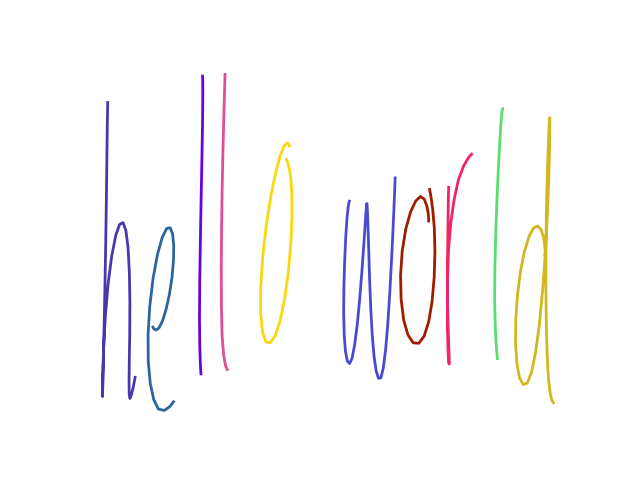

You can then generate some handwriting, for instance for the text "hello world", using:

```shell
uv run write.py "hello world" --seed 23 --bias 20
```

This should give you the following:

As a rule of thumb, try different values of the `--seed` parameter if some letters are missing or wrong, and adjust the `--bias` parameter to improve legibility.
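For intuition on what these two knobs usually do in handwriting synthesis: Graves (2013) samples each pen offset from a Gaussian mixture and introduces a "probability bias" `b`, which scales the mixture logits by `(1 + b)` and sets each standard deviation to `exp(log_sigma - b)`, so larger `b` concentrates sampling on the most likely strokes. The sketch below is a minimal illustration under the assumption that `--bias` plays a similar role here (I have not confirmed how `write.py` applies it). It uses 1-D Gaussians instead of the paper's bivariate ones, and the function names and example values are hypothetical.

```python
import math
import random


def biased_mixture_params(pi_logits, log_sigmas, bias):
    """Graves-style probability bias for one 1-D Gaussian mixture output.

    bias = 0 leaves the predicted distribution unchanged; larger values
    sharpen the mixture weights and shrink the standard deviations.
    """
    scaled = [p * (1.0 + bias) for p in pi_logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    weights = [e / total for e in exps]
    sigmas = [math.exp(s - bias) for s in log_sigmas]
    return weights, sigmas


def sample_offset(mus, pi_logits, log_sigmas, bias, rng):
    """Draw one pen offset: pick a mixture component, then sample from it."""
    weights, sigmas = biased_mixture_params(pi_logits, log_sigmas, bias)
    k = rng.choices(range(len(weights)), weights=weights)[0]
    return rng.gauss(mus[k], sigmas[k])


# Hypothetical network outputs for a single time step.
mus, pi_logits, log_sigmas = [0.0, 2.0], [1.0, 0.5], [0.0, 0.0]
for bias in (0.0, 1.0, 5.0):
    weights, sigmas = biased_mixture_params(pi_logits, log_sigmas, bias)
    print(bias, [round(w, 3) for w in weights], [round(s, 3) for s in sigmas])

# A fixed seed reproduces the same draw (presumably what --seed controls).
print(sample_offset(mus, pi_logits, log_sigmas, 1.0, random.Random(23)))
```

As the bias grows, the first component's weight approaches 1 and the spread collapses, which trades stylistic variety for neater strokes. Changing the seed only changes which random draws are made, so it can fix an unlucky sample without changing the model's predictions.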

Sometimes it helps to repeat letters so that the soft window attention doesn't accidentally skip them. This is one of the aspects of the model that could still be improved. For instance:

```shell
uv run write.py "handwriting syntthesiss" --seed 213 --bias 10
```

All additional options will be printed when running:

```shell
uv run write.py --help
# or
uv run write.py handwrite --help
```

I got a lot of help with data processing and model setup from the following resources:

- sjvasquez/handwriting-synthesis

- hardmaru/write-rnn-tensorflow

- nnsvs/nnsvs

- karpathy/nanoGPT

- karpathy/makemore
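To make the letter-skipping issue mentioned earlier more concrete, here is a toy sketch of the soft window from Graves (2013), the formulation implemented in sjvasquez/handwriting-synthesis. The window weight on character position `u` is `phi(u) = sum_k alpha_k * exp(-beta_k * (kappa_k - u)**2)`, and the window centre `kappa` can only move forward by `exp(kappa_hat)` per step. This is an assumption-laden illustration, not the transformer variant used in this project: `alpha`, `beta`, and the `kappa_hat` jumps are hand-picked rather than predicted by a network, and all function names are hypothetical.

```python
import math


def window_weights(alpha, beta, kappa, num_chars):
    """phi(u) = sum_k alpha_k * exp(-beta_k * (kappa_k - u)**2) for u = 1..U."""
    return [
        sum(a * math.exp(-b * (k - u) ** 2) for a, b, k in zip(alpha, beta, kappa))
        for u in range(1, num_chars + 1)
    ]


def step_kappa(kappa_prev, kappa_hat):
    """kappa_t = kappa_{t-1} + exp(kappa_hat_t): the window never moves backwards."""
    return [kp + math.exp(kh) for kp, kh in zip(kappa_prev, kappa_hat)]


def trace_focus(text, kappa_hats, alpha=(1.0,), beta=(4.0,)):
    """Return the character with the largest window weight at each step."""
    kappa = [0.0] * len(alpha)
    focus = []
    for kh in kappa_hats:
        kappa = step_kappa(kappa, [kh] * len(alpha))
        phi = window_weights(alpha, beta, kappa, len(text))
        focus.append(text[max(range(len(text)), key=phi.__getitem__)])
    return "".join(focus)


# exp(0.7) is about 2.01, so the third step jumps over one character.
jumps = [0.0, 0.0, 0.7, 0.0, 0.0]
print(trace_focus("write", jumps))   # wrtee: the "i" never gets the focus
print(trace_focus("wriite", jumps))  # write: the jump lands on the second "i"
```

Because the window is monotonic, a single overshoot means a letter is never attended to; duplicating that letter gives the window a second chance to land on it, which is the intuition behind the repeated-letter trick.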

If you find this work useful, please cite it as:

```bibtex
@article{anomam2025softwindowtransformers,
  title   = "Soft window attention with transformers for handwriting synthesis",
  author  = "Abou Anoma, Marc",
  journal = "blog.anomam.com",
  year    = "2025",
  url     = "https://blog.anomam.com/posts/2025-06-18-soft-window-transformers-hw-synthesis"
}
```