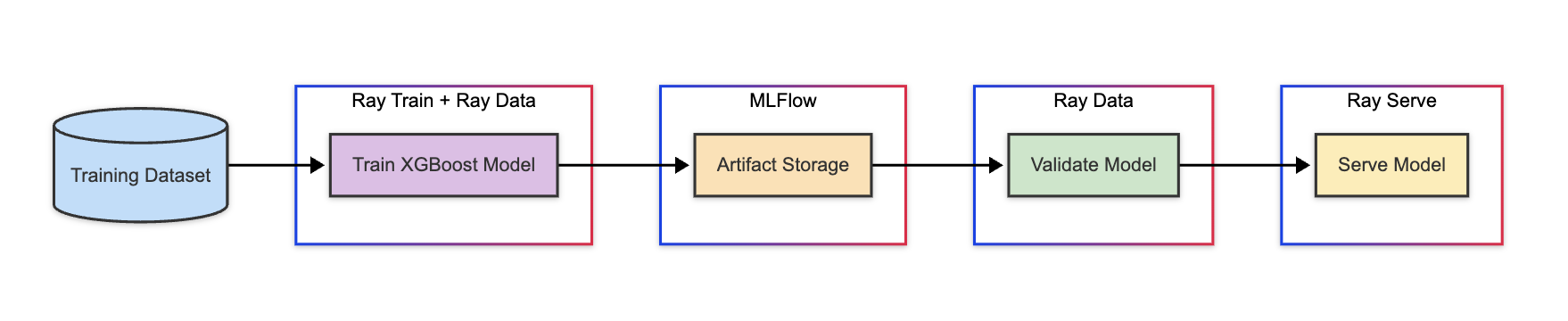

These tutorials implement an end-to-end XGBoost application including:

- Distributed data preprocessing and model training: Ingest and preprocess data at scale using Ray Data. Then, train a distributed XGBoost model using Ray Train. See Distributed training of an XGBoost model.

- Model validation using offline inference: Evaluate the model using Ray Data offline batch inference. See Model validation using offline batch inference.

- Online model serving: Deploy the model as a scalable online service using Ray Serve. See Scalable online XGBoost inference with Ray Serve.

- Production deployment: Create production batch Jobs for offline workloads such as data preparation, training, and batch prediction, and optionally deploy online Services.

```{toctree}
:hidden:

notebooks/01-Distributed_Training
notebooks/02-Validation
notebooks/03-Serving
```