Add support for using custom Environments and Strategies ⚡

#608

base: main

Codecov Report

| | main | #608 | +/- |
|---|---|---|---|
| Coverage | 83.24% | 78.60% | -4.65% |
| Files | 11 | 12 | +1 |
| Lines | 376 | 430 | +54 |
| Hits | 313 | 338 | +25 |
| Misses | 63 | 92 | +29 |

|

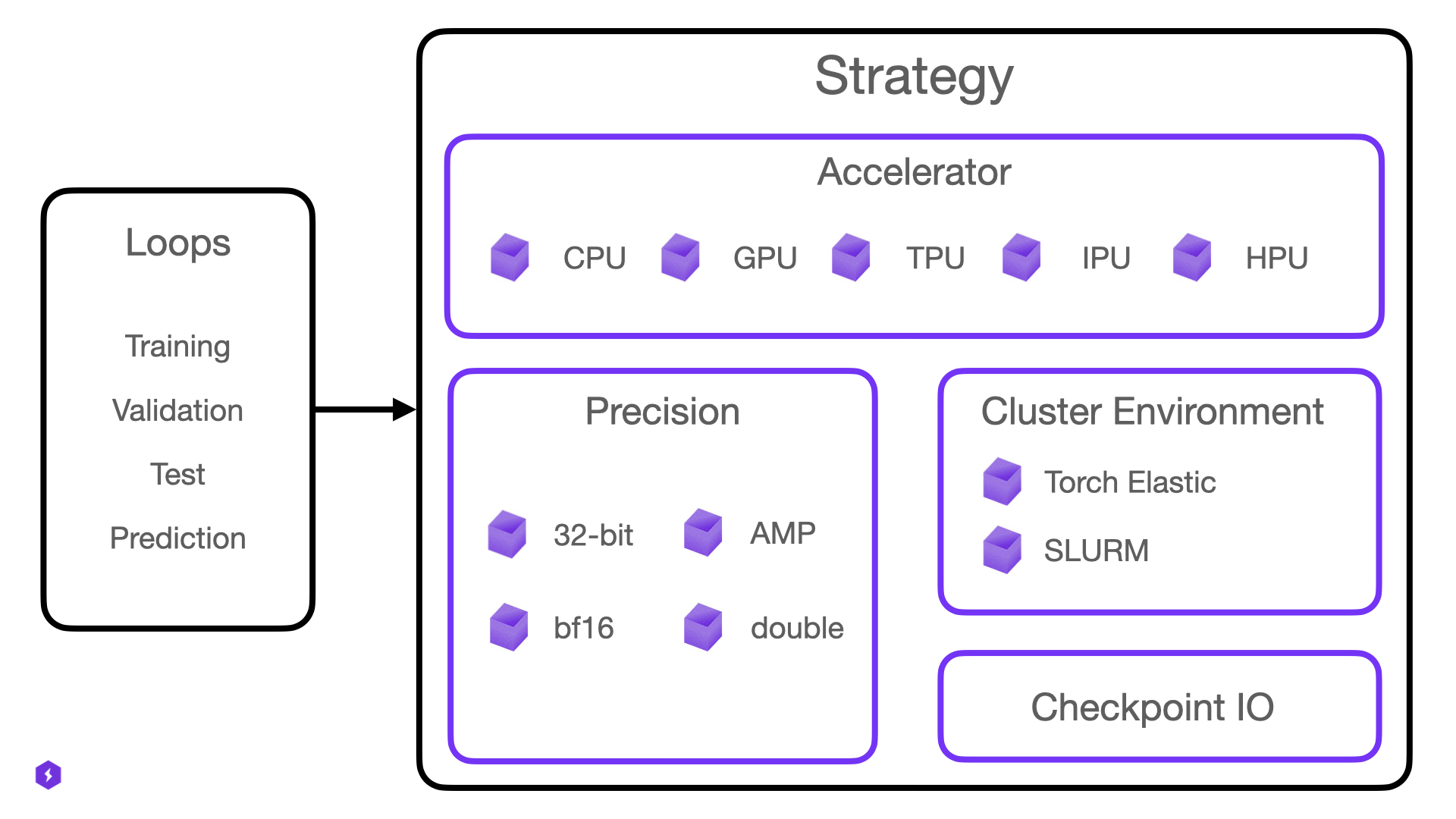

@amorehead Can you explain your rationale behind adding these additional configs? Super curious to know your thought process. Is it related to PyTorch Lightning's use of a "strategy" defined here: https://lightning.ai/docs/pytorch/stable/extensions/strategy.html? And some example strategies:

Hey, @gil2rok. Yes, Lightning's

Because this pull request has not been accepted, how did you use these advanced strategies? Did you just manually add them to the PyTorch Lightning trainer arguments?

    callbacks=callbacks,
    logger=logger,
    plugins=plugins,
    strategy=strategy,

As you can see here, one can now specify an optional strategy for Lightning to use, e.g., via OmegaConf YAML config files.
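For readers following along, here is a minimal sketch of how an optional `strategy` (or `plugins`) entry in a config could end up among the Trainer arguments. It is not the code from this PR: `build_trainer_kwargs`, the local `instantiate` helper (a tiny stand-in for `hydra.utils.instantiate`), and the top-level `plugins`/`strategy` config keys are assumptions made for illustration, and `types.SimpleNamespace` stands in for a real strategy class so the snippet runs with the standard library only.

```python
import importlib
from typing import Any, Mapping


def instantiate(node: Mapping[str, Any]) -> Any:
    """Stand-in for hydra.utils.instantiate: import `_target_`, call it with the other keys."""
    module_name, _, attr = node["_target_"].rpartition(".")
    target = getattr(importlib.import_module(module_name), attr)
    return target(**{k: v for k, v in node.items() if k != "_target_"})


def build_trainer_kwargs(cfg: Mapping[str, Any], callbacks: list, logger: Any) -> dict:
    """Collect Trainer keyword arguments, adding `plugins`/`strategy` only when configured."""
    kwargs = {"callbacks": callbacks, "logger": logger}
    for key in ("plugins", "strategy"):  # hypothetical top-level config groups
        node = cfg.get(key)
        if node is None:
            continue  # unset: leave the Trainer default untouched
        # A mapping with `_target_` is instantiated; a plain value such as "ddp" passes through.
        is_target = isinstance(node, Mapping) and "_target_" in node
        kwargs[key] = instantiate(node) if is_target else node
    return kwargs


# Stand-in config: `types.SimpleNamespace` plays the role of a real strategy class here.
cfg = {"strategy": {"_target_": "types.SimpleNamespace", "stage": 2}, "plugins": None}
trainer_kwargs = build_trainer_kwargs(cfg, callbacks=[], logger=None)
print(trainer_kwargs["strategy"])
```

In a real project the resulting dictionary would be passed on as `Trainer(**trainer_kwargs)`. Leaving unset keys out, rather than passing `None`, is a design choice of this sketch so that the Trainer keeps its own defaults.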

@gil2rok

@ashleve, may I ask you to review these changes when you get a chance? I've been using them in my own forked projects for a couple of years now, and I think the community would benefit from having them available by default.

+1 to add this feature. Would be very helpful IMO. Cool work @amorehead!!

What does this PR do?

Adds the ability for one to employ custom (e.g., Lightning Fabric) `Environments` (e.g., `SlurmEnvironment`) and `Strategies` (e.g., `DeepSpeedStrategy`) during model training or evaluation.

Before submitting

… `pytest` command?

… `pre-commit run -a` command?

Did you have fun?

Make sure you had fun coding 🙃 ⚡