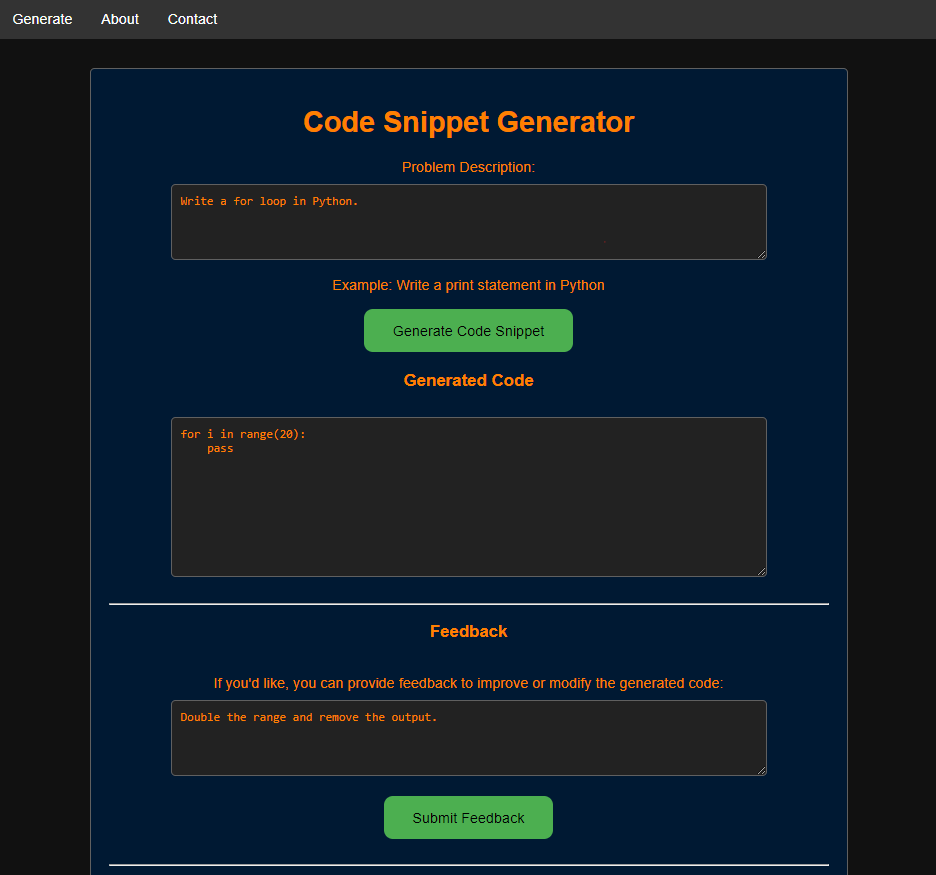

Code Snippet Generator is a web application that uses an LLM to generate code snippets from user prompts. Generated snippets can then be refined through user feedback.

Tools Used:

- Python

- FastAPI

- Docker

- HTML, CSS, JavaScript

- GPT 3.5 Turbo

The application is Dockerized and deployed on Microsoft Azure: Link

The deployment is online as of 10 March 2024, but will be taken offline eventually. Note that the application uses a personal API key, so it cannot be used indefinitely.

In order to run it locally, follow the instructions below.

ChatGPT and Copilot were used for identifying and fixing bugs.

The user interface is simple and straightforward. Type in your prompt in the text box, then click the "Generate Code Snippet" button. Once the model generates and displays your code, you will have the option to provide feedback in a new text box. You may rewrite and submit feedback as many times as you like, and the model will rewrite its output as per your instructions.
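The feedback loop described above can be pictured as building a new prompt from the original request, the previous output, and the user's feedback. The following is only a sketch of one way to do that; the function name `build_revision_prompt` and the prompt wording are hypothetical and are not taken from main.py.

```python
def build_revision_prompt(original_prompt: str, previous_code: str, feedback: str) -> str:
    """Combine the original request, the last output, and the user's feedback.

    Hypothetical helper: the real application may phrase or structure
    its revision prompt differently.
    """
    return (
        "You previously wrote code for this request:\n"
        f"{original_prompt}\n\n"
        "Your previous answer was:\n"
        f"{previous_code}\n\n"
        "Revise the code according to this feedback:\n"
        f"{feedback}\n"
    )


if __name__ == "__main__":
    # Each round of feedback produces a fresh prompt for the model.
    print(
        build_revision_prompt(
            "Write a function that reverses a string.",
            "def reverse(s):\n    return s[::-1]",
            "Add type hints.",
        )
    )
```

Because each round includes the previous output, the model can revise its own code rather than starting from scratch.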

To run the application locally without Docker:

- Install Python 3.10 or later

- Download the source code.

- Create a .env file in the same directory as main.py

- Put your OpenAI API key within the .env file

- Open the terminal and go to the project directory.

- Install dependencies by executing the following command in the terminal:

pip install -r requirements.txt

- Run the application by executing the following command in the terminal:

uvicorn main:app --reload

- Use the application by going to 'localhost:8000' in your browser.
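If the application fails to start or every request returns an error, a missing or empty API key in the .env file is a likely cause. The sketch below checks the file before you launch the server. It assumes the variable is named `OPENAI_API_KEY`, which is the name the openai Python client reads by default; the project may use a different name, so check main.py. The helper names are hypothetical, and the small parser here is only for checking the file (the application itself most likely loads it with python-dotenv, which is listed in requirements.txt).

```python
from pathlib import Path


def read_env_file(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def has_api_key(path: str = ".env", name: str = "OPENAI_API_KEY") -> bool:
    """Return True if the file exists and defines a non-empty value for `name`."""
    # `name` defaults to an assumed variable name; adjust it to match main.py.
    return Path(path).is_file() and bool(read_env_file(path).get(name))


if __name__ == "__main__":
    print("API key found" if has_api_key() else "API key missing from .env")
```

Run it from the project directory, next to main.py, so that the relative path `.env` resolves to the right file.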

To run the application with Docker instead:

- Install Docker

- Download the source code.

- Create a .env file in the same directory as main.py

- Put your OpenAI API key within the .env file

- Open the terminal and go to the project directory.

- Build the Docker image by executing the following command in the terminal:

docker build -t csg .

- Run the Docker image as a container by executing the following command in the terminal:

docker run -d --name csgc -p 80:80 csg

- Use the application by going to 'localhost:80' in your browser.

By default, Code Snippet Generator uses OpenAI's GPT-3.5 Turbo Instruct model through the OpenAI API. You can pick a different model and change the parameters to your liking. These settings are in main.py, in the call_openai_api() function.

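Browse OpenAI's documentation to choose a model, then replace the value of the model parameter, which is set to "gpt-3.5-turbo-instruct" by default. You can also adjust the model's output by changing the max_tokens and temperature parameters: max_tokens caps the length of the generated output, and temperature controls how random it is. Note that "gpt-3.5-turbo-instruct" is a completions-style model, so switching to a chat model may also require changing the API call itself. Experiment with the parameters until you find a configuration that works for you.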
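For orientation, here is a rough sketch of what a function like call_openai_api() could look like with the openai 1.x client. It is not the code from main.py: the `build_completion_params` helper is hypothetical, and the `max_tokens` and `temperature` defaults shown are assumed values chosen for illustration. Only the model name comes from this README.

```python
def build_completion_params(
    prompt: str,
    model: str = "gpt-3.5-turbo-instruct",  # default named in this README
    max_tokens: int = 512,                  # assumed value, not from main.py
    temperature: float = 0.2,               # assumed value, not from main.py
) -> dict:
    """Collect the tunable settings in one place (hypothetical helper)."""
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def call_openai_api(prompt: str, **overrides) -> str:
    """Send the prompt to the completions endpoint and return the generated text."""
    # Imported here so the helper above can be used without the openai package.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment by default
    response = client.completions.create(**build_completion_params(prompt, **overrides))
    return response.choices[0].text


if __name__ == "__main__":
    # Inspect the request settings without making an API call.
    print(build_completion_params("Write a bubble sort in Python.", temperature=0.0))
```

Lower temperature values tend to give more repeatable code, while a larger max_tokens leaves room for longer snippets at a higher cost per request.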

The application was built with Python 3.10.9, and should be compatible with any version >= 3.10. Below is the full list of required packages, as listed in requirements.txt:

- annotated-types==0.6.0

- anyio==4.3.0

- certifi==2024.2.2

- click==8.1.7

- colorama==0.4.6

- distro==1.9.0

- exceptiongroup==1.2.0

- fastapi==0.110.0

- greenlet==3.0.3

- h11==0.14.0

- httpcore==1.0.4

- httpx==0.27.0

- idna==3.6

- Jinja2==3.1.3

- MarkupSafe==2.1.5

- openai==1.13.3

- pydantic==2.6.3

- pydantic_core==2.16.3

- python-dotenv==1.0.1

- python-multipart==0.0.9

- sniffio==1.3.1

- starlette==0.36.3

- tqdm==4.66.2

- typing_extensions==4.10.0

- uvicorn==0.27.1

The application is a personal project with a small scope. As such, it does not have persistent storage and instead stores your input in memory. Once you close the application, your data will be deleted. If you are a developer and wish to implement permanent storage, consider installing the required packages and creating a SQLite or PostgreSQL database, which you can integrate through main.py.
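As a starting point for the SQLite option, the sketch below uses Python's built-in sqlite3 module, so no extra package is needed. The table layout, the file name `snippets.db`, and the function names are all hypothetical; adapt them to whatever main.py currently keeps in memory.

```python
import sqlite3


def open_store(path: str = "snippets.db") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS snippets (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            prompt TEXT NOT NULL,
            code TEXT NOT NULL,
            created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
        )
        """
    )
    conn.commit()
    return conn


def save_snippet(conn: sqlite3.Connection, prompt: str, code: str) -> int:
    """Store one prompt/code pair and return its row id."""
    cur = conn.execute(
        "INSERT INTO snippets (prompt, code) VALUES (?, ?)", (prompt, code)
    )
    conn.commit()
    return cur.lastrowid


def list_snippets(conn: sqlite3.Connection) -> list[tuple[int, str, str]]:
    """Return all stored snippets, oldest first."""
    return conn.execute(
        "SELECT id, prompt, code FROM snippets ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    conn = open_store(":memory:")  # use a file path to persist across restarts
    save_snippet(conn, "Reverse a string", "def reverse(s):\n    return s[::-1]")
    print(list_snippets(conn))
```

Passing a file path instead of ":memory:" is what makes the data survive a restart. When running in Docker, that file would also need to live on a mounted volume to outlast the container.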