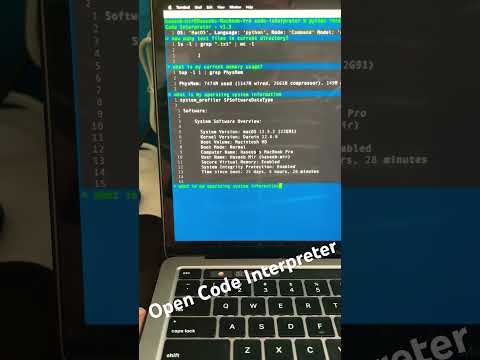

Welcome to Open-Code-Interpreter 🎉, an innovative open-source and free alternative to traditional Code Interpreters. This powerful tool leverages GPT 3.5 Turbo, PALM 2, and HuggingFace models like Code-llama, Mistral 7b, Wizard Coder, and many more to transform your instructions into executable code, free of charge and in a safe-to-use environment.

Open-Code-Interpreter is more than just a code generator. It's a versatile tool that can execute a wide range of tasks. Whether you need to find files in your system 📂, save images from a website and convert them into a different format 🖼️, create a GIF 🎞️, edit videos 🎥, or even analyze data files and create graphs 📊, Open-Code-Interpreter can handle it all.

After processing your instructions, Open-Code-Interpreter executes the generated code and provides you with the result. This makes it an invaluable tool for developers 💻, data scientists 🧪, and anyone who needs to quickly turn ideas into working code.

Designed with versatility in mind, Open-Code-Interpreter works on all major operating systems: Windows, macOS, and Linux. So, no matter which of these platforms you're on, you can take advantage of this powerful tool 💪.

Experience the future of code interpretation with Open-Code-Interpreter today! 🚀

The distinguishing feature of this interpreter, compared to others, is its commitment to remaining free 🆓. It does not require downloading any model or following tedious setup processes. It is designed to be simple and free for all users, and it works on all major operating systems: Windows, Linux, and macOS.

- 🎯 We have added support for GPT 3.5 models.

- 🌐 We have added support for the PALM-2 model using LiteLLM.

- 🔗 We have added support for changing the API base using LiteLLM.

- 🤖 More Hugging Face models with a free tier.

- 💻 Support for more operating systems.

- Features

- Installation

- Usage

- Examples

- Settings

- Structure

- Contributing

- Versioning

- License

- Acknowledgments

To get started with Open-Code-Interpreter, follow these steps:

- Clone the repository:

git clone https://github.com/haseeb-heaven/open-code-interpreter.git

cd open-code-interpreter

- Install the required packages:

pip install -r requirements.txt

- Set up the required keys.

Step 1: Obtain the HuggingFace API key.

Step 2: Visit the following URL: https://huggingface.co/settings/tokens and get your Access Token.

Step 3: Save the token in a .env file. The `>>` operator appends to the file, so keys added in the later steps do not overwrite it:

echo "HUGGINGFACE_API_KEY=Your Access Token" >> .env

Step 1: Obtain the Google PALM API key.

Step 2: Visit the following URL: https://makersuite.google.com/app/apikey

Step 3: Click on the Create API Key button.

Step 4: The generated key is your API key. Copy it and substitute it for `Your API Key` in the command below.

echo "PALM_API_KEY=Your API Key" >> .env

Step 1: Obtain the OpenAI API key.

Step 2: Visit the following URL: https://platform.openai.com/account/api-keys

Step 3: Sign up for an account or log in if you already have one.

Step 4: Navigate to the API section in your account dashboard.

Step 5: Click on the Create New Key button.

Step 6: The generated key is your API key. Copy it and substitute it for `Your API Key` in the command below.

echo "OPENAI_API_KEY=Your API Key" >> .env

- Run the interpreter with Python:

python interpreter.py -md 'code' -m 'gpt-3.5-turbo' -dc

- Run the interpreter directly:

./interpreter -md 'code' -m 'gpt-3.5-turbo' -dc
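The `echo` commands above append plain `KEY=value` lines to `.env`. Before you run either command, you can check that the keys you need are actually present, which avoids a confusing failure later. Below is a minimal, stdlib-only sketch that is not part of the project. The helper names `read_env_file` and `missing_keys` are hypothetical, and the sketch assumes `.env` holds one `KEY=value` entry per line.

```python
from pathlib import Path

# Key names come from the setup steps above; which ones you actually
# need depends on the model you select with -m.
KNOWN_KEYS = ("HUGGINGFACE_API_KEY", "PALM_API_KEY", "OPENAI_API_KEY")


def read_env_file(path=".env"):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    env_path = Path(path)
    if not env_path.is_file():
        return {}
    values = {}
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Remove optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(path=".env"):
    """Return the known keys that are absent or empty in the .env file."""
    values = read_env_file(path)
    return [key for key in KNOWN_KEYS if not values.get(key)]


if __name__ == "__main__":
    missing = missing_keys()
    print("Missing from .env:", ", ".join(missing) if missing else "none")
```

A missing key is only a problem if you pick a model that needs it. For example, `gpt-3.5-turbo` presumably needs only `OPENAI_API_KEY`.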

- 🚀 Code Execution: Open-Code-Interpreter can execute the code generated from your instructions.

- 💾 Code Saving: It can save the generated code for future use or reference.

- 📜 Command History: It can save all commands as history.

- 🔄 Mode Selection: It allows you to select the mode of operation. You can choose from `code` for generating code, `script` for generating shell scripts, or `command` for generating single-line commands.

- 🧠 Model Selection: You can set the model for code generation. By default, it uses the `code-llama` model.

- 🌐 Language Selection: You can set the interpreter language to Python or JavaScript. By default, it uses Python.

- 👀 Code Display: It can display the generated code in the output, allowing you to review the code before execution.

- 💻 Cross-Platform: Open-Code-Interpreter works on Windows, macOS, and Linux.

- 🤝 Integration with HuggingFace: It leverages HuggingFace models like Code-llama, Mistral 7b, Wizard Coder, and many more to transform your instructions into executable code.

- 🎯 Versatility: Whether you need to find files in your system, save images from a website and convert them into a different format, create a GIF, edit videos, or analyze data files and create graphs, Open-Code-Interpreter can handle it all.
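The mode, model, language, display, execution and save options above map onto the short command-line flags used throughout this README (`-md`, `-m`, `-l`, `-dc`, `-e`, `-s`). The sketch below shows how such flags could be parsed with Python's standard `argparse` module. It is a hypothetical illustration, not the project's actual `interpreter.py`. The `dest` names and the default mode of `code` are assumptions; the `code-llama` and Python defaults come from the feature list.

```python
import argparse

# Values named in this README; the real interpreter may accept more.
MODES = ("code", "script", "command")
LANGUAGES = ("python", "javascript")


def build_parser():
    """Hypothetical parser mirroring the short flags used in this README."""
    parser = argparse.ArgumentParser(description="Open-Code-Interpreter (sketch)")
    parser.add_argument("-md", dest="mode", choices=MODES, default="code",
                        help="code, script, or command (default assumed: code)")
    parser.add_argument("-m", dest="model", default="code-llama",
                        help="model name, e.g. gpt-3.5-turbo, palm-2, mistral-7b")
    parser.add_argument("-l", dest="lang", choices=LANGUAGES, default="python",
                        help="language of the generated code")
    parser.add_argument("-dc", dest="display_code", action="store_true",
                        help="show the generated code in the output")
    parser.add_argument("-e", dest="execute", action="store_true",
                        help="execute the generated code automatically")
    parser.add_argument("-s", dest="save_code", action="store_true",
                        help="save the generated code")
    return parser
```

For instance, `build_parser().parse_args(["-m", "wizard-coder", "-md", "code", "-dc", "-e"])` would set both `display_code` and `execute` to `True`. `argparse` matches `-md` exactly, so it is not confused with `-m`.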

To use Open-Code-Interpreter, use the following command options:

- Code interpreter with minimal options.

python interpreter.py -dc

- Code interpreter with GPT 3.5.

python interpreter.py -md 'code' -m 'gpt-3.5-turbo' -dc

- Code interpreter with PALM-2.

python interpreter.py -md 'code' -m 'palm-2' -dc

- Code Llama with code mode selected.

python interpreter.py -m 'code-llama' -md 'code'

- Code Llama with command mode selected.

python interpreter.py -m 'code-llama' -md 'command'

- Mistral with script mode selected.

python interpreter.py -m 'mistral-7b' -md 'script'

- Wizard Coder with code mode selected and code display.

python interpreter.py -m 'wizard-coder' -md 'code' -dc

- Wizard Coder with code mode selected, code display, and automatic execution.

python interpreter.py -m 'wizard-coder' -md 'code' -dc -e

- Code Llama with code mode selected and code saving.

python interpreter.py -m 'code-llama' -md 'code' -s

- Code Llama with code mode selected, code saving, and JavaScript as the language.

python interpreter.py -m 'code-llama' -md 'code' -s -l 'javascript'

Example of PALM-2 based on Google Vertex AI.

Example of Code Llama with code mode:

Example of Code Llama with command mode:

Example of Mistral with code mode:

You can customize the settings of the current model from the .config file. It contains all the necessary parameters such as temperature, max_tokens, and more.
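This README does not spell out the exact `.config` format. However, the lines it shows elsewhere (`api_base = ...`, `HF_MODEL = '...'`) suggest one `key = value` setting per line. Assuming that format, here is a minimal sketch of reading such a file and converting the numeric settings. `read_model_config` and `to_typed` are hypothetical names. The project's own reader (the structure listing mentions `utility_manager.py` for reading configs) may behave differently.

```python
from pathlib import Path


def read_model_config(path):
    """Parse 'key = value' lines into a dict of strings (format assumed)."""
    settings = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so URLs with query strings survive.
        key, _, value = line.partition("=")
        # Drop surrounding quotes, e.g. HF_MODEL = 'Model name here'.
        settings[key.strip()] = value.strip().strip("'\"")
    return settings


def to_typed(settings):
    """Return a copy with temperature and max_tokens converted to numbers."""
    converted = dict(settings)
    if "temperature" in converted:
        converted["temperature"] = float(converted["temperature"])
    if "max_tokens" in converted:
        converted["max_tokens"] = int(converted["max_tokens"])
    return converted
```

Keeping every value as a string in the first step and converting only known keys afterwards means unfamiliar settings are passed through unchanged rather than rejected.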

To integrate your own API server for OpenAI instead of the default server, follow these steps:

- Navigate to the `configs` directory.

- Open the configuration file for the model you want to modify. This could be either `gpt-3.5-turbo.config` or `gpt-4.config`.

- Add the following line at the end of the file:

api_base = https://my-custom-base.com

- Replace `https://my-custom-base.com` with the URL of your custom API server.

- Save and close the file.

Now, whenever you select the `gpt-3.5-turbo` or `gpt-4` model, the system will automatically use your custom server.
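The steps above are easy to do by hand, but if you switch servers often, a small script can make the same edit. The helper below is hypothetical; `set_api_base` is not part of the project. It assumes the `key = value` line format shown above and replaces any existing `api_base` line, so the file never ends up with two.

```python
from pathlib import Path


def set_api_base(config_path, url):
    """Add or replace the 'api_base = ...' line in a model config file."""
    path = Path(config_path)
    lines = path.read_text(encoding="utf-8").splitlines()
    # Keep every line except an existing api_base setting.
    kept = [ln for ln in lines if ln.split("=", 1)[0].strip() != "api_base"]
    # Append the new setting at the end of the file, as the steps describe.
    kept.append(f"api_base = {url}")
    path.write_text("\n".join(kept) + "\n", encoding="utf-8")
```

For example, `set_api_base("configs/gpt-3.5-turbo.config", "https://my-custom-base.com")` performs the edit described above. Substitute your own server URL.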

To use a new Hugging Face model:

- 📋 Copy the `.config` file and rename it to `configs/hf-model-new.config`.

- 🛠️ Modify the model parameters, such as `start_sep`, `end_sep`, and `skip_first_line`.

- 📝 Set the Hugging Face model name: `HF_MODEL = 'Model name here'`.

- 🚀 Now you can use it like this:

python interpreter.py -m 'hf-model-new' -md 'code' -e

- 📁 Make sure the name passed with `-m 'hf-model-new'` matches the config file name inside the `configs` folder.
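The copy-and-edit steps above can also be scripted. The sketch below assumes the same `key = value` format. `create_hf_config` and `config_for_model` are hypothetical helpers, not project code. The second one shows the file-name check from the last step: the value passed with `-m` must match `configs/<name>.config`.

```python
from pathlib import Path


def create_hf_config(template, name, hf_model, configs_dir="configs", **overrides):
    """Copy a template config to <configs_dir>/<name>.config with new values.

    HF_MODEL is always set; extra keyword arguments (e.g. start_sep,
    skip_first_line) replace matching lines or are appended if missing.
    """
    updates = {"HF_MODEL": f"'{hf_model}'"}
    updates.update({key: str(value) for key, value in overrides.items()})
    out_lines = []
    for line in Path(template).read_text(encoding="utf-8").splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and key in updates:
            out_lines.append(f"{key} = {updates.pop(key)}")
        else:
            out_lines.append(line)
    # Settings not present in the template are added at the end.
    out_lines.extend(f"{key} = {value}" for key, value in updates.items())
    target = Path(configs_dir) / f"{name}.config"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text("\n".join(out_lines) + "\n", encoding="utf-8")
    return target


def config_for_model(model, configs_dir="configs"):
    """Return the config path for an -m value, or fail if it does not exist."""
    path = Path(configs_dir) / f"{model}.config"
    if not path.is_file():
        raise FileNotFoundError(f"No config for -m '{model}': expected {path}")
    return path
```

For example, `create_hf_config(".config", "hf-model-new", "your-org/your-model", skip_first_line=True)` would write `configs/hf-model-new.config`. Here `your-org/your-model` is a placeholder for a real Hugging Face model ID.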

This is the directory structure of this repo.

.

|____.config: Configuration file for the project.

|____resources: Directory containing various resource files used in the project.

|____libs: Directory containing various Python modules used in the project.

| |____package_installer.py: Module for installing necessary packages.

| |____code_interpreter.py: Module for code execution and management.

| |____markdown_code.py: Handles markdown messages and code snippets.

| |____logger.py: Logs interpreter activities.

| |____utility_manager.py: Provides utility functions like reading configs and getting OS platform.

|____README.md: Project's main documentation.

|____interpreter.py: Handles command-line arguments, manages code generation, and executes code.

|____logs: Directory containing log files.

| |____interpreter.log: Log file for the interpreter activities.

| |____code-interpreter.log: Log file for the code interpreter activities.

|____.gitignore: Specifies intentionally untracked files that Git should ignore.

|____.env: Environment variables for the project.

|____configs: Directory containing configuration files for different models.

| |____mistral-7b.config: Configuration file for the Mistral-7b model.

| |____wizard-coder.config: Configuration file for the Wizard Coder model.

| |____star-chat.config: Configuration file for the Star Chat model.

| |____code-llama.config: Configuration file for the Code Llama model.

| |____code-llama-phind.config: Configuration file for the Code Llama Phind model.

|____history: Directory containing history files.

| |____history.json: JSON file storing the history of commands.

|____LICENSE.txt: Text file containing the license details for the project.

If you're interested in contributing to Open-Code-Interpreter, we'd love to have you! Please fork the repository and submit a pull request. We welcome all contributions and are always eager to hear your feedback and suggestions for improvements.

🚀 v1.0 - Initial release.

📊 v1.1 - Added Graphs and Charts support.

🔥 v1.2 - Added LiteLLM Support.

🌟 v1.3 - Added GPT 3.5 Support.

🌴 v1.4 - Added PALM 2 Support.

🎉 v1.5 - Added GPT 3.5/4 models official Support.

📝 v1.6 - Updated Code Interpreter for document files (JSON, CSV, XML).

This project is licensed under the MIT License. For more details, please refer to the LICENSE file.

Please note the following additional licensing details:

- The GPT 3.5/4 models are provided by OpenAI and are governed by their own licensing terms. Please ensure you have read and agreed to their terms before using these models. More information can be found at OpenAI's Terms of Use.

- The PALM models are officially supported by the Google PALM 2 API. These models have their own licensing terms and support. Please ensure you have read and agreed to their terms before using these models. More information can be found at Google Generative AI's Terms of Service.

- The Hugging Face models are provided by Hugging Face Inc. and are governed by their own licensing terms. Please ensure you have read and agreed to their terms before using these models. More information can be found at Hugging Face's Terms of Service.

- We would like to express our gratitude to HuggingFace, Google, Meta, and OpenAI for providing the models.

- A special shout-out to the open-source community. Your continuous support and contributions are invaluable to us.