
efficient tuning for gpt-oss #9354


base: main


Summary of Changes

This pull request focuses on enhancing the training efficiency of …


Code Review

This pull request introduces several optimizations for gpt-oss model training, including support for Flash Attention 3, the Liger Kernel, and compatibility with DeepSpeed ZeRO-3. These changes are well-targeted and should improve performance and memory usage as described. I've provided a few suggestions to enhance code maintainability and address minor issues. A key point of concern is the modification of model_args.flash_attn with a value not present in the AttentionFunction enum, which could introduce type-related bugs.

```python
load_and_register_kernel("kernels-community/vllm-flash-attn3")
setattr(config, "_attn_implementation", "kernels-community/vllm-flash-attn3")
setattr(config, "_attn_implementation_internal", "kernels-community/vllm-flash-attn3")
model_args.flash_attn = "kernels-community/vllm-flash-attn3"
```


The flash_attn attribute of model_args is of type AttentionFunction, which is an enum. You are assigning a string value "kernels-community/vllm-flash-attn3" which is not a member of this enum. While this works here because the function returns early, it can lead to unexpected behavior in other parts of the code that might expect a valid AttentionFunction member. For better type safety and maintainability, please consider adding this new attention type to the AttentionFunction enum in src/llamafactory/extras/constants.py.
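A minimal sketch of the reviewer's suggestion, assuming `AttentionFunction` is a `str`-backed `Enum`. The existing members shown and the new member name `FA3_HUB_KERNEL` are assumptions for illustration only; the real list lives in `src/llamafactory/extras/constants.py`.

```python
from enum import Enum, unique


@unique
class AttentionFunction(str, Enum):
    # Existing members are assumed for illustration; check constants.py for the real ones.
    AUTO = "auto"
    DISABLED = "disabled"
    SDPA = "sdpa"
    FA2 = "fa2"
    # Hypothetical new member for the hub-hosted Flash Attention 3 kernel.
    FA3_HUB_KERNEL = "kernels-community/vllm-flash-attn3"


def resolve_attn(value: str) -> AttentionFunction:
    """Map a raw config string onto an enum member; unknown strings raise ValueError."""
    return AttentionFunction(value)


attn = resolve_attn("kernels-community/vllm-flash-attn3")
```

With a member like this, the patch could assign `model_args.flash_attn = AttentionFunction.FA3_HUB_KERNEL` and pass `.value` to `setattr(config, "_attn_implementation", ...)`. Because the enum mixes in `str`, existing comparisons against the plain string keep working, while typos fail at parse time instead of deep inside model loading.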

```python
if model_type == "qwen3_omni_moe":
    from transformers.models.qwen3_omni_moe.modeling_qwen3_omni_moe import Qwen3OmniMoeThinkerTextSparseMoeBlock
    
    _set_z3_leaf_modules(model, [Qwen3OmniMoeThinkerTextSparseMoeBlock])
    
    
if model_type == "gpt_oss":
    from transformers.models.gpt_oss.modeling_gpt_oss import GptOssMLP
    
    _set_z3_leaf_modules(model, [GptOssMLP])
```


There are some unnecessary empty lines and lines with only whitespace. These should be removed for better code formatting and consistency.

```python
if model_type == "qwen3_omni_moe":
    from transformers.models.qwen3_omni_moe.modeling_qwen3_omni_moe import Qwen3OmniMoeThinkerTextSparseMoeBlock

    _set_z3_leaf_modules(model, [Qwen3OmniMoeThinkerTextSparseMoeBlock])

if model_type == "gpt_oss":
    from transformers.models.gpt_oss.modeling_gpt_oss import GptOssMLP

    _set_z3_leaf_modules(model, [GptOssMLP])
```
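The `_set_z3_leaf_modules` calls in this diff mark the gpt-oss MoE block (`GptOssMLP`) as a DeepSpeed ZeRO-3 "leaf". Roughly, ZeRO-3 gathers sharded parameters through per-module hooks; when a router activates only some experts per step, ranks can disagree about which expert parameters to fetch, so treating the whole MoE block as one unit avoids that. The sketch below only illustrates the marking step on a toy module tree (the `Module`, `Block`, and `Expert` classes are hypothetical stand-ins, not `torch.nn` or `transformers` classes). As far as we know, DeepSpeed's `deepspeed.utils.set_z3_leaf_modules` records the flag as a `_z3_leaf` attribute; this is an assumption, not a copy of its implementation.

```python
from typing import Iterator, List, Sequence, Type


class Module:
    """Toy stand-in for torch.nn.Module: a node with child modules."""

    def __init__(self, *children: "Module") -> None:
        self._children = list(children)

    def modules(self) -> Iterator["Module"]:
        # Depth-first walk over self and all descendants, like nn.Module.modules().
        yield self
        for child in self._children:
            yield from child.modules()


class Expert(Module):
    pass


class GptOssMLP(Module):  # hypothetical stand-in named after the transformers class
    pass


class Block(Module):
    pass


def set_z3_leaf_modules(model: Module, leaf_classes: Sequence[Type[Module]]) -> List[Module]:
    """Flag every instance of leaf_classes as a ZeRO-3 leaf (sketch)."""
    marked = [m for m in model.modules() if isinstance(m, tuple(leaf_classes))]
    if not marked:
        # Fail loudly if the class list does not match this model.
        raise ValueError(f"no modules of type {leaf_classes} found")
    for m in marked:
        m._z3_leaf = True  # assumed flag name, mirroring DeepSpeed's helper
    return marked


model = Module(
    Block(Module(), GptOssMLP(Expert(), Expert())),
    Block(Module(), GptOssMLP(Expert(), Expert())),
)
marked = set_z3_leaf_modules(model, [GptOssMLP])
```

Only the MoE containers are flagged; the experts inside them are left alone, since the point is for ZeRO-3 to stop descending into the block.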


Co-authored-by: gemini-code-assist[bot] <176961590+gemini-code-assist[bot]@users.noreply.github.com>


What I Changed

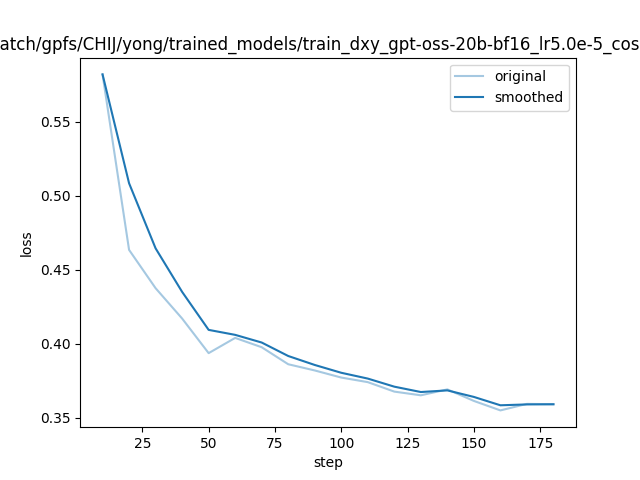

I updated three parts to make gpt-oss training run faster and use less memory:

- Flash Attention 3 support
- Liger Kernel support
- DeepSpeed ZeRO-3 compatibility

After this change, you can train gpt-oss-120b with a 60k context length using about 40 GB of memory per GPU.
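A ~40 GB per-GPU budget is only reachable because the weights are sharded across GPUs. The back-of-the-envelope sketch below assumes bf16 weights (2 bytes per parameter), about 117B total parameters for gpt-oss-120b (the commonly reported figure; check the model card), and an even ZeRO-3 split over a hypothetical 8 GPUs. It deliberately ignores gradients, optimizer state, activations, and temporarily gathered parameters, so it is a lower bound on weight storage, not a breakdown of the 40 GB figure.

```python
def bf16_weight_shard_gib(num_params: float, num_gpus: int) -> float:
    """Per-GPU size (GiB) of bf16 weights sharded evenly across num_gpus.

    Ignores gradients, optimizer state, activations, and gathered parameters.
    """
    bytes_per_param = 2  # bf16
    return num_params * bytes_per_param / num_gpus / 2**30


unsharded = bf16_weight_shard_gib(117e9, 1)  # whole model on one GPU
per_gpu_8 = bf16_weight_shard_gib(117e9, 8)  # hypothetical 8-GPU ZeRO-3 run
```

Unsharded, the weights alone are roughly 218 GiB; split over 8 GPUs they drop to about 27 GiB each, which leaves room for the remaining training state inside a ~40 GB budget.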

Environment

You need to do two things:

Install Liger Kernel: it must be installed manually from the following repository:

https://github.com/Comet0322/Liger-Kernel
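Before launching a long run, a quick import check can confirm the manual install landed in the active environment. This is a minimal sketch that assumes the repository above keeps the upstream import name `liger_kernel`; `missing_packages` is a hypothetical helper, and it only handles top-level module names.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(["liger_kernel"])  # assumed import name
if missing:
    print("Not installed:", ", ".join(missing))
```

`find_spec` locates a module without importing it, so the check is cheap and does not trigger any GPU initialization.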

Download Flash Attention 3: if your machine has internet access, first run the code below to download and cache Flash Attention 3.