Cluster Component Model

Aeron Cluster is a Raft-based consensus system designed to host applications in the form of finite state machines.

The intention is to provide a system for high performance fault tolerant services.

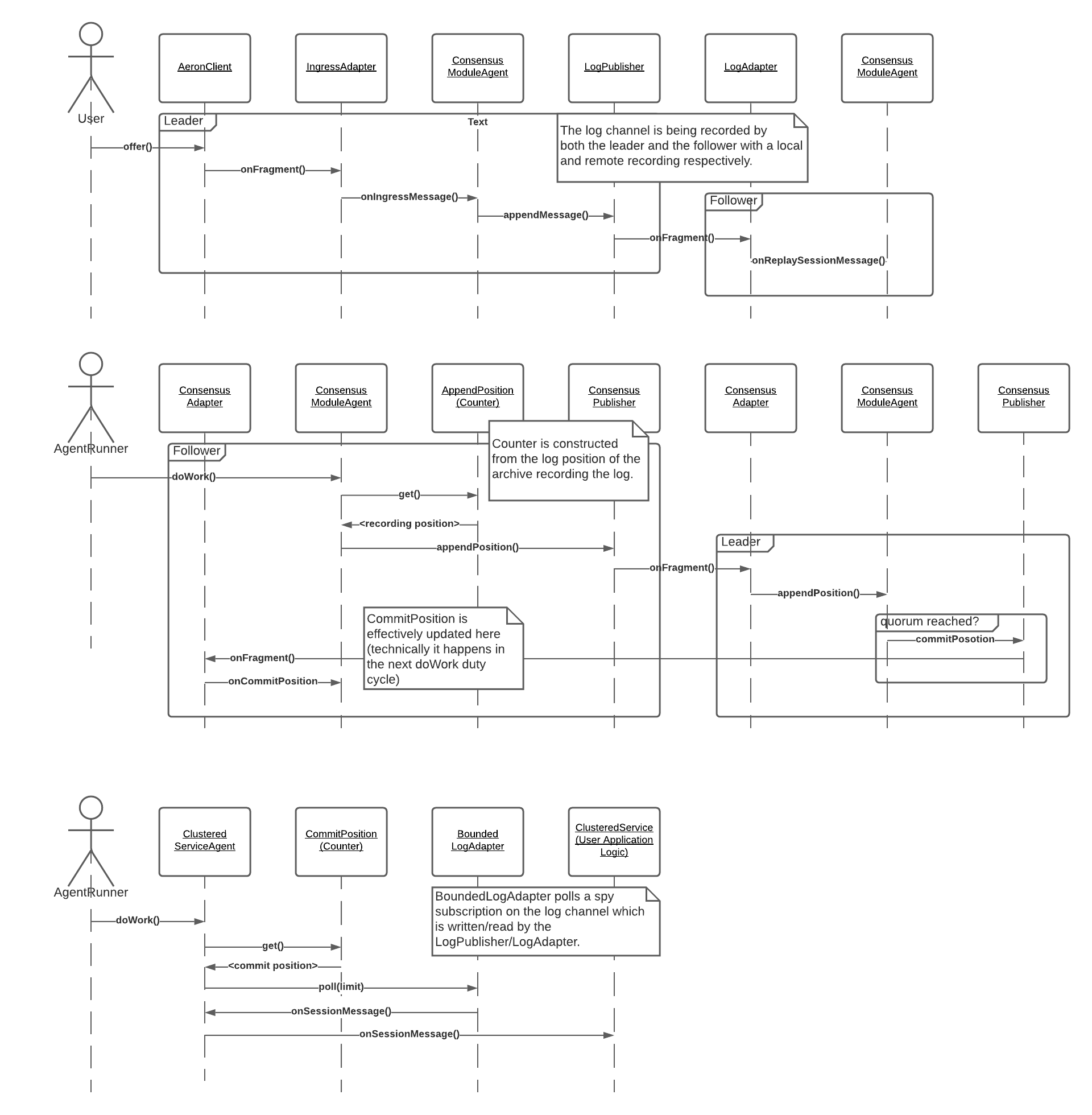

Below is a high-level diagram of some of the main components in play when a cluster is running normally (without faults).

To simplify the image some components of Aeron have been hidden (media driver, client, and archive).

This doesn't include the components that come into play during the election cycle.

Aeron Cluster, more so than any other part of Aeron, has a lot of moving parts that need to coordinate in order to provide the desired functionality.

AeronCluster is the main entry point for clients that submit messages into the cluster.

Its offer method calls directly onto a publication for the ingress channel into the cluster.

It will also maintain a subscription receiving response messages from the cluster.

The ConsensusModule is the core part of Aeron Cluster, being responsible for ensuring that nodes within the cluster are in agreement and allowing client application logic to progress in a fault tolerant fashion.

The IngressAdapter is the entry point for messages coming into the cluster.

It is constructed by the ConsensusModuleAgent and is responsible for decoding the input messages from the ingress channel before passing them onto the ConsensusModuleAgent.

The ConsensusModuleAgent is the nerve centre of Aeron Cluster. All the application and consensus messages pass through this component.

It is responsible for triggering and running Elections at the appropriate time. It will construct publications for consensus (ConsensusPublisher) and application traffic (LogPublisher), which are used for sending data onto the other instances of the Consensus Module within the cluster.

In turn, it will handle traffic from other nodes and react to it appropriately.

The LogPublisher writes to the channel that creates the single linearised log of application messages that is central to the Raft algorithm.

It is constructed by the ConsensusModuleAgent. Once the Election is closed, the leader will add a publication, start recording it to Aeron Archive, and then inject the publication into the LogPublisher.

All application messages received by the ConsensusModuleAgent (via the IngressAdapter) will be passed onto this publisher for storing to disk and replication to the other nodes in the cluster.

The LogAdapter is the receiving side of the log channel.

It is constructed by the ConsensusModuleAgent. Once an Election is closed, the followers will add a subscription to the log channel and set up a remote recording of the log channel, so that each follower has a copy of the data from the leader stored to disk.

Messages received via the log channel are forwarded onto the ConsensusModuleAgent. As the LogAdapter only runs on the followers, the callbacks on the ConsensusModuleAgent are all named onReplayXXX, because that is effectively the behaviour of a follower node: it is replaying the data from the leader.

The LogAdapter is also used during the recovery portion of the Election process.

The ConsensusPublisher is constructed for both leaders and followers and is used for passing all the traffic that ensures the nodes have reached consensus before allowing the application to progress. The two most significant events are CommitPosition and AppendPosition. The followers will send an AppendPosition message to the leader as the local copy of the application log is appended to. The leader will send a CommitPosition message once a quorum between the nodes has been reached with regard to what data has been safely recorded. The nodes will also update a CommitPosition counter either when quorum is detected (leader) or on receipt of the CommitPosition message (follower).
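The quorum rule described above can be illustrated with a small, self-contained sketch. This is a toy model rather than Aeron's actual code: the class name `QuorumPositionSketch` and the method `quorumPosition` are hypothetical, and it assumes the leader simply holds the latest known append position for every member (itself included) in an array.

```java
import java.util.Arrays;

/** Toy model of deriving a commit position from append positions; not Aeron's implementation. */
final class QuorumPositionSketch
{
    /**
     * Returns the highest log position that a majority of members have appended.
     *
     * @param appendPositions latest known append position of every member, leader included.
     */
    static long quorumPosition(final long[] appendPositions)
    {
        if (appendPositions.length == 0)
        {
            throw new IllegalArgumentException("at least one member is required");
        }

        final long[] sorted = appendPositions.clone();
        Arrays.sort(sorted); // ascending order

        final int quorum = (sorted.length / 2) + 1; // simple majority

        // The quorum-th highest value is reached or exceeded by exactly `quorum` members.
        return sorted[sorted.length - quorum];
    }

    public static void main(final String[] args)
    {
        // Leader has appended up to 4096, the two followers report 2048 and 1024.
        System.out.println(quorumPosition(new long[] {4096, 2048, 1024})); // prints 2048
    }
}
```

In this model the commit position can only advance to 2048, because that is the furthest point that two of the three nodes have safely recorded.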

The ConsensusAdapter is the receiver side of the consensus channel and decodes the messages for forwarding onto the ConsensusModuleAgent.

The clustered service is where the application logic runs.

An interesting aspect of Aeron's Raft design is that many of the components run in parallel.

This reduces the possibility of having the application logic and the ConsensusModuleAgent logic stalling each other.

The ClusteredServiceContainer uses a variation of the LogAdapter to read the same stream of log messages being received by the ConsensusModuleAgent.

It runs on a subscription that is set up as a spy of the incoming log channel, removing any data copies when moving data between the ConsensusModule and the ClusteredService.

This adapter (the BoundedLogAdapter) is bounded because the log may contain data that the cluster has yet to reach consensus on, so it must not yet be processed by the application logic. The bound is handled by having the ClusteredServiceAgent poll the CommitPosition counter owned by the ConsensusModule.
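The idea of a bounded poll can be sketched as follows. This is a simplified model, not the real adapter: `BoundedPollSketch` and its `Entry` type are hypothetical, an in-memory list stands in for the shared log, and an `AtomicLong` stands in for the CommitPosition counter.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Consumer;

/** Toy model of polling a log only up to the commit position; not Aeron's implementation. */
final class BoundedPollSketch
{
    /** A log entry together with the log position immediately after it. */
    static final class Entry
    {
        final String payload;
        final long endPosition;

        Entry(final String payload, final long endPosition)
        {
            this.payload = payload;
            this.endPosition = endPosition;
        }
    }

    private final List<Entry> log;            // stand-in for the shared log
    private final AtomicLong commitPosition;  // stand-in for the CommitPosition counter
    private int nextIndex = 0;

    BoundedPollSketch(final List<Entry> log, final AtomicLong commitPosition)
    {
        this.log = log;
        this.commitPosition = commitPosition;
    }

    /** Delivers up to {@code limit} entries that end at or before the current commit position. */
    int poll(final Consumer<String> handler, final int limit)
    {
        final long bound = commitPosition.get(); // read the counter once per poll
        int delivered = 0;

        while (delivered < limit && nextIndex < log.size())
        {
            final Entry entry = log.get(nextIndex);
            if (entry.endPosition > bound)
            {
                break; // appended locally, but not yet agreed by a quorum
            }

            handler.accept(entry.payload);
            nextIndex++;
            delivered++;
        }

        return delivered;
    }
}
```

Entries beyond the bound stay in the log untouched and are picked up by a later poll once the counter has advanced.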

The ClusteredServiceAgent is the main component within the ClusteredServiceContainer.

It takes an instance of a ClusteredService that represents the user's application logic and passes the messages it receives from the LogAdapter onto the ClusteredService.

ClusteredService is an interface that is implemented by a user to contain their application logic.

A session is created for each client that connects to the cluster.

The application logic can use the session to send response messages back to a specific client using its offer method.

Those messages will be published on the egress channel back to the client.
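The relationship between the application logic and a client's session can be sketched with plain Java stand-ins. Everything here is hypothetical and deliberately simplified: `ToySession` and `ToyService` are not the real Aeron types, strings replace binary buffers, and an in-memory list models the egress channel.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of application logic replying to a client via its session; not Aeron's API. */
final class SessionResponseSketch
{
    /** Hypothetical stand-in for a client session; offer() models publishing on egress. */
    static final class ToySession
    {
        final long id;
        final List<String> egress = new ArrayList<>();

        ToySession(final long id)
        {
            this.id = id;
        }

        boolean offer(final String response)
        {
            return egress.add(response);
        }
    }

    /** Hypothetical stand-in for the interface implemented by the user. */
    interface ToyService
    {
        void onSessionMessage(ToySession session, String message);
    }

    /** Example application logic: keeps a running total and replies to the sender only. */
    static final class CounterService implements ToyService
    {
        private long total;

        public void onSessionMessage(final ToySession session, final String message)
        {
            total += Long.parseLong(message);
            session.offer("total=" + total);
        }
    }

    public static void main(final String[] args)
    {
        final ToySession first = new ToySession(1);
        final ToySession second = new ToySession(2);
        final ToyService service = new CounterService();

        service.onSessionMessage(first, "5");
        service.onSessionMessage(second, "7");

        System.out.println(first.egress);  // prints [total=5]
        System.out.println(second.egress); // prints [total=12]
    }
}
```

The service state is shared across all sessions, while each response goes back only to the session that sent the message.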

One of the tricky concepts to internalise with respect to the cluster is that there is not a direct flow for a message from ingress to application logic. The message must be appended to a log on each of the cluster nodes, and a quorum needs to agree on which messages are available to be consumed. As well as the requirement for consensus, Aeron Cluster aims for high-performance delivery of messages, so in a number of cases the relaying of messages from one component to another is elided in favour of sharing a term buffer (using a spy subscription) and polling a counter to determine if forward progress can be made. To understand the flow of a message from ingress to application, it is necessary to break up the flow and understand the basic pieces to see how they fit together.

The three main parts of the message flow are illustrated above.

Firstly, the incoming ingress messages come in via the IngressAdapter and are published using the LogPublisher.

The leader creates a local recording and the followers create a remote recording to store the messages in the Raft log.

The followers also read the message into the ConsensusModuleAgent via the LogAdapter in order to keep internal state correct.

In the ConsensusModuleAgent, a counter called appendPosition tracks the recording position of the log from within the archive.

During the duty cycle of the ConsensusModuleAgent the followers will check the appendPosition and communicate those values back to the leader.

When the leader sees a quorum agreement on the positions within the log of the followers and its own append position, it will publish a CommitPosition event.

The CommitPosition counter will be updated by all the followers in the cluster.

The ClusteredServiceAgent, which hosts the user-defined application logic in the form of an implementation of the ClusteredService, will poll a spy subscription of the log channel.

This makes the transfer of data between the ConsensusModuleAgent and the ClusteredServiceAgent very efficient as they will effectively be sharing the data via the same underlying term buffer.

The ClusteredServiceAgent holds a reference to the CommitPosition counter and will poll that value each time it polls the subscription using the BoundedLogAdapter.

This prevents the ClusteredServiceAgent from progressing past the point at which quorum has been reached by the cluster.

The messages from the BoundedLogAdapter are passed back through the ClusteredServiceAgent and onto the application logic, where they can finally be processed by the application.