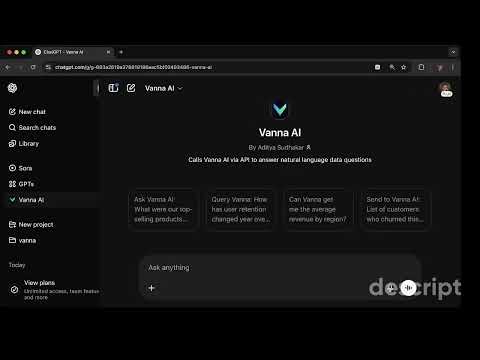

This starter kit lets you run Vanna AI through your own Custom GPT by deploying a lightweight backend that forwards requests to the Vanna API.

- `main.py`: Flask app to relay requests to Vanna
- `requirements.txt`: Python dependencies
- `Procfile`: For Railway/Heroku deployment
- `openapi.yaml`: Upload to your Custom GPT
- `.env.example`: Template for environment variables
- `README.md`: You’re reading it 🙂
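For orientation, here is a minimal sketch of the relay step that `main.py` is described as performing, written with only the standard library rather than Flask. The upstream URL `VANNA_URL`, the `Authorization: Bearer` header, and the `question` payload field are assumptions for illustration only; the real values are whatever `main.py` and the Vanna API documentation specify.

```python
import json
import os
import urllib.request
from typing import Optional

# Hypothetical upstream endpoint; replace with the URL used in main.py.
VANNA_URL = "https://vanna.example.invalid/api/ask"


def build_relay_request(payload: dict, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) the upstream request for an incoming payload."""
    key = api_key or os.environ.get("VANNA_API_KEY")
    if not key:
        raise RuntimeError("VANNA_API_KEY is not set")
    return urllib.request.Request(
        VANNA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme; check the Vanna API docs for the real header.
            "Authorization": f"Bearer {key}",
        },
        method="POST",
    )


def relay(payload: dict) -> dict:
    """Send the payload upstream and return the decoded JSON response."""
    with urllib.request.urlopen(build_relay_request(payload), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it possible to check the headers and body locally, for example with `build_relay_request({"question": "hi"}, api_key="test")`, without calling the real API.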

Create a `.env` file by copying the example:

    cp .env.example .env

Then edit `.env` and add your Vanna API key:

    VANNA_API_KEY=your-api-key-here

To deploy on Google Cloud Run, log in first and then run the deploy command:

    gcloud auth login

    gcloud run deploy vanna-api \
      --source . \
      --entry-point app \
      --runtime python311 \
      --port 8080 \
      --allow-unauthenticated \
      --region us-central1 \
      --set-env-vars VANNA_API_KEY=your-api-key-here

To deploy on Railway instead:

- Go to https://railway.app and log in

- Create a new project and deploy this repo

- Set an environment variable: `VANNA_API_KEY=your-api-key-here`

- Railway will auto-detect the Flask app from `Procfile`

To deploy on Heroku:

    heroku login
    heroku create vanna-api
    heroku config:set VANNA_API_KEY=your-api-key-here
    git push heroku main

Once the backend is deployed, connect it to your Custom GPT:

- Go to https://chat.openai.com/gpts/new

- In the Actions tab, upload the `openapi.yaml` file

- Update the `servers:` section in `openapi.yaml` with your deployed endpoint, e.g.:

      servers:
        - url: https://your-cloudrun-or-railway-url-here

- Save and start chatting!
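Before uploading, it can help to confirm that the `servers:` entry no longer holds the placeholder. The sketch below does this with a plain regular expression rather than a YAML parser, so it only handles the simple `- url: ...` layout shown above. The function names `find_server_urls` and `check_servers` are illustrative and not part of the kit, and the `https://` check is just a sanity check assumed here.

```python
import re
from pathlib import Path

# Placeholder text taken from the example servers entry in this README.
PLACEHOLDER = "your-cloudrun-or-railway-url-here"


def find_server_urls(openapi_text: str) -> list[str]:
    """Return the value of every `url:` key found in the given OpenAPI text."""
    matches = re.findall(r"^\s*-?\s*url:\s*(\S+)\s*$", openapi_text, flags=re.MULTILINE)
    # Drop surrounding quotes, which YAML allows around string values.
    return [m.strip("'\"") for m in matches]


def check_servers(openapi_text: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means it looks fine."""
    problems = []
    urls = find_server_urls(openapi_text)
    if not urls:
        problems.append("no server url found")
    for url in urls:
        if PLACEHOLDER in url:
            problems.append(f"placeholder still present: {url}")
        elif not url.startswith("https://"):
            problems.append(f"not an https URL: {url}")
    return problems


if __name__ == "__main__":
    path = Path("openapi.yaml")
    if path.exists():
        for problem in check_servers(path.read_text(encoding="utf-8")):
            print(problem)
```

Running the script from the repository root prints one line per problem and nothing when the file looks ready to upload.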

Reach out to your Vanna contact or [email protected] for assistance.