-

Notifications

You must be signed in to change notification settings - Fork 9.9k

modeld: skip redundant cast, reshape, and flatten #35735

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

base: master

Are you sure you want to change the base?

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Pull Request Overview

This PR removes redundant tensor-to-NumPy operations (cast, reshape, flatten, and extra .to("CPU")) in model inference to lower CPU usage and slightly speed up execution.

- Replace

.numpy().flatten()with direct buffer extraction via.contiguous().realize().uop.base.buffer.numpy() - Apply the same change for both vision and policy outputs in

modeld.pyand for the monitoring model indmonitoringmodeld.py

Reviewed Changes

Copilot reviewed 2 out of 2 changed files in this pull request and generated 1 comment.

| File | Description |

|---|---|

| selfdrive/modeld/modeld.py | Drop .flatten() and use direct buffer NumPy extraction |

| selfdrive/modeld/dmonitoringmodeld.py | Remove redundant .flatten() in monitoring model output process |

Comments suppressed due to low confidence (3)

selfdrive/modeld/modeld.py:166

- Removing

.flatten()changesvision_outputfrom a 1D array to its original multi-dimensional shape, which may breakslice_outputs. To preserve existing behavior, reapply a flatten step (e.g.,.reshape(-1)or.flatten()) after the NumPy conversion.

self.vision_output = self.vision_run(**self.vision_inputs).contiguous().realize().uop.base.buffer.numpy()

selfdrive/modeld/modeld.py:173

- The

.flatten()call was removed here as well, alteringpolicy_outputshape from a flat vector to multi-dimensional. Consider adding.reshape(-1)or.flatten()to maintain the expected 1D output.

self.policy_output = self.policy_run(**self.policy_inputs).contiguous().realize().uop.base.buffer.numpy()

selfdrive/modeld/dmonitoringmodeld.py:96

- By removing

.flatten(),outputwill retain its original multi-dimensional shape. If downstream logic expects a flat array, reapply a flatten (e.g.,.reshape(-1)or.flatten()) after conversion.

output = self.model_run(**self.tensor_inputs).contiguous().realize().uop.base.buffer.numpy()

| self.vision_output = self.vision_run(**self.vision_inputs).contiguous().realize().uop.base.buffer.numpy() | ||

| vision_outputs_dict = self.parser.parse_vision_outputs(self.slice_outputs(self.vision_output, self.vision_output_slices)) | ||

|

|

||

| self.full_features_buffer[0,:-1] = self.full_features_buffer[0,1:] | ||

| self.full_features_buffer[0,-1] = vision_outputs_dict['hidden_state'][0, :] | ||

| self.numpy_inputs['features_buffer'][:] = self.full_features_buffer[0, self.temporal_idxs] | ||

|

|

||

| self.policy_output = self.policy_run(**self.policy_inputs).numpy().flatten() | ||

| self.policy_output = self.policy_run(**self.policy_inputs).contiguous().realize().uop.base.buffer.numpy() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

[nitpick] The long chain .contiguous().realize().uop.base.buffer.numpy() is repeated multiple times. Consider extracting this pattern into a helper function (e.g., to_cpu_array(tensor)) to reduce duplication and improve readability.

Copilot uses AI. Check for mistakes.

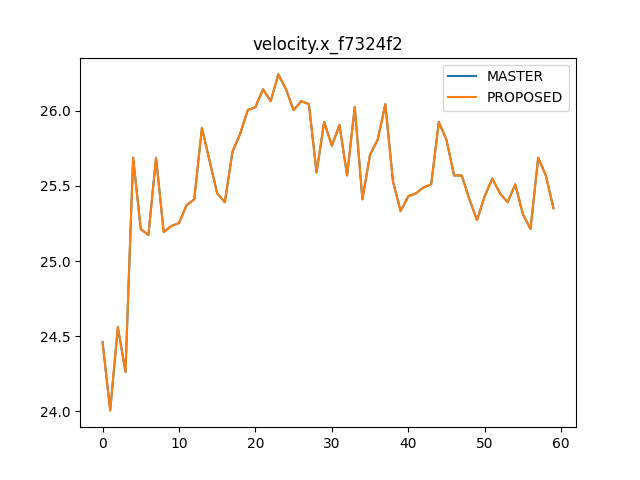

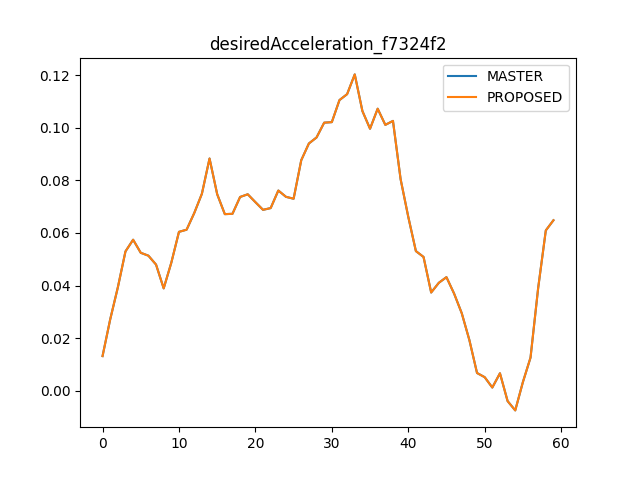

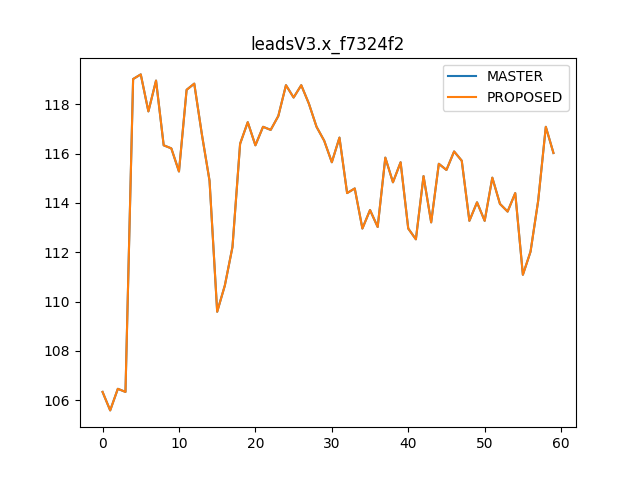

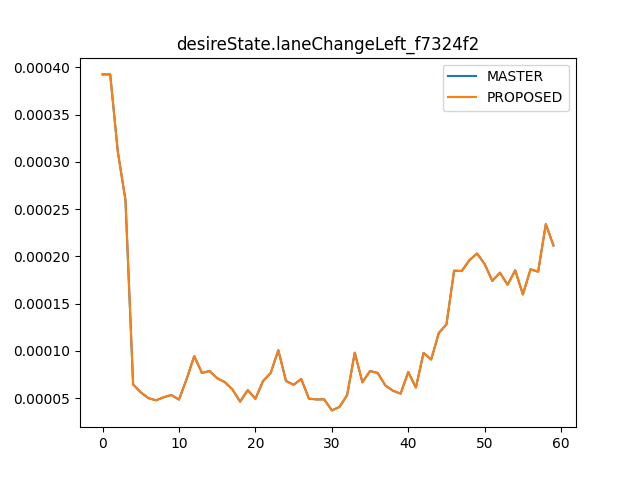

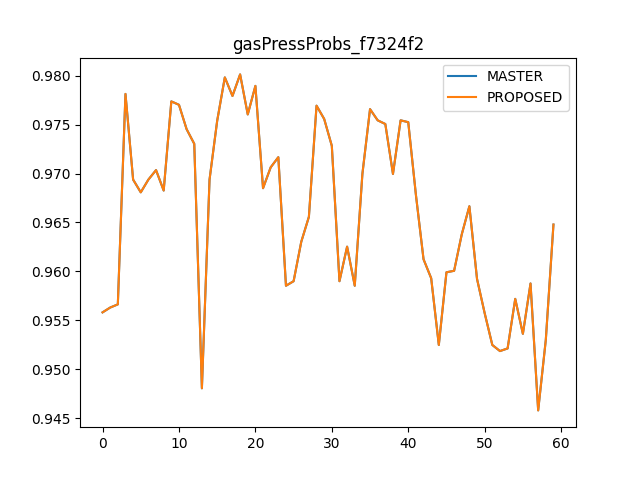

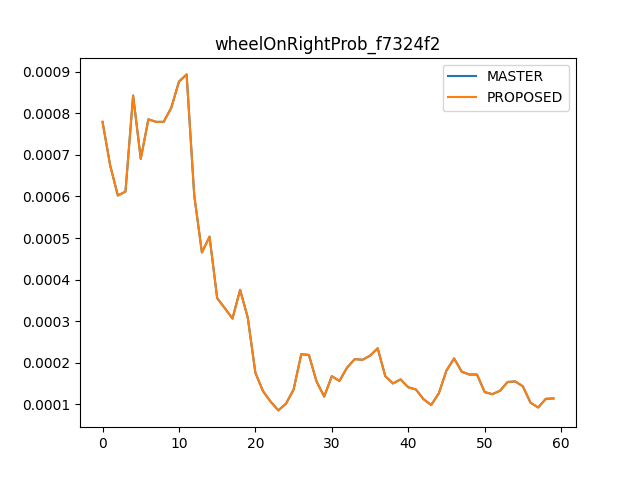

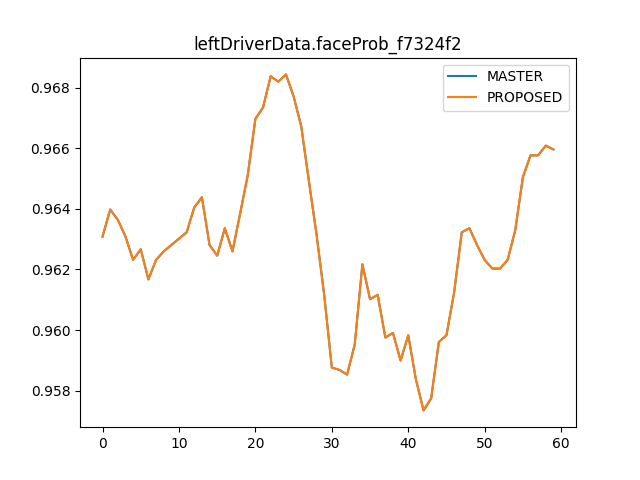

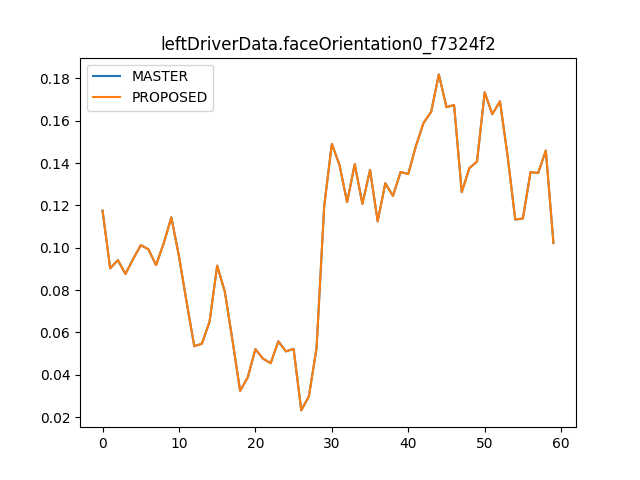

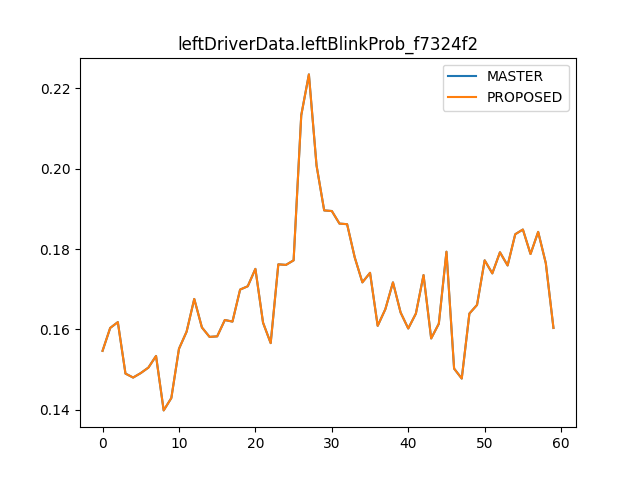

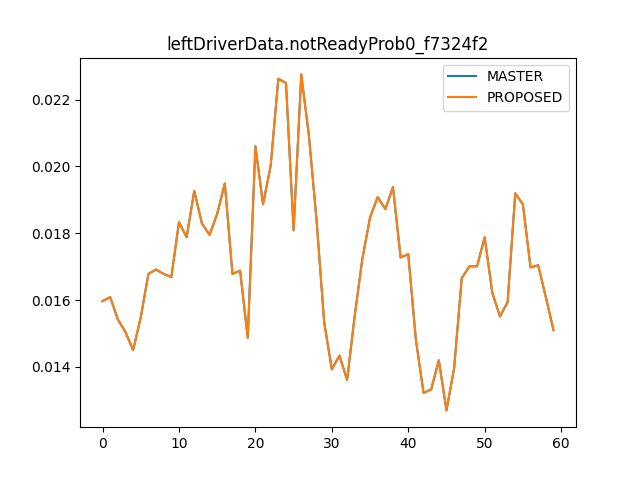

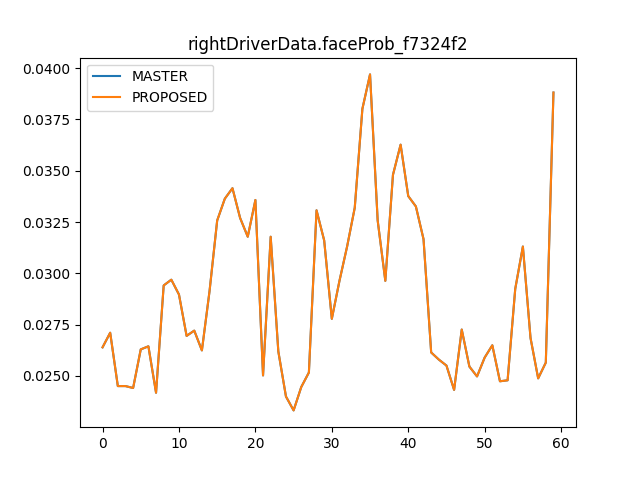

Removing the redundant operations improves

modeld.pyCPU usage from 32% to 24% and makes execution time ~2% fasterFor

dmonitoringmodeld.py, it improves CPU usage from 17% to 12% and makes execution time ~4% faster.In tinygrad,

Tensor.numpy()is essentially defined asin

compile3.py, we already cast to float32and in

modeld.pyanddmonitoringmodeld.py,flatten()is called after.numpy(), making the reshape redundant.There is also an extra .to("CPU") which I think currently causes tinygrad to do a useless copy from CPU to NPY

gotta afford the hey comma cpu usage somehow